Character.AI and Google have reached settlements in several lawsuits brought by families who alleged that the Character.AI platform contributed to the deaths of their teenage sons and exposed minors to the risk of sexual exploitation. This resolution marks a significant development in the ongoing debate regarding the allocation of responsibility for AI-generated interactions among developers, distributors, and corporate partners.

The lawsuits, brought on the families' behalf by the Social Media Victims Law Center and the Tech Justice Law Project, contended that Character.AI's product was negligently designed and failed to adequately protect minors. Plaintiffs described chatbots acting as intimate partners, engaging with users in mental health crises without meaningful intervention, and facilitating role-play scenarios that allegedly led to grooming. Independent assessments cited in the litigation identified numerous cases in which accounts registered as minors were exposed to sexual content or drawn into risky conversations.

While the precise terms of the settlements remain undisclosed, the cases advanced a novel theory that treats conversational AI as a consumer product. Plaintiffs argued that the platform's design choices, including filters, age verification, escalation protocols, and human review, should be held to a duty of care when minors are involved. They asserted that the platform's protections were too simplistic and easily bypassed, and that warning labels and parental controls were rendered ineffective by the real-time, persuasive nature of interactions with virtual characters.

Google was named alongside Character.AI on claims that it contributed engineering expertise and resources to the core technology, creating a broad commercial partnership. The lawsuits contended that this relationship made Google a co-creator responsible for anticipating potential harms to young users. The suits also noted that Character.AI's co-founders were former Google employees who had worked on neural network projects there before launching their startup; the two companies later formalized the relationship through a licensing agreement reportedly valued at $2.7 billion.

By settling, Google avoids a courtroom examination of how far liability could extend to a corporate partner that did not directly operate the app but allegedly contributed to its functionality. For large corporations investing in or integrating third-party AI technologies, the outcome is a cautionary signal: indirect involvement may not shield a company from claims if plaintiffs can credibly link its design decisions and deployment choices to foreseeable risks for youth.

The lawsuits are part of a broader legal effort to hold technology platforms accountable for harm to younger users. A growing number of plaintiffs frame their cases as product liability and negligent design claims to get around the legal immunity, under Section 230 of the Communications Decency Act, that shields platforms from claims relating to user-generated content. An appellate decision involving a social app's “speed filter” indicated that design-based claims may be viable when they are tied to real-world risks.

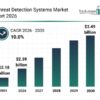

Amid these legal developments, regulators are sharpening their tools. The Federal Trade Commission has issued warnings to AI companies regarding misleading safety claims, while the National Institute of Standards and Technology emphasizes harm mitigation and human oversight for high-impact systems. Internationally, online safety regulations are moving towards imposing stricter duties of care for services accessible to children, requiring risk assessments and adequate safeguards.

The public health landscape adds urgency to these issues. According to the Centers for Disease Control and Prevention (C.D.C.), approximately 30 percent of teenage girls reported significant risk factors for suicide, with L.G.B.T.Q.+ youth at even greater risk. In this context, a chatbot designed to appear empathetic and supportive could create a “high-velocity risk environment” for users already vulnerable to feelings of isolation.

Following the settlements, experts anticipate a series of reforms aimed at improving AI safety and youth protection. Likely changes include stricter age verification, sensitive role-play scenarios turned off by default, stronger guardrails around sexual content, and real-time crisis detection that connects users with trained support staff, all backed by human review. The industry may also see more independent red-teaming, third-party audits, and safety benchmarks with measurable targets for reducing exposure to harmful content.

For both Character.AI and Google, this resolution marks the end of a challenging chapter, but it does not resolve the underlying issues. As AI chatbots transition from novelty to daily companions for millions of teenagers, the need for product designs informed by developmental psychology becomes increasingly critical. Each case highlights a somber truth: if a system can simulate concern, it must also be designed to prevent harm.
