November 18, 2025


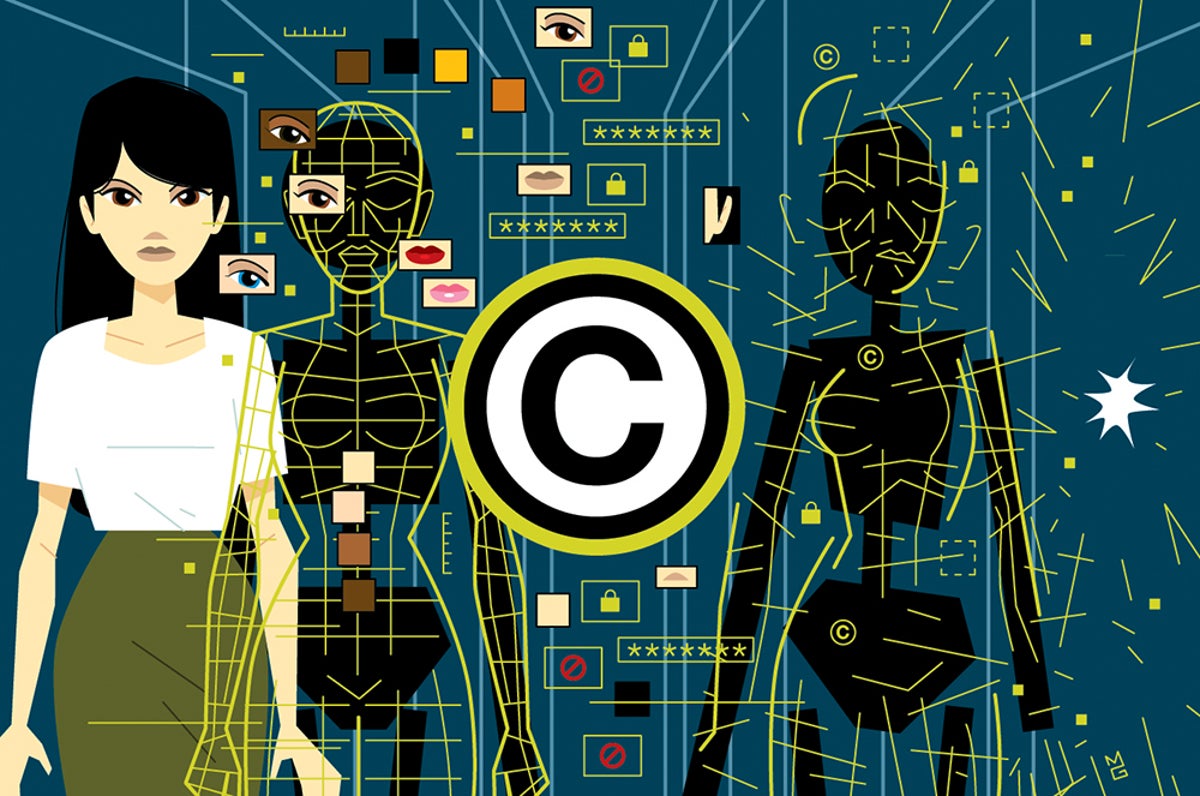

To Solve the Deepfake Problem, People Need the Rights to Their Own Image

When anyone can forge reality, society can’t self-govern. Borrowing Denmark’s approach could help the U.S. restore accountability around deepfakes

As generative artificial intelligence advances, deepfakes (deceptively realistic fabricated images, videos, and audio) are becoming increasingly sophisticated. These fabrications pose significant risks not only to individuals but also to societal norms and democratic processes. When perceptions of reality can be manipulated at will, trust erodes, complicating self-governance and critical discourse in the public sphere.

Denmark is pioneering a legislative response to the deepfake dilemma. In June, the Danish government proposed an amendment to its copyright law that would give individuals rights over their own faces and voices. The amendment would make it unlawful to create deepfakes of individuals without their explicit consent and would establish penalties for violators. At its core is the principle that every person owns their own image and likeness.

The Impact of Copyright Law on Deepfakes

The Danish approach has a notable advantage: by grounding protection in copyright law, it creates corporate accountability. A 2024 study posted to arXiv.org examined how social media platforms handle requests to remove deepfake content. Researchers found that when deepfakes were reported as copyright violations, platforms like X removed them swiftly. Reports filed under the platform's non-consensual nudity policy, by contrast, did not produce the same results, which suggests that copyright claims are a far more effective lever for getting content taken down.

This legal recognition of personal likeness is crucial given the stark realities of deepfake abuse. Victims are often exploited for financial gain or targeted for harassment. Deepfakes have been linked to severe psychological harm, including suicides among victims, particularly teenage boys targeted by scammers. Research indicates that 96 percent of deepfakes are non-consensual, and 99 percent of sexual deepfakes depict women.

The problem is widespread. A survey of more than 16,000 people across ten countries found that 2.2 percent reported having been victims of deepfake pornography. The Internet Watch Foundation also reported a 400 percent increase in AI-generated child sexual abuse imagery from the first half of 2024 to the first half of 2025, underscoring the urgency of legislative intervention.

Deepfakes also threaten democratic integrity. In a notable instance before the 2024 U.S. presidential election, Elon Musk shared a deepfake video of Vice President Kamala Harris on X, the platform he owns. Although the post violated X's own guidelines, it remained on the site, illustrating how difficult it is to contain election misinformation and its potential influence on public opinion.

Legislative Movements in the U.S. and Abroad

The U.S. has begun to respond. The bipartisan TAKE IT DOWN Act, enacted this year, criminalizes publishing, or threatening to publish, non-consensual intimate images, including deepfakes. Individual states are also taking action: Texas has outlawed deceptive AI videos intended to influence elections, and California requires platforms to address misleading AI-generated content.

Still, this patchwork of laws leaves significant gaps. Advocates are urging Congress to pass a federal law protecting individuals' rights to their own likeness, which would make it easier for victims to get content removed and to seek compensation. Proposed legislation such as the NO FAKES Act would extend these protections to all individuals, not just public figures, while the Protect Elections from Deceptive AI Act would ban deceptive deepfakes targeting federal candidates.

Internationally, the E.U. AI Act requires that synthetic media be identifiable as such, and the Digital Services Act requires major platforms to counter manipulated media. The U.S. should adopt similar measures to ensure accountability and protect consumers.

The proliferation of explicit deepfake sites must also be addressed. San Francisco's city attorney has shut down several such platforms, and California's AB 621 aims to restrict services that enable the creation of sexually explicit deepfakes. Companies such as Meta are also taking legal action against those who facilitate the production of exploitative content.

Ultimately, Denmark's approach offers a vital model for safeguarding personal rights against the misuse of emerging technologies. No legal framework can eliminate the problem entirely, but establishing accountability through legislation is essential to prevent societal upheaval and protect individual dignity.
