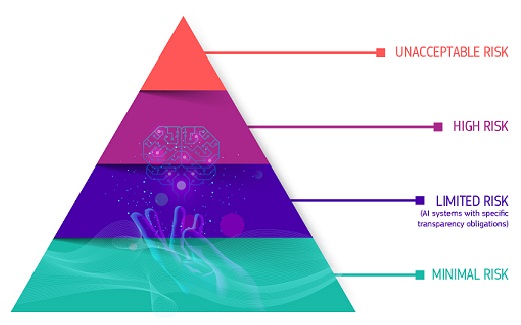

The European Union’s AI Act, set to be fully applicable by August 2026, lays out a comprehensive framework for regulating artificial intelligence, sorting AI systems into four risk levels: unacceptable, high, limited, and minimal or no risk. The act imposes strict requirements on high-risk AI systems, which could pose serious threats to health, safety, or fundamental rights, and bans outright those practices deemed to pose an unacceptable risk to individuals.

Since February 2025, the AI Act has prohibited eight practices classified as posing an unacceptable risk. These include harmful AI-based manipulation, social scoring, and real-time remote biometric identification for law enforcement purposes in public spaces. The bans aim to protect the safety, livelihoods, and rights of people in the EU. This stringent approach reflects growing concerns over the ethical implications and potential dangers of advanced AI technologies.

High-risk AI systems, which encompass applications in critical infrastructure, education, and law enforcement, will be subject to rigorous obligations before they can enter the market. Providers will be required to implement adequate risk assessment and mitigation systems, ensure high-quality datasets to minimize discriminatory outcomes, and maintain detailed documentation for compliance assessment. These rules will gradually come into effect from August 2026 to August 2027, underscoring the EU’s proactive stance in managing AI’s risks while fostering innovation.

A particularly notable aspect of the AI Act is its emphasis on transparency. Providers of generative AI will need to ensure that AI-generated content is identifiable, and certain content, such as deepfakes, must be clearly labeled. The transparency rules also oblige AI systems such as chatbots to inform users when they are interacting with a machine. The intention is to maintain public trust and ensure users are aware of AI’s role in their interactions.

Moreover, the AI Act does not impose rules for AI systems classified as minimal or no risk, which currently represent the majority of applications in the EU, including AI-enabled video games and spam filters. By distinguishing between various risk levels, the legislation aims to balance regulation with innovation, allowing low-risk AI technologies to flourish without unnecessary constraints.

As the act begins its phased implementation, compliance will be facilitated by several key instruments developed by the European Commission. Guidelines clarifying providers’ obligations, a voluntary Code of Practice for general-purpose AI (GPAI) models, and a template for public summaries of training content are all designed to help firms navigate the regulatory landscape. Additional support tools are expected to be released in the second quarter of 2026 to further aid compliance with transparency rules.

The governance of the AI Act will be overseen by the European AI Office and member state authorities, backed by advisory panels to ensure effective enforcement and compliance. This structured approach aims to mitigate compliance costs while reinforcing the safety of AI technologies across the EU.

In response to feedback on the complexities of the existing framework, the Commission has proposed amendments to streamline the implementation of the AI Act. These include an adjusted timeline for applying the high-risk rules and enhanced support for small and medium-sized enterprises (SMEs). The intention is to simplify compliance without compromising on safety or ethical standards.

As discussions continue between the European Parliament and the Council on the Digital Omnibus on AI, the implications of the AI Act extend far beyond Europe. With AI technologies increasingly permeating various sectors, how these regulations evolve could significantly influence global standards for AI governance, affecting international trade and technological collaboration.
