Advanced AI models have taken a significant leap forward with the adoption of the **mixture-of-experts** (MoE) architecture, a design that has quickly become the standard among leading open-source models. According to the Artificial Analysis leaderboard, the top 10 most intelligent open-source models, including **DeepSeek AI’s DeepSeek-R1**, **Moonshot AI’s Kimi K2 Thinking**, and **OpenAI’s gpt-oss-120B**, all use this architecture, marking a noteworthy trend in AI development.

MoE models activate only a subset of specialized “experts” for each token, much as the brain engages only the regions relevant to a given task. This selective activation allows faster, more efficient token generation without a proportional increase in compute. The benefits are amplified on **NVIDIA’s GB200 NVL72** systems: for instance, Kimi K2 Thinking runs 10 times faster on the GB200 NVL72 than on the previous-generation **NVIDIA HGX H200**.
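The selective activation described above can be illustrated with a toy router. This is a minimal sketch, not the routing used by any of the models named here: the softmax-then-top-k scheme, the scalar "experts", the router scores, and all function names are assumptions chosen for illustration.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(x, experts, router_scores, top_k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    routes = route_token(router_scores, top_k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    active = [i for i, _ in routes]
    return output, active

# Hypothetical experts: each just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
# Hypothetical router scores for a single token.
router_scores = [0.1, 2.0, 0.3, 1.5]

output, active = moe_layer(1.0, experts, router_scores, top_k=2)
print(f"active experts: {active}, output: {output:.3f}")
```

Only two of the four experts run for this token, so the compute per token tracks `top_k` rather than the total number of experts.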

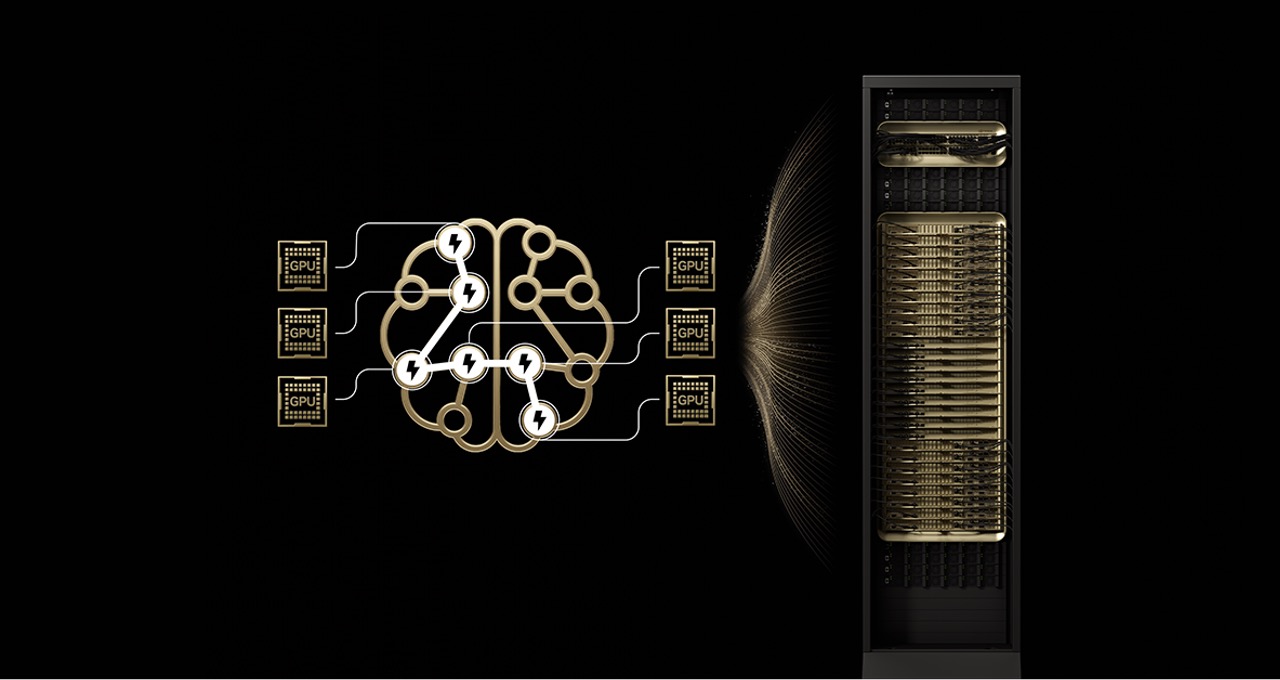

Despite the advantages of the MoE architecture, scaling these models in production environments has proven difficult. The GB200 NVL72 addresses this through extreme codesign of hardware and software, making MoE models practical to scale. With **72 NVIDIA Blackwell GPUs** working in unison, the system delivers **1.4 exaflops of AI performance** and **30TB of fast shared memory**, resolving common bottlenecks associated with MoE deployment.

Distributing the experts across a larger number of GPUs lowers the memory pressure on each one, because each GPU holds fewer experts. The NVLink interconnect fabric provides the fast GPU-to-GPU communication that experts need in order to exchange information quickly. As a result, the GB200 NVL72 allows models to use more experts, enhancing both performance and efficiency.
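The effect of spreading experts over more GPUs can be shown with simple arithmetic. The sketch below is illustrative only: the expert count, the per-expert size, and the 8-GPU comparison point are hypothetical assumptions, and only the 72-GPU figure comes from the text above.

```python
import math

def expert_memory_per_gpu(num_experts, gb_per_expert, num_gpus):
    """Worst-case expert-weight memory (GB) on one GPU with an even split."""
    experts_on_gpu = math.ceil(num_experts / num_gpus)
    return experts_on_gpu * gb_per_expert

NUM_EXPERTS = 256    # hypothetical expert count, not taken from a real model
GB_PER_EXPERT = 2.0  # hypothetical size of one expert's weights

# Hypothetical 8-GPU domain versus the 72-GPU domain described above.
small_domain = expert_memory_per_gpu(NUM_EXPERTS, GB_PER_EXPERT, num_gpus=8)
large_domain = expert_memory_per_gpu(NUM_EXPERTS, GB_PER_EXPERT, num_gpus=72)

print(f"8 GPUs:  {small_domain:.0f} GB of expert weights per GPU")
print(f"72 GPUs: {large_domain:.0f} GB of expert weights per GPU")
```

Under these assumed numbers each GPU holds 32 experts in the smaller domain and at most 4 in the larger one, which frees memory for other uses such as larger batches or longer contexts.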

Comments from industry leaders underscore the significance of this technological advancement. **Guillaume Lample**, cofounder and chief scientist at **Mistral AI**, stated, “Our pioneering work with OSS mixture-of-experts architecture… ensures advanced intelligence is both accessible and sustainable for a broad range of applications.” Notably, **Mistral Large 3** has also realized a 10x performance gain on the GB200 NVL72, reinforcing the growing trend toward MoE models.

Beyond mere performance gains, the GB200 NVL72 is set to transform the economics of AI. NVIDIA’s latest advancements have fostered a 10x improvement in performance per watt, allowing for a corresponding increase in token revenue. This transformation is essential for data centers constrained by power and cost considerations, making MoE models not just a technical innovation but a strategic business advantage. Companies such as **DeepL** and **Fireworks AI** are already leveraging this architecture to push the boundaries of AI capabilities.
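The link between performance per watt and token revenue can be sketched as back-of-the-envelope arithmetic. Every number below (power budget, baseline efficiency, token price) is a hypothetical assumption, and the model assumes a fixed price and full utilization; only the 10x factor comes from the text above.

```python
def tokens_per_second(power_budget_watts, tokens_per_joule):
    """Throughput a power-limited site can sustain (watts = joules per second)."""
    return power_budget_watts * tokens_per_joule

def revenue_per_hour(tps, price_per_million_tokens):
    """Hourly revenue at a fixed price, assuming every generated token is sold."""
    return tps * 3600 / 1_000_000 * price_per_million_tokens

POWER_BUDGET_WATTS = 1_000_000   # hypothetical 1 MW data center budget
BASELINE_TOKENS_PER_JOULE = 0.5  # hypothetical baseline efficiency
PRICE_PER_MILLION_TOKENS = 2.0   # hypothetical price in USD
PERF_PER_WATT_GAIN = 10          # the 10x improvement cited above

baseline_tps = tokens_per_second(POWER_BUDGET_WATTS, BASELINE_TOKENS_PER_JOULE)
improved_tps = tokens_per_second(
    POWER_BUDGET_WATTS, BASELINE_TOKENS_PER_JOULE * PERF_PER_WATT_GAIN
)

baseline_revenue = revenue_per_hour(baseline_tps, PRICE_PER_MILLION_TOKENS)
improved_revenue = revenue_per_hour(improved_tps, PRICE_PER_MILLION_TOKENS)

print(f"revenue ratio at the same power budget: {improved_revenue / baseline_revenue:.1f}x")
```

With the power budget held constant, throughput and therefore revenue scale directly with tokens per joule, which is why the metric matters for power-constrained data centers.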

As the AI landscape continues to evolve, the future appears increasingly reliant on advanced architectures like MoE. The advent of multimodal AI models, which incorporate various specialized components akin to MoE, signifies a shift towards shared pools of experts that can address diverse applications efficiently. This trend points to a growing recognition of MoE as a foundational building block for scalable, intelligent AI systems.

The NVIDIA GB200 NVL72 is not just a platform for MoE but a pivotal development in the ongoing journey towards more efficient, powerful AI architectures. As companies integrate these systems into their operational frameworks, they stand to redefine the parameters of AI performance and efficiency, paving the way for a new era in intelligent computing.
