UNIVERSITY PARK, Pa. — A team of researchers at Penn State has developed NaviSense, an AI-powered smartphone application designed to help visually impaired people navigate their surroundings. The application, which was shaped by recommendations from the visually impaired community, aims to make daily tasks easier by identifying objects based on spoken prompts. The team presented NaviSense at the Association for Computing Machinery’s SIGACCESS ASSETS ’25 conference in Denver, where it received the Best Audience Choice Poster Award.

In recent years, various systems and applications have emerged to support visually impaired users, but significant gaps remain. Many existing solutions rely on human assistance, which can be inefficient and raise privacy concerns. According to Vijaykrishnan Narayanan, the team lead and A. Robert Noll Chair Professor of Electrical Engineering at Penn State, previously available automated services faced limitations, as they required preloaded models of objects for recognition. “This is highly inefficient and gives users much less flexibility when using these tools,” Narayanan noted.

To overcome these challenges, the researchers integrated large language models (LLMs) and vision-language models (VLMs) into NaviSense. These models allow the application to learn about its environment and recognize objects in real time without the need for preloaded models. Narayanan described this as a major milestone: NaviSense can respond directly to voice commands, which improves both the user experience and accessibility.

Before launching the development of NaviSense, the team conducted interviews with visually impaired individuals to ensure the app’s features would effectively address their specific needs. Ajay Narayanan Sridhar, a doctoral student in computer engineering and lead investigator, commented on the importance of these interviews in shaping the app’s functionality. “These interviews gave us a good sense of the actual challenges visually impaired people face,” Sridhar said.

Once a user requests an object, NaviSense searches the environment, filters out unrelated items and asks follow-up questions if it does not understand the request. This conversational capability distinguishes NaviSense from existing solutions and gives users more convenience and flexibility. The application also tracks hand movements in real time, providing feedback on where the requested object is relative to where the user is reaching, a feature that survey participants frequently requested.
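The article does not describe how NaviSense computes its guidance, so the following is only a minimal sketch of the general idea of turning a hand position and an object position into a spoken cue. The `Point` type, the `guidance_cue` function, the normalized image coordinates and the tolerance value are all hypothetical, and the sketch assumes a rear-facing camera so that left in the image is left for the user.

```python
from dataclasses import dataclass


@dataclass
class Point:
    """A position in normalized image coordinates (hypothetical convention)."""
    x: float  # 0.0 is the left edge of the frame, 1.0 is the right edge
    y: float  # 0.0 is the top edge of the frame, 1.0 is the bottom edge


def guidance_cue(hand: Point, target: Point, tolerance: float = 0.05) -> str:
    """Return a directional cue telling the user which way to move the hand.

    This is an illustrative assumption, not NaviSense's actual method.
    """
    dx = target.x - hand.x  # positive: the object is to the right of the hand
    dy = target.y - hand.y  # positive: the object is below the hand

    cues = []
    if dx > tolerance:
        cues.append("right")
    elif dx < -tolerance:
        cues.append("left")
    if dy > tolerance:
        cues.append("down")
    elif dy < -tolerance:
        cues.append("up")

    # Inside the tolerance on both axes, the hand is treated as on the object.
    return " and ".join(cues) if cues else "on target"


if __name__ == "__main__":
    print(guidance_cue(Point(0.5, 0.5), Point(0.2, 0.2)))
```

In a real application the two positions would come from hand tracking and object detection on each camera frame, and the cue would be spoken aloud rather than printed.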

The team conducted tests with 12 participants in a controlled environment, comparing NaviSense against two commercial alternatives. Results indicated that NaviSense significantly reduced the time users spent locating objects while demonstrating higher accuracy in identification. Feedback gathered post-experiment highlighted users’ satisfaction with the app’s functionality, with one participant praising its ability to provide directional cues, such as “left or right, up or down,” leading to a successful identification.

While the current version of NaviSense shows promise, Narayanan acknowledged that improvements are necessary before commercialization. The team is working to reduce the application’s power consumption, which would limit battery drain on smartphones, and to make the LLMs and VLMs more efficient. “This technology is quite close to commercial release, and we’re working to make it even more accessible,” he stated, emphasizing the team’s commitment to improving the app based on user feedback.

The team comprises several experts from Penn State, including Mehrdad Mahdavi, Hartz Family Associate Professor of Computer Science and Engineering, and Fuli Qiao, a doctoral student in computer science. The research also includes contributions from Nelson Daniel Troncoso Aldas, an independent researcher, and Laurent Itti and Yanpei Shi, associated with the University of Southern California.

This initiative received support from the U.S. National Science Foundation, which plays a crucial role in funding innovative research aimed at addressing pressing challenges in society. As federal funding faces potential cuts, the continuation of such projects becomes increasingly vital for advancing technologies that enhance quality of life.

As researchers at Penn State work to refine NaviSense, they aim to narrow the access gap for visually impaired individuals, so that technology can integrate more fully into daily life and provide essential support.

See also Nvidia Accused of Misappropriating Avian AI Software Worth $1.5 Billion in Lawsuit

Nvidia Accused of Misappropriating Avian AI Software Worth $1.5 Billion in Lawsuit Trump Launches “Genesis Mission” to Accelerate Scientific Discovery with AI-Powered Supercomputing

Trump Launches “Genesis Mission” to Accelerate Scientific Discovery with AI-Powered Supercomputing Small Businesses Embrace Answer Engine Optimization to Thrive in AI Search Era

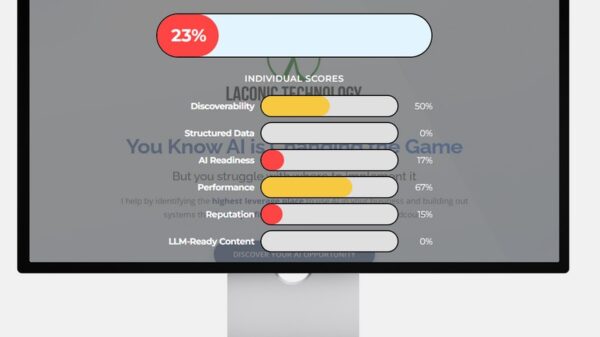

Small Businesses Embrace Answer Engine Optimization to Thrive in AI Search Era Trump Launches Genesis Mission Executive Order to Accelerate AI-Driven Scientific Breakthroughs

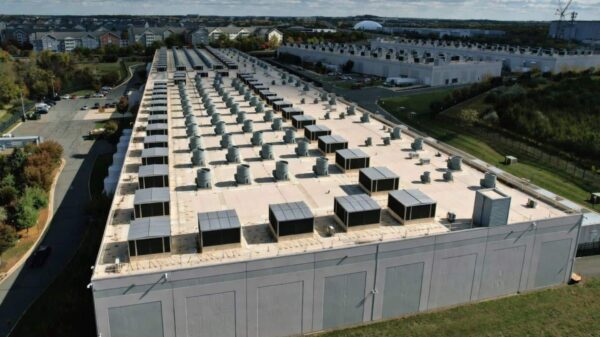

Trump Launches Genesis Mission Executive Order to Accelerate AI-Driven Scientific Breakthroughs Carney Advocates Carbon-Neutral AI Data Centers, Champions EU Carbon Pricing at G20 Summit

Carney Advocates Carbon-Neutral AI Data Centers, Champions EU Carbon Pricing at G20 Summit