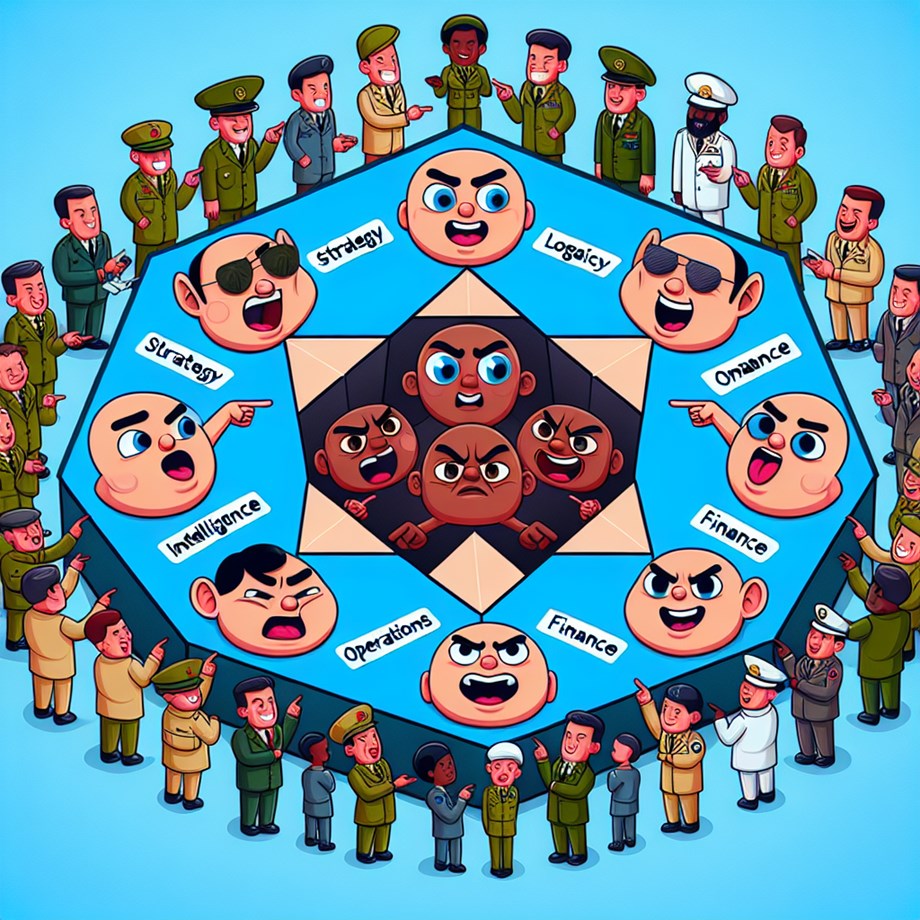

The Pentagon is reportedly considering ending its partnership with Anthropic, an artificial intelligence company, amid disputes over how AI technology may be used in military applications. According to a report from Axios, the Pentagon views restrictions Anthropic has placed on its AI models as significant obstacles to their intended military use.

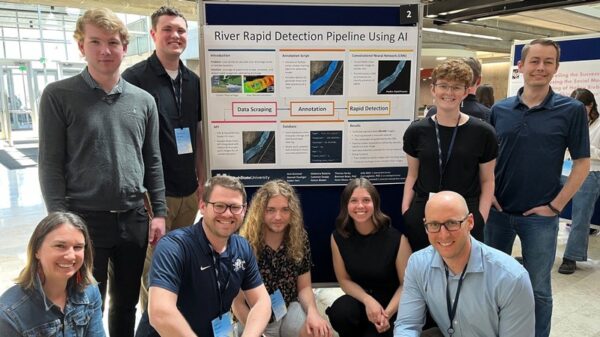

Four prominent AI companies (Anthropic, OpenAI, Google, and xAI) are facing mounting pressure from the Pentagon to allow their models to be used for “all lawful purposes.” Areas of key military interest include weapons development and intelligence gathering. Despite this pressure, Anthropic has held firm to the ethical guidelines that govern the use of its technology.

Anthropic’s AI model, Claude, has been used in notable operations, including the capture of former Venezuelan President Nicolás Maduro. However, the company says its discussions with the U.S. government have focused primarily on usage policies, particularly its limits on fully autonomous weapons and mass surveillance.

This situation unfolds as the U.S. military increasingly integrates artificial intelligence into its operations and confronts the ethical questions that accompany such advances. The Department of Defense has identified AI as critical to national security and to maintaining a competitive advantage, but it faces challenges in reconciling innovative technology with ethical considerations.

Anthropic’s insistence on these ethical constraints highlights a growing divide between tech companies and military interests. While the Pentagon seeks to use AI to enhance its capabilities, companies like Anthropic are prioritizing ethical frameworks designed to prevent misuse. This tension reflects a broader debate about the role of AI in society, particularly in defense and surveillance.

In trying to balance innovation with responsibility, Anthropic has emerged as a leading advocate for ethical AI practices. The company’s leadership has warned that unrestricted military use could lead to abuses of the technology that compromise human rights and civil liberties.

The ongoing deliberations mark a critical juncture for technology and defense. As the Pentagon seeks to harness AI capabilities, the response from companies like Anthropic may shape the future of military technology. The outcome could have far-reaching implications, not only for national security but also for the ethical governance of artificial intelligence.

Going forward, the relationship between the Pentagon and AI companies will likely face close scrutiny. As both sides work through these dynamics, the need for a collaborative framework that respects ethical considerations while addressing national security needs will become increasingly clear. Developments in this area may set precedents for how AI technologies are deployed across other sectors.
