Samsung Electronics is set to begin mass production of its sixth-generation high-bandwidth memory, HBM4, as early as next week, according to industry sources. The new memory chips are designed for next-generation graphics processing units, particularly those Nvidia is developing for advanced artificial intelligence systems.

According to South Korea’s Yonhap News Agency, Samsung has timed its production schedule to match Nvidia’s plans to unveil its upcoming AI accelerator, codenamed Vera Rubin. Shipments of the HBM4 chips are expected to begin shortly after the Lunar New Year holiday. Samsung has already passed Nvidia’s quality certification process and secured purchase orders, indicating that the chips are ready for integration into high-performance computing platforms.

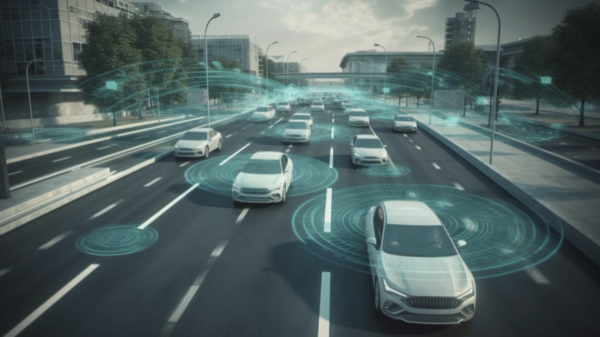

HBM4 is a considerable step up from the fifth-generation HBM3E chips that are the current industry standard, offering the higher bandwidth and better efficiency needed to train and run large generative AI models. As demand for AI computing power grows, HBM4 is expected to become a foundational technology in data centers and advanced workstations, which is why its inclusion in Nvidia’s Vera Rubin platform carries strategic weight.

Samsung has reportedly increased the volume of HBM4 samples it supplies for customer-side module testing, a sign that it is preparing for full-scale manufacturing. The move strengthens Samsung’s competitive position in the global HBM market and sharpens its rivalry with other suppliers such as SK Hynix. The ability to deliver leading-edge memory is becoming more important as the semiconductor industry pivots toward AI-driven hardware development.

The introduction of HBM4 comes at a crucial time for the tech landscape, where advancements in memory technology are essential for meeting the escalating demands of AI applications. As both consumer and enterprise sectors increasingly rely on AI, the performance and efficiency of memory components like HBM4 will play a significant role in shaping future computing capabilities.

Samsung’s alignment with Nvidia points to a concerted effort to capitalize on the fast-growing AI market. The partnership strengthens Nvidia’s hardware offerings and reinforces Samsung’s position as a leader in memory technology. As the semiconductor industry continues to evolve, the effects of these advances may extend well beyond the initial deployments and reach other sectors that rely on high-performance computing.
