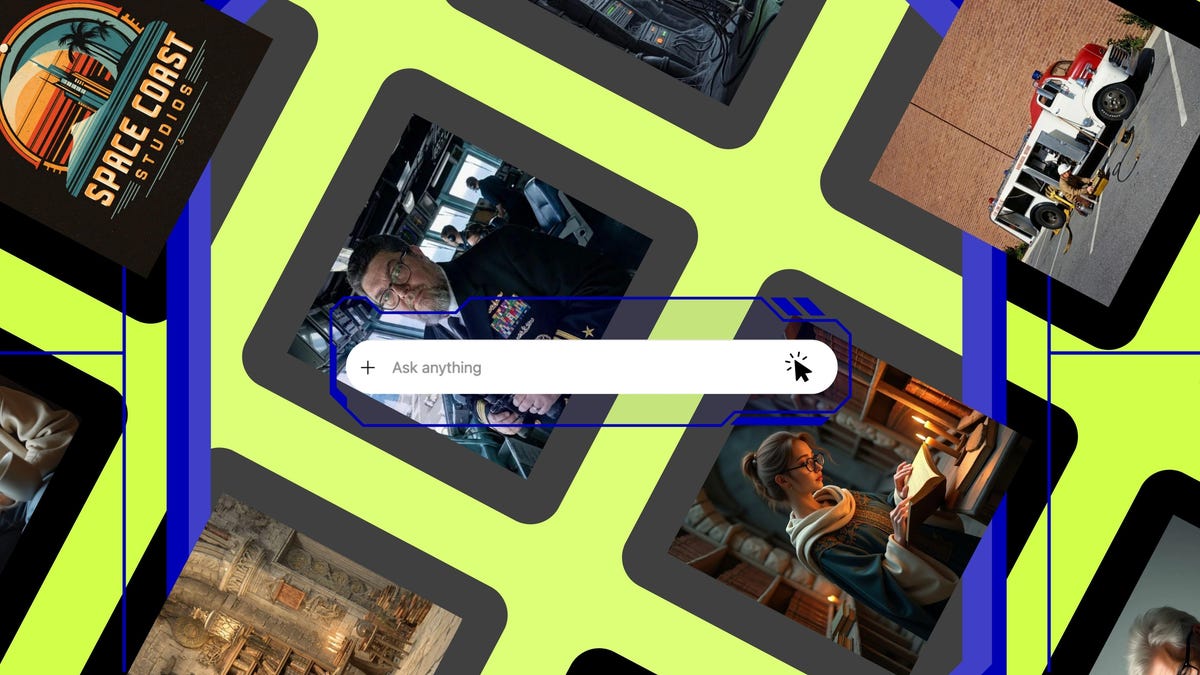

In a recent evaluation of AI image generators, Google’s Nano Banana Pro emerged as a clear frontrunner, achieving a remarkable score of 93%. Conducted by David Gewirtz and Elyse Betters Picaro for ZDNET, the review assessed six popular tools, with ChatGPT following in a distant second place at 74%, while the others ranged between 43% and 57%. The tests involved generating over 50 images based on nine prompts, aimed at determining which AI tools are worth subscription fees for serious users.

The evaluation revealed that Nano Banana Pro, part of Google’s Gemini 3 suite, stood out in nearly every category, scoring exceptionally well in photo recontextualization, original image generation, and the ability to incorporate text into images. The prompts ranged from logo creation to pop culture references, and the results showed a significant disparity in performance among the tools tested.

Gewirtz noted that while all six contenders performed adequately in creating logos, their abilities faltered in more complex tasks. For instance, Nano Banana Pro successfully transformed an image of Gewirtz into a U.S. Navy admiral, maintaining facial features and context, whereas many competitors struggled with both accuracy and clarity. The tool’s capacity to restore and enhance old photographs was also highlighted, although it did encounter minor issues, such as misinterpreting text details.

In comparison, ChatGPT displayed strengths in natural language processing and iteration but fell short in image generation quality. The latest iteration of ChatGPT’s image generator was noted to have improved over its predecessors but still lacked the finesse exhibited by Nano Banana Pro. For example, in some cases ChatGPT rendered clothing details inaccurately or failed to show the expected level of creativity in its outputs.

Midjourney, Adobe Firefly, Leonardo AI, and Canva were also evaluated, each showing distinct strengths and weaknesses. Midjourney, known for its cinematic imagery, scored 57% but struggled with tasks involving text and with clarity in recontextualization. Adobe Firefly was praised for its focus on commercially safe images but was criticized for its cumbersome usability and copyright restrictions, achieving a score of 54%. Leonardo AI, specializing in fantasy art, was rated slightly lower at 52%, while Canva, primarily a marketing tool, rounded out the evaluation with a score of 43% due to its inconsistent outputs and difficulties with image cleanup.

The review’s methodology involved a scoring system based on a series of specific prompts, allowing for a comprehensive comparison of each tool’s capabilities. The results indicate that while various AI image generators are available, only a few are equipped to meet the nuanced demands of users seeking quality image generation.

As AI continues to evolve, the findings from this assessment underscore the importance of thorough testing for users considering subscription-based AI tools. With generative AI developing rapidly, enthusiasts and professionals alike can use evaluations like this one to choose the most effective tools for their needs.
