The U.S. stock market remains heavily buoyed by investment in artificial intelligence (AI), even as the valuations of key players such as Nvidia, Oracle, and CoreWeave have declined from their mid-2025 highs. Currently, 75% of the returns in the S&P 500 index can be attributed to just 41 AI stocks, with the so-called “Magnificent Seven” (Nvidia, Microsoft, Amazon, Google, Meta, Apple, and Tesla) accounting for 37% of the index’s overall performance.

Despite the impressive returns, there are growing concerns about whether this reliance on AI is sustainable, particularly because it centers on one type of AI: Large Language Models (LLMs). Executives in the field, including Jensen Huang, CEO of Nvidia, downplay fears of a potential AI bubble, asserting that “we are long, long away from that.”

However, skepticism persists among analysts and academics. Some, like Gary Marcus, an AI scientist and emeritus professor at New York University, warn that unchecked optimism surrounding AI’s profitability is beginning to unsettle investors. With a significant portion of U.S. economic growth this year driven by AI investments, Marcus cautions that the fallout from a potential market correction could be severe, stating, “the ‘blast radius’ could be much greater.”

Warning signs include projections that tech giants such as Microsoft, Amazon, Google, Meta, and Oracle will spend around $1 trillion on AI by 2026, while OpenAI, the creator of ChatGPT, has committed to a staggering $1.4 trillion in expenditures over the next three years. Yet the returns on these investments remain modest: OpenAI’s revenue for 2025 is expected to exceed $20 billion, a figure far below the spending required to sustain its ambitious commitments.

The current AI boom, or bubble, is largely predicated on the technological advances of LLMs, which saw a breakthrough with the launch of GPT-4. This model marked a leap in scale and performance, requiring 3,000 to 10,000 times more computational power than the earlier GPT-2. Model size grew from 1.5 billion parameters for GPT-2 to a reported 1.8 trillion for GPT-4, and the training data came to encompass a vast swath of global text, image, and video data.

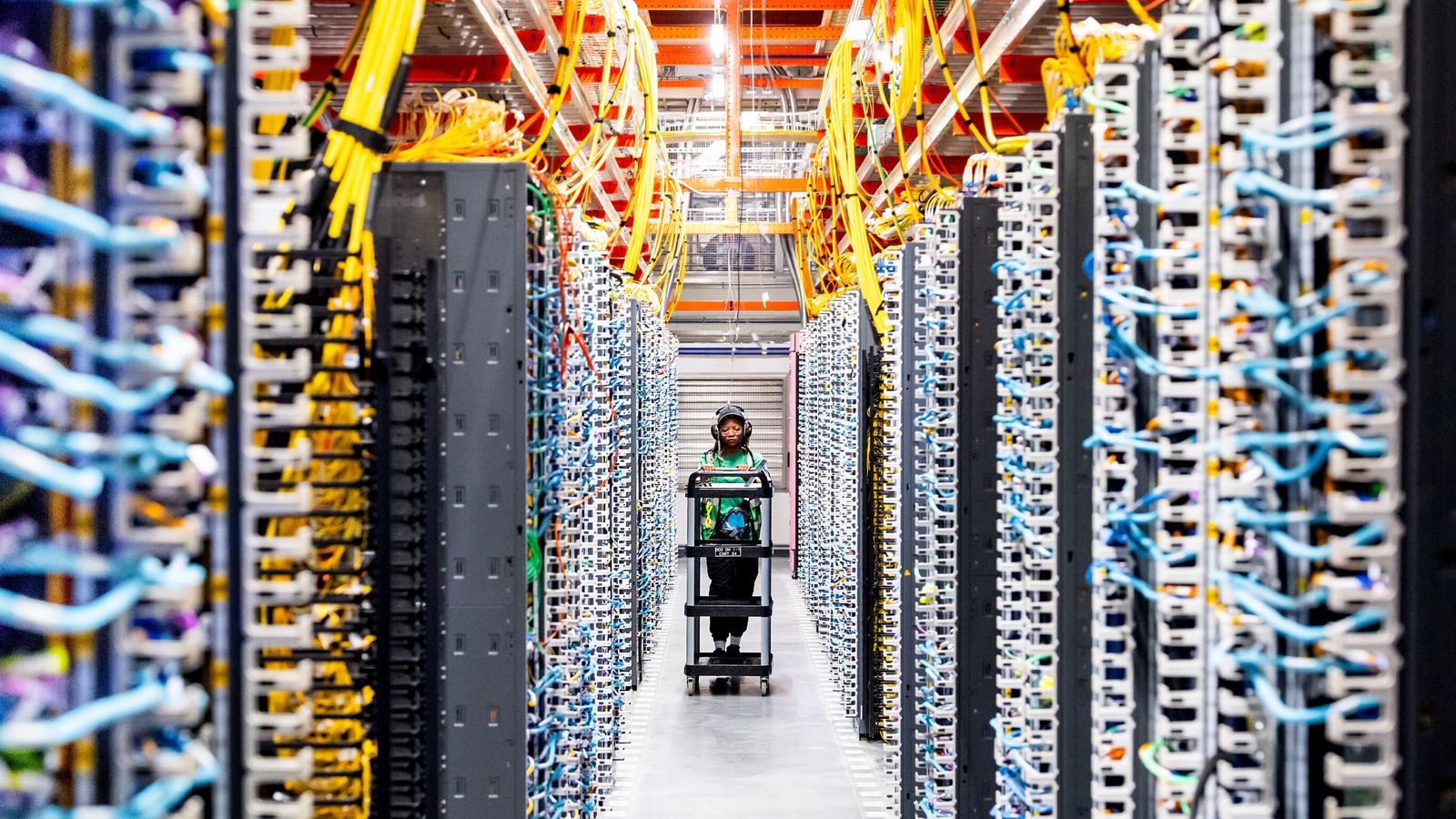

The demand for GPUs to train AI systems has surged, propelling Nvidia’s stock price. In response, significant investments are being made in constructing new mega-data centers, including the Stargate complex in Texas, which is expected to cover an area similar to Manhattan’s Central Park by mid-2026. Meanwhile, Meta’s $27 billion Hyperion data center in Louisiana is anticipated to consume twice as much energy as New Orleans.

This rapid escalation in power requirements has placed considerable strain on the U.S. power grid, leading some data centers to experience delays of years for grid connections. Optimists argue that companies like Microsoft, Meta, and Google, with substantial financial resources, may sidestep these challenges by building their own power facilities.

Yet doubts linger about the long-term viability of these investments. Unlike traditional infrastructure, which typically benefits from predictable depreciation schedules, AI data centers are expected to require constant upgrades. Nvidia’s latest chips are projected to last three to six years, but there are concerns that competition will force even more frequent replacements.

Fund manager Michael Burry, known for predicting the subprime mortgage crisis, has voiced his apprehension by betting against AI stocks. He argues that if AI chips must be replaced every three years, the resulting depreciation could wipe $780 billion off the value of major tech companies, or as much as $1.6 trillion if the replacement cycle accelerates to every two years. Losses on that scale would widen the already immense gap between spending and anticipated revenue.
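
The replacement-cycle argument comes down to simple depreciation arithmetic, which the short Python sketch below tries to make concrete. It assumes straight-line depreciation with no salvage value, and the $300 billion chip spend is a purely hypothetical round number chosen for illustration. It is not a figure from Burry or from any company, and the sketch does not attempt to reproduce his $780 billion or $1.6 trillion estimates.

```python
def annual_depreciation(cost: float, useful_life_years: float) -> float:
    """Straight-line depreciation: an equal charge each year, no salvage value."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return cost / useful_life_years


# Hypothetical chip spend in dollars, used only to illustrate the mechanism.
HYPOTHETICAL_CHIP_SPEND = 300e9

# Compare the yearly charge under the useful lives discussed in the article.
for life in (6, 3, 2):
    charge = annual_depreciation(HYPOTHETICAL_CHIP_SPEND, life)
    print(f"{life}-year life: ${charge / 1e9:.0f}B written off per year")
```

Under these assumptions, halving the useful life doubles the amount written off each year, which is why the length of the replacement cycle matters so much to the figures being debated.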

As AI technology advances, adoption is visibly increasing, with indications that it could revolutionize sectors such as software development and the creative industries. OpenAI claims an impressive 800 million weekly active users across its products, but only 5% are paying customers. Business adoption data is less encouraging, showing that only 12-14% of larger companies were utilizing AI in mid-2025, down from earlier estimates.
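
To put the user figures in absolute terms, the following Python sketch applies the 5% paying share to the 800 million weekly active users claimed by OpenAI. Both inputs are the numbers quoted above. The function name is an illustrative choice, and the result is only as reliable as those two claims.

```python
def paying_users(weekly_active: int, paying_percent: int) -> int:
    """Number of paying users, given a whole-number percentage share."""
    return weekly_active * paying_percent // 100


weekly_active = 800_000_000  # weekly active users claimed by OpenAI
paying_percent = 5           # share of users who pay, as quoted above

payers = paying_users(weekly_active, paying_percent)
free_users = weekly_active - payers
print(f"{payers:,} paying users, {free_users:,} free users")
```

On these figures, roughly 40 million users pay while 760 million do not.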

While Large Language Models continue to improve on technical benchmarks, their effectiveness in practical applications is still under scrutiny. Experts indicate that while LLMs excel at statistical prediction, they lack true understanding and long-term memory, which leads them to repeat errors. As Ilya Sutskever, co-founder of OpenAI, noted, the industry may be returning to a research phase despite significant financial investments.

The pressing question remains: how long will shareholders tolerate these vast expenditures without significant returns? As skepticism about the potential of current AI models increases, the market may soon confront the reality of its heavy bets on AI.
