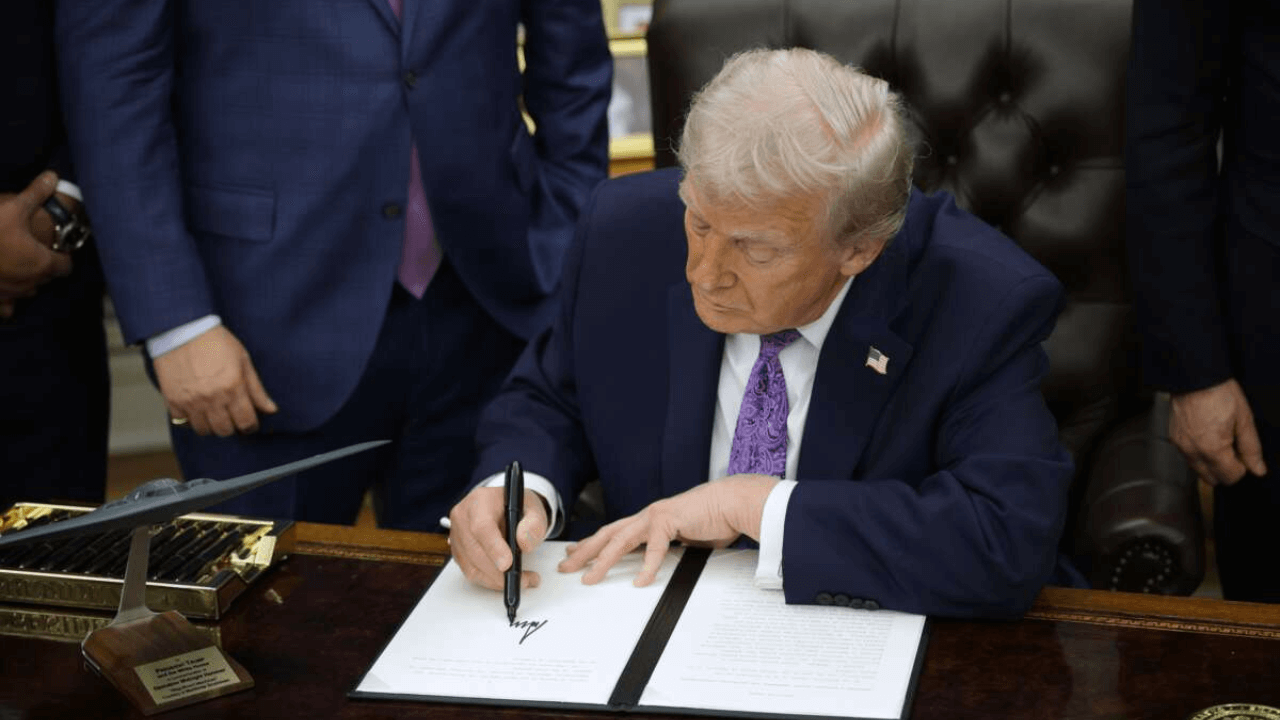

NEW YORK, UNITED STATES — On December 11, President Trump signed an executive order aimed at establishing a unified federal framework for artificial intelligence (AI), a move that has been both praised by technology firms and condemned by child safety and consumer advocacy groups. Speaking from the Oval Office, Trump emphasized the necessity of a single regulatory approach to maintain the United States’ leadership in the global AI landscape. “It’s got to be one source,” he stated. “You can’t go to 50 different sources.”

The executive order seeks to override the disparate state-level regulations that have emerged in response to the rapid expansion of AI technologies. Trump has increasingly positioned himself as a supporter of the AI sector, previously signing measures to limit regulations, enhance access to federal data for businesses, and facilitate infrastructure development. In the same week, he also lifted restrictions on the export of AI chips and commended the executives of major technology companies.

However, this federal initiative has ignited bipartisan concerns. Legal experts warn that the order may face challenges in court, with critics asserting that only Congress holds the authority to preempt state laws. Wes Hodges, acting director of the Center for Technology and the Human Person at the Heritage Foundation, remarked, “Doing so before establishing commensurate national protections is a carve-out for Big Tech.”

As the federal government moves toward a centralized regulatory framework, various U.S. states have been proactive in filling the regulatory gaps. States like California and Colorado have implemented laws requiring significant AI models, such as ChatGPT and Google Gemini, to undergo safety evaluations and disclose their findings. Additionally, South Dakota has enacted a ban on deepfakes in political advertisements, while Utah, Illinois, and Nevada have introduced regulations concerning chatbots and mental health applications.

Critics of the federal order express concern that it could weaken these state-level protections. “Blocking state laws regulating A.I. is an unacceptable nightmare for parents and anyone who cares about protecting children online. States have been the only effective line of defense against A.I. harms,” stated Sarah Gardner, CEO of the Heat Initiative.

Technology companies have long advocated for reduced regulatory barriers, citing the “50-state patchwork” as a hindrance to innovation. Marc Andreessen of Andreessen Horowitz called fragmented laws “a startup killer,” a remark that underscores the tension between rapid technological growth and necessary consumer protections.

Implications for Global Outsourcing Markets

The new executive order also holds significant implications for the global outsourcing landscape. A unified federal framework could simplify compliance for U.S. companies that outsource AI development or data services abroad, reducing regulatory uncertainty. By contrast, state regulations have compelled service providers to implement multiple safety measures, particularly for child protection and data privacy, which increased costs but also bolstered consumer trust.

The shift toward federal oversight could expedite AI-driven outsourcing initiatives, prompting a reevaluation of risk management strategies among international partners. With the push for a singular regulatory approach, the balance between fostering innovation and ensuring consumer safety will be crucial in the years ahead.
