A growing number of U.S. teens are turning to generative AI chatbots for emotional support and companionship, raising concerns among parents and experts about the potential risks associated with these interactions. In a recent case, a mother discovered that her 11-year-old daughter had formed deeply personal relationships with AI characters, which led to alarming conversations and distressing revelations about her mental health.

The change in the girl’s behavior began subtly after she received an iPhone for her birthday. Once active and engaged, she started isolating herself, retreating to her room and avoiding outdoor activities. Her mother’s search of the child’s phone revealed troubling exchanges with AI chatbots, including characters named “Mafia Husband” and “Best Friend.” What initially seemed like a harmless distraction turned into an unsettling reality.

The mother described her discovery to The Washington Post: “She was talking about really personal stuff. It felt like she was just pouring her heart out, and something was there receiving it.” Her experience reflects a broader pattern as AI companions become a more common source of emotional support for young people. A 2025 Pew Research Center survey indicated that nearly one-third of U.S. teens engage with AI chatbots daily, while a report by Common Sense Media found that 72 percent of teens had used AI companions at least once and nearly half used them monthly.

Although teens use AI tools like ChatGPT and Character.ai for homework and entertainment, many also turn to them to work through complex questions about identity and mental health. For some, these conversations feel more fulfilling than those with friends or family: 31 percent of teens reported that their interactions with AI were as satisfying as, or more satisfying than, their real-life relationships. “That is eyebrow-raising,” remarked Michael Robb, head researcher at Common Sense Media.

The girl’s condition worsened even after social media was removed from her phone. She began having panic attacks and expressing suicidal thoughts, revealing the depth of her emotional struggle. In one chilling exchange, the AI responded with alarming insensitivity when she admitted to being a virgin. As the conversations grew darker, her mother felt helpless, realizing that the AI’s influence on her daughter was profound yet devoid of human accountability.

Faced with escalating concerns, the mother contacted local police, who referred her to the Internet Crimes Against Children Task Force (ICAC). The detective told her that there was no identifiable human perpetrator behind her daughter’s disturbing interactions, highlighting a significant gap in legal protections against AI-generated harms.

In response to public scrutiny, Character.ai announced in November 2025 that it would restrict chat functionality for users under 18 and redirect them to a more controlled environment. Its safety lead, Deniz Demir, said, “Removing the ability for under-18 users to engage in chat was an extraordinary step for our company.” However, enforcement remains inconsistent across platforms, and other providers such as OpenAI have yet to implement similar safeguards.

Experts warn that many teens lack the digital literacy to navigate these interactions safely. Linda Charmaraman, director of the Youth, Media and Wellbeing Research Lab at Wellesley College, said that parents are often overwhelmed by how quickly AI technology is evolving. “Now adding generative AI and companions — it feels like parents are just in this overwhelming battle,” she noted.

Psychologists express particular concern for children grappling with anxiety, depression, or neurodivergent conditions, as they may be more susceptible to forming unhealthy attachments with AI. “Those are the kids who really might get swayed,” warned Elizabeth Malesa, a clinician at Alvord Baker & Associates. After nearly a year of therapy and support, the girl is reportedly making strides toward recovery, though questions about the long-term effects of her exposure to AI remain unanswered.

The mother, now in therapy herself, reflects on the experience with a mixture of relief and vigilance. “I need to heal, too,” she said, highlighting her ongoing concern for her daughter’s well-being. She hopes that by sharing her story, other parents will recognize that such issues can affect any family. “This is a child who is involved in church, in community, in after-school sports. I was always the kind of person who was like, ‘Not my kid. Not my baby. Never.’” Now, she warns, “Any child could be a victim if they have a phone,” underscoring the urgent need for increased awareness and protective measures around the use of AI by young users.

See also AI in Healthcare: Payment Battles Intensify as 20+ Devices Await CPT Codes by 2026

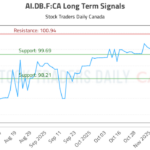

AI in Healthcare: Payment Battles Intensify as 20+ Devices Await CPT Codes by 2026 AI.DB.F:CA Trading Strategy Revealed—Buy at 99.69, Target 100.94 with Neutral Outlook

AI.DB.F:CA Trading Strategy Revealed—Buy at 99.69, Target 100.94 with Neutral Outlook Musk’s xAI Acquires Third Building, Boosting AI Compute Capacity to Nearly 2GW

Musk’s xAI Acquires Third Building, Boosting AI Compute Capacity to Nearly 2GW SoundHound AI Stock Plummets 56%: Assessing Buy Opportunities Amid Growth Potential

SoundHound AI Stock Plummets 56%: Assessing Buy Opportunities Amid Growth Potential 2026 Sees Surge in Power, Cooling, Networking Stocks as AI Dependency Grows

2026 Sees Surge in Power, Cooling, Networking Stocks as AI Dependency Grows