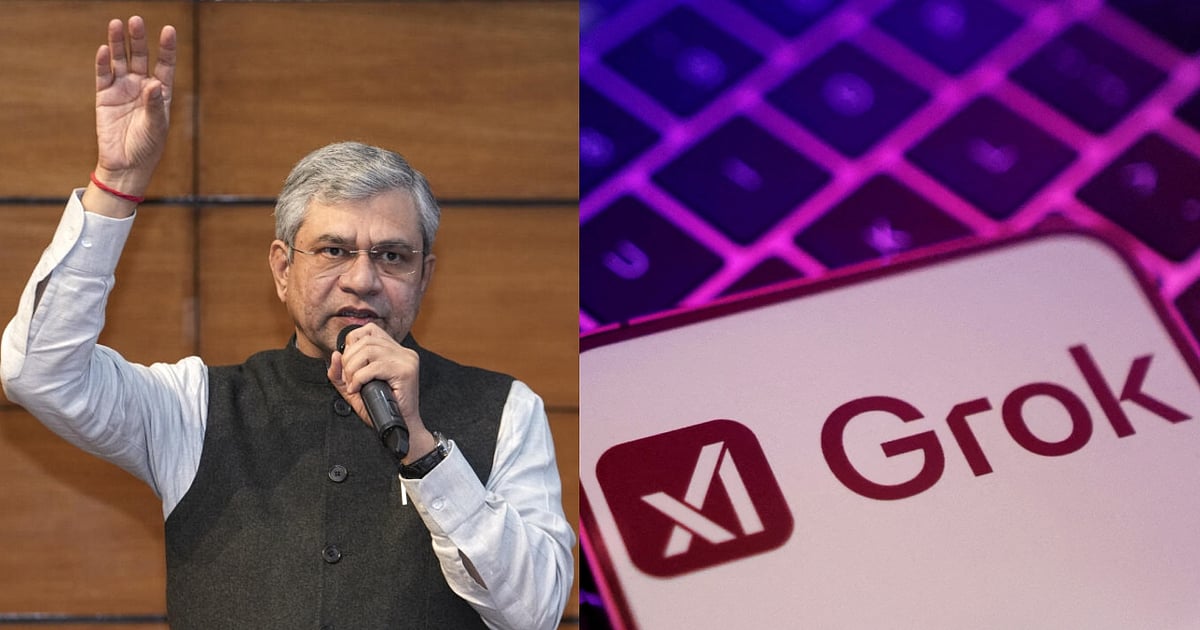

The Ministry of Electronics and Information Technology (MeitY) has officially communicated with the Chief Compliance Officer of X Corp. (formerly Twitter) regarding the company’s alleged failure to comply with statutory due diligence obligations mandated by the Information Technology Act, 2000. This letter, dated January 2, 2026, highlights concerns surrounding the platform’s adherence to regulations that govern the responsibilities of intermediaries operating in India.

In its correspondence, MeitY cited specific instances in which X Corp. allegedly fell short of its obligations under the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. These rules set out due-diligence requirements for intermediaries, including obligations to act against unlawful content on their platforms so that they do not serve as conduits for misinformation, hate speech, or other illegal activity. The ministry's move signals heightened scrutiny of social media platforms amid ongoing debates about accountability in the digital landscape.

X Corp. has faced increasing pressure from regulators globally to reinforce its compliance frameworks, especially as governments seek to combat the spread of harmful content online. The Indian government’s action reflects broader concerns about the influence of social media on public discourse and its potential to incite violence or perpetuate false narratives. By holding X Corp. accountable, MeitY aims to underscore the importance of regulatory frameworks in safeguarding digital communication and maintaining public order.

This intervention by the Indian government comes at a time when X Corp. is navigating a complex regulatory environment worldwide. The company has faced similar challenges in other jurisdictions, where authorities have demanded stricter controls over user-generated content. As social media platforms grapple with balancing free expression and safety, the expectations from regulatory bodies are becoming increasingly stringent.

The letter from MeitY is not merely a procedural formality; it represents a significant step in the Indian government's efforts to strengthen its regulatory framework for digital platforms. The ministry's insistence on compliance reflects a broader shift toward greater accountability for tech companies, particularly in countries where misinformation and digital harassment have become pressing social issues.

As the situation unfolds, X Corp. will need to respond to the concerns raised by the Indian authorities. The company has previously indicated its commitment to comply with local laws and has taken steps to enhance its content moderation practices. However, the effectiveness of these measures will be scrutinized closely by both regulators and the public.

Looking ahead, this government intervention could have lasting effects on X Corp.'s operations in India. India remains one of the largest markets for social media platforms, so maintaining a compliant and cooperative relationship with local authorities is crucial to the company's growth strategy. The outcome of this engagement may also set a precedent for how other countries approach social media regulation, particularly amid growing global concern about the impact of digital platforms on society.

In conclusion, the letter from the Ministry of Electronics and Information Technology serves as a critical reminder of the evolving responsibilities that tech companies must navigate in their operations. As debates surrounding digital ethics and accountability continue to gain momentum, X Corp. and similar platforms will need to adapt swiftly to meet the expectations of regulators and the demands of their user base.
