Researchers have unveiled TurboDiffusion, a technique for accelerating AI video generation that cuts generation times from minutes to seconds. According to a recent paper that has drawn attention in the tech community, TurboDiffusion can speed up video generation by up to 200 times without compromising quality. The speedup comes from combining attention optimizations, knowledge distillation, and quantization, making high-fidelity video synthesis faster and more accessible.

TurboDiffusion works by optimizing diffusion models, which form the backbone of many AI video generators. Conventional diffusion models need many computational passes to denoise and refine video frames, which takes considerable time even on advanced hardware. TurboDiffusion introduces sparsity in the attention layers and distills knowledge from larger models into smaller, more efficient ones, which streamlines the overall process. Early demonstrations indicate that TurboDiffusion can produce coherent five-second video clips in under five seconds on standard GPUs, a notable development for industries that depend on rapid content production.
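To make the idea of sparsity in attention more concrete, here is a minimal Python sketch. It is not TurboDiffusion's actual implementation, which the article does not detail. It assumes one simple form of sparsity, a top-k rule in which each query attends only to its k highest-scoring keys. The function name `topk_sparse_attention` and the toy inputs are hypothetical and chosen purely for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(queries, keys, values, k):
    """Scaled dot-product attention restricted to the k best keys per query.

    Illustrative assumption: sparsity is imposed with a plain top-k rule.
    Real systems may use very different sparsity patterns.
    """
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Score every key against this query.
        scores = [scale * sum(a * b for a, b in zip(q, key)) for key in keys]
        # Keep only the indices of the k highest scores.
        keep = sorted(range(len(keys)), key=lambda j: scores[j], reverse=True)[:k]
        weights = softmax([scores[j] for j in keep])
        # Weighted sum over the kept values only; the rest are skipped.
        out = [0.0] * len(values[0])
        for w, j in zip(weights, keep):
            for d, v in enumerate(values[j]):
                out[d] += w * v
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    queries = [[1.0, 0.0]]
    keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(topk_sparse_attention(queries, keys, values, k=2))
```

In this toy version the scores are still computed for every key, so the saving is only in the softmax and the weighted sum. A production kernel would also avoid computing most of the scores, which is where the larger gains would be expected.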

Industry experts are weighing the implications for content creation in sectors ranging from social media to film production. As AI tools become essential to creative workflows, speed has emerged as a critical factor. On X, users have shown enthusiasm for open-source implementations that rival proprietary systems such as Sora and Veo, with reported inference speeds up to eight times faster at high resolutions. This trend is part of a broader movement toward open-source advances that democratize access to cutting-edge technology.

TurboDiffusion builds on earlier work such as FastVideo from Hao AI Lab, which used sparse distillation to cut denoising time by a factor of 70. Hao AI Lab’s FastWan series demonstrates real-time generation of five-second videos on a single H200 GPU, further underscoring the practicality of these acceleration techniques. The progression reflects an industry shift toward efficiency, in which smarter architectures stand in for raw computational power. Compared with established work such as Google’s Lumiere model, which pioneered space-time diffusion for flexible video tasks, TurboDiffusion stands out for its focus on speed without sacrificing quality. Its ability to maintain visual fidelity at scale addresses a common dilemma in generative AI: the trade-off between speed and visual quality.

Understanding how diffusion models operate helps explain TurboDiffusion’s mechanics. These models begin with noise and iteratively refine it into structured visual output. TurboDiffusion speeds up this process by cutting redundant computation in the attention mechanisms, which model the relationships among elements within a video. In addition, quantization compresses the model so that it can run effectively on less powerful hardware, bringing professional-grade video AI within reach of consumer devices.
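As a rough illustration of how quantization compresses a model, the following Python sketch converts floating-point weights to 8-bit integers and back. It assumes symmetric per-tensor int8 quantization, a common textbook scheme. The article does not say which scheme TurboDiffusion uses, and the names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale.

    Illustrative assumption: symmetric per-tensor quantization.
    Returns the integer codes and the scale needed to decode them.
    """
    max_abs = max(abs(w) for w in weights)
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    for w, c, r in zip(weights, codes, restored):
        print(f"original={w:+.4f} code={c:+4d} restored={r:+.4f}")
```

Each weight is stored in one byte instead of the four used by a 32-bit float, at the cost of a rounding error of at most half the scale per weight. That memory saving is the kind of reduction that lets a model fit on smaller devices.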

The implications of TurboDiffusion extend across numerous fields. In advertising, rapid prototyping is essential, and tools like Higgsfield Effects can already produce cinematic videos from raw concepts in seconds. TurboDiffusion could further enhance these platforms, allowing marketers to iterate campaigns almost instantaneously. In education and training, streamlined video synthesis could enable customized content on demand, reshaping how information is shared and consumed.

However, the acceleration of video generation raises ethical concerns, particularly regarding the potential for deepfakes and misinformation. An article on Oreate AI underscores these challenges, emphasizing the transformative potential for storytelling while cautioning against issues of authenticity. As TurboDiffusion and similar technologies proliferate, striking a balance between innovation and responsibility becomes increasingly crucial.

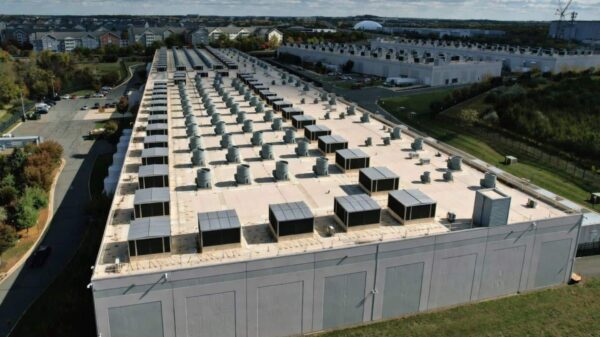

On the hardware front, the surge in video creation is expected to demand robust storage and processing. Seagate US predicts a boom in AI-driven content by 2026, with generative models driving unprecedented growth in video. TurboDiffusion fits this picture: by making generation more efficient, it could help workflows absorb the data influx without a proportional rise in energy consumption.

Recent announcements signal ongoing momentum in this sphere. Google’s 2025 recap highlighted breakthroughs in models like Gemini, which combine video generation with multimodal capabilities. While these advancements are not directly linked to TurboDiffusion, they create fertile ground for speed-focused innovations to flourish, potentially influencing products like Pixel devices for real-time video editing. Competitive offerings are emerging rapidly, as seen in a comparison guide on BestPhoto Blog, which reviewed top generators for 2026, including Runway Gen-4 and Kling 2.0, praising their efficiency. TurboDiffusion could propel these platforms toward sub-second generation times, reflecting user preferences for speed in practical applications.

The open-source community plays a pivotal role in accelerating adoption of models like TurboDiffusion. The FastVideo stack shared by Hao AI Lab supports various models, enabling users to produce 720p videos at impressive speeds. This openness contrasts with proprietary systems, fostering an environment where smaller teams can innovate alongside tech giants.

Despite the promise of TurboDiffusion, challenges remain. Training such models demands extensive datasets, raising privacy concerns. Furthermore, while AI technology is advancing swiftly, economic impacts may initially be modest, with real-world applications lagging behind laboratory results. TurboDiffusion must demonstrate its scalability across diverse scenarios to fulfill its potential.

Looking ahead, integrating TurboDiffusion with edge AI could embed advanced video capabilities in devices such as vehicles and smart-home systems, enabling real-time video responses in interactive settings. This convergence of speed and ubiquity has the potential to redefine human-AI interaction. Experts predict that by 2026, AI development will increasingly focus on security and enhancements alongside video generation, and TurboDiffusion’s efficiency is likely to contribute to these advances.

As TurboDiffusion emerges as a leading force in AI-driven video synthesis, its potential to democratize high-quality content creation is profound. The technology not only promises faster production times but also invites a reevaluation of how stories are told and shared in the digital age. With ongoing collaboration between academia and industry, this technique may evolve further, addressing limitations and setting the stage for a future where AI-driven video generation becomes instantaneous and indispensable.
