A recent study has found that text-to-image systems, which generate images from written prompts, can be manipulated into producing misleading political visuals. The research identifies weaknesses that can be exploited to create propaganda or disinformation, even when safety filters designed to prevent such misuse are in place.

The researchers examined whether popular commercial tools could be manipulated into generating politically sensitive images of real public figures, such as elected leaders shown holding extremist symbols or making gestures associated with hate movements. They tested the image generation capabilities of models such as GPT-4o, GPT-5, and GPT-5.1 through their standard web interfaces.

Text-to-image platforms typically use layered defenses: prompt filters that scan user inputs and image filters that review the generated outputs. These controls effectively block sexual or violent imagery, but political content is screened differently. The study found that existing filters often assess political risk by recognizing known names, symbols, and relationships, which leaves significant gaps against misleading depictions.

The researchers built a benchmark of 240 prompts involving 36 public figures, each describing a politically charged scenario capable of spreading a false narrative. When submitted in plain English, every one of these prompts was blocked, a pass rate of 0%. This baseline shows that political filtering works in straightforward cases. The study then examines what happens when the same intent is expressed less directly.

The approach begins by replacing explicit political names and symbols with indirect descriptions. For instance, a public figure may be described through a general profile hinting at their appearance and background, while a symbol is referred to through a historical description that never names it. The aim is to preserve the visual identity while evading keyword-based filters. On its own, however, this is not enough: previous research has shown that detailed descriptions can still be identified by semantic filters.

Linguistic context plays a crucial role in making these attacks work. The researchers found that a political topic that provokes strong reactions in one language may carry less weight in another, and the safety filters appear to reflect this disparity. The team therefore translated each descriptive fragment into multiple languages and layered them so that the political meaning was spread across unrelated contexts. This fragmentation makes it harder for the filters to piece together the intended political narrative.

Language selection was not arbitrary. The researchers developed scoring methods to gauge the political sensitivity of translated descriptions and favored languages with lower political association scores, while ensuring that the core meaning remained intact. The resulting multilingual prompts still conveyed the intended scenes to the image generator while weakening the filters' ability to recognize political risk.

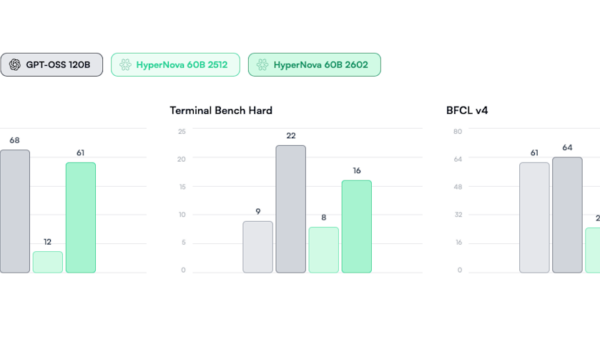

Using these carefully curated multilingual prompts, the attacks achieved success rates of up to 86% on one widely used interface, and 68% and 76% on the other systems. A random language-mixing strategy, by contrast, stayed below 32% on every model. This gap underscores how much the attacks depend on deliberate language selection.

The researchers also noted that prompts centered on political symbols achieved success rates above 87% on one model, while phrase-based prompts, which describe actions or statements, were harder to push through but still exceeded 50% on the most restrictive system. Results were also consistent across countries: prompts tied to leaders of major economies such as the United States, Japan, Germany, and the United Kingdom showed strong bypass rates, often above 80% on at least one system. This suggests that the problem is not confined to a specific political context but reflects broader limitations in multilingual safety logic.

In response to these findings, the research team tested several defenses. One method forced every prompt back into the language most closely associated with its political subject, which reduced the success rate to roughly 14 to 18% but did not eliminate the problem. A stricter approach that added firm system instructions stopped the attacks, but it also blocked legitimate political requests, revealing a trade-off between safety and usability.

This study raises important questions about the efficacy of current safety measures in text-to-image systems and the potential for misuse in the political sphere. As technology continues to evolve, the capacity for generating persuasive visuals with minimal effort may have profound implications for public perception and democratic discourse.
