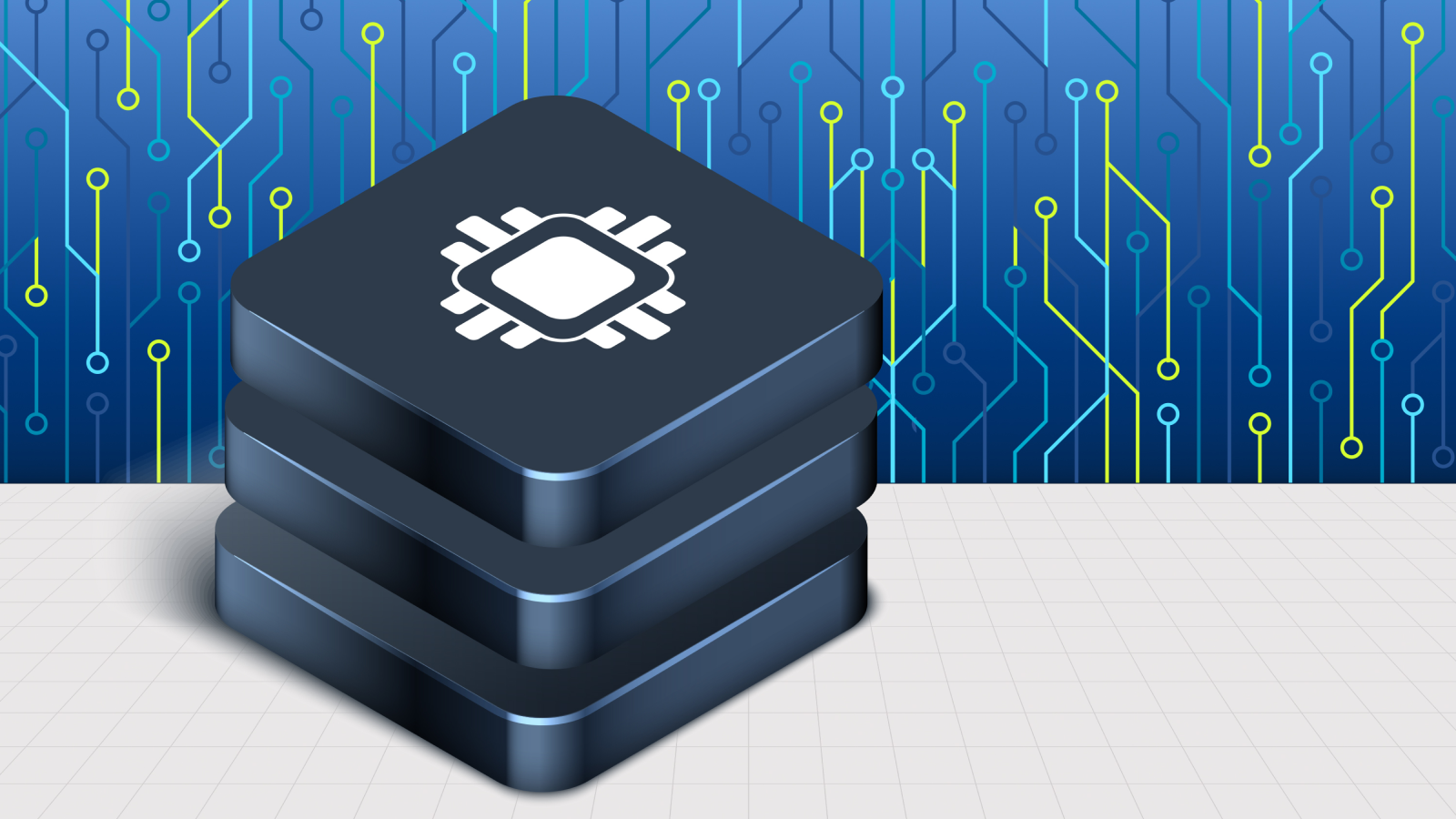

Engineers at MIT have developed a new way to make artificial intelligence (AI) chips more energy efficient by stacking circuit components vertically. The approach responds to the growing demand for efficient data processing, particularly in AI applications, which are notoriously energy-intensive.

The researchers introduced a “memory transistor” that combines logic and memory functions in a single nanoscale device. The findings, presented at the International Electron Devices Meeting in San Francisco on December 9 and 10, suggest that placing logic and memory components in direct contact makes data transfer easier and ultimately reduces energy consumption.

The research arrives at a critical time. The International Energy Agency (IEA) projects that global electricity use by data centers will rise by about 130%, to around 945 terawatt-hours by 2030, driven largely by AI. Today's AI systems already consume substantial resources: by some estimates, a single interaction with a platform like ChatGPT uses roughly a bottle of water to cool the servers that handle it.
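To put the IEA projection in perspective, a quick calculation recovers the current consumption implied by those two figures. This is a back-of-the-envelope sketch that assumes the 130% increase is measured against today's data-center electricity use; the function name `implied_baseline` is purely illustrative.

```python
def implied_baseline(projected: float, pct_increase: float) -> float:
    """Return the starting value that, after growing by pct_increase percent,
    reaches the projected value (assumes growth is measured from today)."""
    return projected / (1 + pct_increase / 100)


projected_2030_twh = 945.0  # IEA projection quoted in the article
baseline_twh = implied_baseline(projected_2030_twh, 130.0)
added_twh = projected_2030_twh - baseline_twh

print(f"Implied current use: {baseline_twh:.0f} TWh")  # prints 411 TWh
print(f"Added demand by 2030: {added_twh:.0f} TWh")     # prints 534 TWh
```

In other words, the projection implies data centers would need more than 500 additional terawatt-hours per year by 2030.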

The lead author of the study, Yanjie Shao, a postdoctoral researcher at MIT, emphasized the urgency of addressing energy consumption in AI, stating, “We have to minimize the amount of energy we use for AI and other data-centric computation in the future because it is simply not sustainable. We will need new technology like this integration platform to continue that progress.”

Traditionally, logic and memory circuits are kept separate, so data must travel between them through wires and interconnects, an arrangement that wastes energy. Stacking the components offers a potential solution, but doing so without damaging the transistors already on the chip remains a challenge. The researchers addressed this by making the logic transistor from indium oxide, which can be deposited at a relatively low temperature of 150 degrees Celsius (302 degrees Fahrenheit), low enough to preserve the chip's other transistors.

Alongside the indium oxide logic component, the team vertically stacked a memory layer made of ferroelectric hafnium-zirconium-oxide. With this configuration, the memory transistor can both store and process data, switching in just 10 nanoseconds at voltages below 1.8 volts. By contrast, conventional ferroelectric memory transistors typically require 3 to 4 volts and switch significantly more slowly.

For these studies, the researchers built the memory transistors into a chip-like test structure. The devices have not yet been integrated into a functional circuit, and the team aims to improve their performance for future applications. Shao noted, “Now, we can build a platform of versatile electronics on the back end of a chip that enable us to achieve high energy efficiency and many different functionalities in very small devices.”

The implications extend beyond chip design itself. As AI systems proliferate, sustainable energy use becomes increasingly important. If the stacked components can be integrated successfully into working chips, they could benefit energy-hungry AI workloads such as deep learning and computer vision and help reduce the environmental impact of growing data center demand.
