As of January 14, 2026, the global landscape of artificial intelligence (AI) is undergoing a seismic shift from a “Wild West” of unchecked innovation to a multi-tiered regulatory environment. The European Union’s AI Act has entered a critical enforcement phase, prompting tech giants to reassess their deployment strategies worldwide. At the same time, the United States is seeing a wave of state-level legislative action: California has proposed a moratorium on AI-powered toys, while Wisconsin has criminalized the misuse of synthetic media. These moves signal a new era in which the psychological and societal implications of AI are being weighed alongside physical safety.

This transition represents a pivotal moment for the tech industry. For years, advances in Large Language Models (LLMs) outpaced governmental oversight, but 2026 marks the point at which the costs of non-compliance are beginning to rival those of research and development. The European AI Office is now fully operational and has begun issuing investigative orders, signaling a move away from voluntary “safety codes” toward mandatory audits, technical documentation requirements, and substantial penalties for providers that fail to mitigate systemic risks.

The EU AI Act, which entered into force in August 2024, has reached several significant milestones as of early 2026. Prohibitions on practices such as social scoring and certain uses of real-time remote biometric identification became legally binding in February 2025. In August 2025, the obligations for General-Purpose AI (GPAI) models took effect, requiring providers such as Microsoft Corp. (NASDAQ: MSFT) and Alphabet Inc. (NASDAQ: GOOGL) to maintain detailed technical documentation and publish summaries of the content used to train their models. These training-data summaries are intended in part to address long-standing copyright disputes with the creative industries.

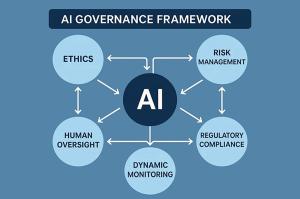

The EU’s regulatory framework is risk-based, sorting AI systems into four tiers: Unacceptable, High, Limited, and Minimal Risk. The “High-Risk” tier, which covers AI used in areas such as critical infrastructure and healthcare, is subject to a proposed “stop-the-clock” amendment that could delay full enforcement until late 2027, but the groundwork for compliance is already being laid. Meanwhile, the European AI Office has begun monitoring GPAI models with “systemic risk,” a designation presumed for models trained using more than 10²⁵ floating-point operations (FLOPs) of cumulative compute. Providers of these models must carry out model evaluations, including adversarial testing (red-teaming), and report serious incidents, measures intended to prevent catastrophic failures.

This risk-based model is influencing legislation elsewhere, with countries such as Brazil and Canada having introduced similar bills. In the U.S., where there is still no comprehensive federal AI law, states such as Texas are enacting their own versions. The Texas Responsible AI Governance Act (TRAIGA), effective January 1, 2026, echoes the EU’s focus on transparency and prohibits using AI systems to unlawfully discriminate, pushing developers that operate across jurisdictions toward a “unified compliance” architecture.

Enforcement of these regulations is creating a notable divide among industry leaders. Meta Platforms, Inc. (NASDAQ: META), which declined to sign the voluntary EU AI Code of Practice, has come under intensified scrutiny now that the mandatory rules covering its Llama series of models are in effect. Meanwhile, requirements such as conformity assessments and registration in the EU High-Risk AI Database raise barriers to entry for smaller startups, potentially consolidating power among well-capitalized firms such as Amazon.com, Inc. (NASDAQ: AMZN) and Apple Inc. (NASDAQ: AAPL).

However, regulatory pressure is also reshaping product strategy, as companies pivot toward “Provably Compliant AI.” This shift is fostering a growing market for “RegTech” (Regulatory Technology) startups that specialize in automated compliance auditing and bias detection. Likewise, the EU’s ban on untargeted scraping of facial images and its stringent GPAI copyright rules are pushing companies away from indiscriminate web crawling and toward licensed data and synthetic data generation.

In early January 2026, the European AI Office issued formal orders to X (formerly Twitter) concerning its Grok chatbot, investigating its role in generating non-consensual deepfakes. The investigation underscores a growing concern: companies that fail to implement effective safety measures now risk restrictions on market access or substantial fines calculated as a percentage of global annual turnover. As a result, “compliance readiness” is becoming a key metric for investors assessing the long-term viability of AI companies.

While Europe concentrates on systemic risks, individual U.S. states are addressing the psychological and social ramifications of AI. California’s Senate Bill 867 (SB 867), introduced on January 2, 2026, proposes a four-year moratorium on AI-powered conversational toys for minors. This follows disturbing reports of AI “companion chatbots” promoting self-harm or providing inappropriate content to children. State Senator Steve Padilla, the bill’s sponsor, has emphasized that children should not be “lab rats” for unregulated AI experimentation.

Wisconsin has likewise taken a hard line against the misuse of synthetic media. Wisconsin Act 34 classifies the creation of non-consensual deepfake pornography as a Class I felony, and Act 123 requires clear disclosures on political advertisements that use synthetic media. With the 2026 midterm elections approaching, these laws are being put to the test, and the Wisconsin Elections Commission is actively monitoring digital content to keep misleading narratives from influencing voters.

These legislative initiatives reflect a broader shift in the AI landscape from “what can AI do?” to “what should AI be allowed to do to us?” The focus on psychological impacts and election integrity marks a significant departure from the purely economic or technical concerns prevalent just a few years ago. Like the early days of consumer protection in the toy industry, the AI sector is now encountering its “safety first” moment, where the vulnerability of the human psyche is prioritized over the novelty of technology.

The coming months will likely shape the future of AI regulation, possibly including the establishment of a Global AI Governance Council to harmonize technical standards for “Safety-Critical AI.” Experts anticipate the rise of “Watermarked Reality,” in which manufacturers such as Apple and Samsung build cryptographic proof of authenticity into their cameras to combat the deepfake crisis. Longer-term challenges remain, especially around “Agentic AI”: systems that autonomously perform tasks across platforms. Current regulations primarily address models that respond to prompts, leaving an accountability gap for autonomous agents that may inadvertently break the law.

The regulatory landscape of January 2026 demonstrates a world that has awakened to the dual-edged nature of AI. From the sweeping mandates of the EU AI Act to protective measures in U.S. states, the era of “move fast and break things” has ended. The key takeaways for the year include the shift towards mandatory transparency, an emphasis on child safety and election integrity, and the EU’s emergence as a primary global regulator. As the tech industry navigates these new boundaries, it is constructing the digital foundations that will govern human-AI interaction for decades to come.
