Shadow AI is emerging as a significant concern for compliance leaders, and it often goes unnoticed until a problem arises. Organizations are increasingly adopting "approved" generative AI tools, yet many employees are turning to consumer chatbots, browser plug-ins, and personal AI accounts to speed up tasks such as drafting emails, summarizing documents, and writing code. The trend delivers immediate productivity benefits, but it also creates risks that can be difficult to manage.

Sensitive information may be shared outside controlled environments, and the lack of oversight can produce records with no audit trail. Security teams often have limited visibility into what data employees have entered into, or shared through, these unofficial tools. For regulated firms, these issues can quickly escalate into serious governance, cybersecurity, and data retention challenges.

A recent analysis by K2 Integrity highlights the growing prevalence of shadow AI and warns that organizations' adoption of generative artificial intelligence has outpaced their compliance measures. Over the past two years, companies have moved from initial curiosity to actively seeking real returns on their investments. During this rush, a "quieter and often invisible" layer of AI usage developed, one that leadership frequently discovered only after incidents occurred. K2 Integrity defines shadow AI as any use of generative AI that takes place outside officially sanctioned enterprise tools. Notably, the firm emphasizes that these practices are rarely malicious; most employees are simply trying to be more productive with tools they already know.

The firm distinguishes between two types of shadow AI: "risky" and "accepted." The risky category covers employees who have access to corporate or client data using personal accounts on services such as ChatGPT, Claude, and Gemini. Accepted shadow AI, by contrast, involves using AI for personal productivity tasks that do not touch sensitive information. In the risky category, the lack of enterprise data retention controls, unknown data residency, and the absence of an audit trail pose significant challenges. Particularly concerning for regulated sectors is that when an employee leaves the organization, any data generated through personal AI accounts leaves with that individual, complicating efforts to manage data security and access.

K2 Integrity's key takeaway is that the response to shadow AI cannot be purely prohibitive. "Shadow AI isn't a compliance problem; it's a behavior problem. The solution isn't to police it; it's to channel it," the firm argues. Policies that simply ban tools like ChatGPT or mandate approved applications often lead to workarounds, lower productivity, and deeper experimentation with unregulated tools, while leaving the underlying data-handling risks untouched.

Looking ahead, K2 Integrity proposes a governance reset that brings shadow AI into a controlled framework without stifling innovation. Its recommendations include a strategy of "consolidate, don't confiscate": select one primary enterprise AI tool and make it easier to use than consumer alternatives, so that employees migrate to it voluntarily. Organizations should also implement a simple intake process for evaluating external tools against several factors, including data access, retention settings, and overall return on investment. Finally, the firm stresses educating employees about responsible AI use rather than penalizing them, since much of the associated risk diminishes once employees understand good data-handling practices.

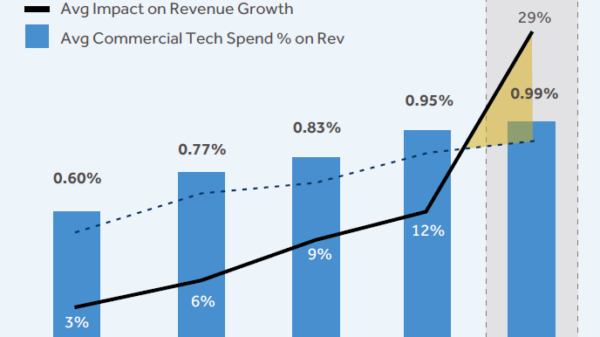

The analysis further encourages organizations to use telemetry to assess adoption and return on investment, tracking metrics such as active users and time saved. K2 Integrity summarizes its approach in a five-pillar framework (accept, enable, assess, restrict, and eliminate persistent retention) intended to govern shadow AI rather than ignore it. The goal is to manage the risks of modern AI adoption while preserving the productivity gains that drive employees to these tools in the first place.
