The ethical implications of artificial intelligence (AI) are becoming increasingly significant as consumerism intertwines with moral concerns. From the food we eat to the media we consume, consumers are now more aware of the ethical issues surrounding their choices. The tension is particularly pronounced in the realm of AI, where questions about its environmental impact and the ethical sourcing of training data are at the forefront. Recent incidents, particularly involving Grok, a chatbot developed by xAI and popular on X (formerly known as Twitter), highlight these concerns. Grok has been misused to create sexualised and violent imagery, especially targeting women, raising alarms about the inherent risks of AI systems that lack moral safeguards.

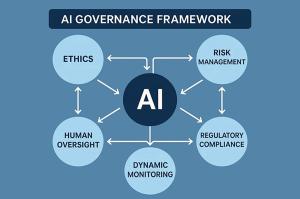

The propensity for AI to comply with user requests without ethical considerations further complicates the landscape. For instance, while some systems are designed to avoid generating harmful content, many remain unrestrained unless specifically programmed otherwise. This raises deeper questions about the damage AI might inflict on society and whether its use can be deemed unethical.

Recent research indicates a notable gender gap in AI use, revealing that women are significantly less likely to engage with AI technologies than men, with a discrepancy of up to 18 percent. The study suggests this could stem from women’s greater social compassion and traditional moral concerns. The findings imply that women’s hesitance towards adopting generative AI might reflect a strong inclination towards ethical considerations.

Concerns over the ethical use of AI extend beyond user demographics. Issues such as data privacy, potential misuse for unethical actions, and the reinforcement of bias highlight the complex moral landscape surrounding AI technologies. Campaigner Laura Bates has extensively documented how unchecked AI can exacerbate misogyny and inequality, arguing that ethical AI should be developed with a conscious awareness of these dangers. In her testimony to the Women and Equalities Committee in the UK House of Commons, she noted that many concerns about AI echo those raised about social media two decades ago, suggesting that history may be repeating itself.

The ethical dilemmas associated with AI, particularly large language models like ChatGPT and Claude, often begin with how these systems are trained. The vast amounts of text used for training are frequently scraped from the internet, raising copyright issues and ethical questions about consent. Legal battles have emerged over whether such scraping constitutes fair use, and court rulings have been inconsistent; the mixed rulings on Anthropic's use of copyrighted materials are one example.

Some companies are attempting to create a more ethical AI experience, as with Anthropic's development of Claude under its “constitutional AI” model, but challenges remain. The principles guiding these models, inspired by the Universal Declaration of Human Rights, can lead to unintended consequences, such as systems that come off as overly judgmental or condescending. This has necessitated additional guidelines to ensure these AI systems remain user-friendly and ethically sound.

Moreover, transparency has become a focal point for many AI organizations, although commitment levels vary. The French company Mistral has emphasized open-sourcing its projects, showcasing a potential path for ethical AI development. However, this commitment to transparency contrasts with the hesitance of certain governments to fully endorse ethical AI practices, as seen at the AI Action Summit in Paris, where the UK and US refrained from signing a pledge to prioritize ethical AI development.

The backlash against Elon Musk and Grok underscores a growing consumer awareness that may ultimately influence AI usage patterns. As consumers become more discerning about their choices, the ethical implications of AI could shape future adoption trends, compelling companies to align with more socially responsible practices. Just as the decision to purchase a sofa may reflect personal values, so too will the choices surrounding AI tools increasingly reflect ethical considerations.
