In a notable shift, one of the largest financial institutions in the United States announced in January that it would stop relying on external proxy advisory firms and instead use an internal artificial intelligence (AI) system to guide its votes on shareholder matters. The decision has largely been framed as a story about investor strategy, but its repercussions reach deep into corporate governance and raise fundamental questions about how boards will adapt to a landscape increasingly shaped by machine judgment.

Proxy advisory firms emerged over time as essential players in corporate governance. They were originally designed to address the growing complexity of proxy voting as institutional investors amassed stakes in thousands of companies, and they provided the scale and coordination needed to vote across a wide range of issues, including director elections, executive compensation, and mergers. Their influence grew not from mandates but from the efficiency and defensibility of their recommendations, which made them an indispensable resource for investors navigating an increasingly intricate environment.

Proxy advisors aimed to aggregate shareholder perspectives to provide a collective voice that could challenge corporate management, a concept rooted in the ideas of corporate governance activists such as Robert Monks. However, the systems designed to facilitate these processes gradually began to replace the nuanced judgment they once aimed to support, favoring standardized solutions that often lacked contextual understanding.

The current landscape reveals a growing tension between the efficiency of proxy advisory services and the depth of human judgment that governance decisions require. As scrutiny from regulators and political bodies has intensified, asset managers are questioning the wisdom of continuing to outsource such a critical fiduciary responsibility. This has prompted a reevaluation of proxy advisory models: advisory firms are beginning to offer more tailored recommendations, and large investors are building internal governance capabilities. The introduction of AI further complicates this transition.

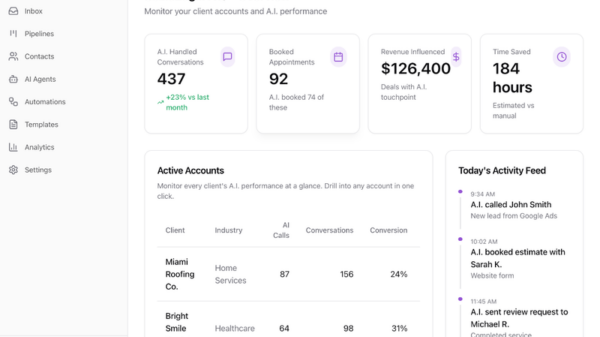

AI systems promise the same scalability, consistency, and speed that proxy advisors once provided, and they can efficiently process vast amounts of data from meetings, filings, and disclosures. However, the role of judgment in governance is not eliminated; it is shifted. The output of an AI system depends on model design, data inputs, and variable weightings. These choices are just as consequential as the voting policies of traditional proxy advisors, but they may be less transparent.

The reliance on AI for governance assessments means that boards must now consider a new audience: algorithms that evaluate information without the context human analysts often supply. Governance signals are no longer confined to proxy season; they are continuously interpreted by machines, making it vital for boards to ensure clarity in their disclosures to avoid being misread.

This new paradigm raises critical questions that many boards have yet to address. How is governance being assessed in real time? What nuances might be mischaracterized by AI systems that cannot interpret language with the same discretion as humans? Furthermore, when a judgment is wrong, accountability may rest with the asset manager, but the appeals process is often opaque or informal, leaving boards vulnerable to decisions made without adequate oversight.

Consider a scenario where a board chair shares a name with a former executive involved in a governance controversy. An AI scanning public data might mistakenly attribute that past issue to the current board chair, raising the company's perceived governance risk. Suppose the same board also decides to delay CEO succession to ensure stability during an acquisition. The decision is thoughtfully made, but its rationale is scattered across various communications, so the AI could flag the delay as a governance weakness without understanding the reasoning behind it. Such errors may go unchecked because no human analyst is in place to correct them before voting occurs.
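The name-collision failure in this scenario can be illustrated with a minimal sketch. Everything here is hypothetical: the `Person` and `Controversy` records, the example names, and the choice of birth year as a second identifier are assumptions made for illustration, not a description of how any asset manager's system actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    # Hypothetical record; real systems would carry far more identifiers.
    name: str
    company: str
    birth_year: int


@dataclass(frozen=True)
class Controversy:
    person: Person
    summary: str


def naive_flags(director, controversies):
    """Flag on name alone: the failure mode described in the scenario."""
    return [c for c in controversies
            if c.person.name.lower() == director.name.lower()]


def disambiguated_flags(director, controversies):
    """Require the name plus a second identifier (birth year, as an assumption)."""
    return [c for c in controversies
            if c.person.name.lower() == director.name.lower()
            and c.person.birth_year == director.birth_year]


# Two different people who happen to share a name.
chair = Person("Jane Doe", "Example Corp", 1965)
former_exec = Person("Jane Doe", "Other Holdings", 1948)
records = [Controversy(former_exec, "restated earnings")]

print(len(naive_flags(chair, records)))          # the chair is wrongly flagged
print(len(disambiguated_flags(chair, records)))  # no flag once identities are separated
```

The point of the sketch is that a board cannot see which of these two matching rules an investor's system applies, which is why disclosures that state directors' identities and histories unambiguously reduce the chance of a false match.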

While boards cannot dictate how asset managers construct their AI frameworks, they can adapt their governance practices. Some are already exploring clearer narrative disclosures that explicitly outline their governance philosophies, decision-making processes, and the factors that drive their judgments. This approach not only aids human analysts but also reduces the risk of misinterpretation by AI systems.

Engagement with investors should evolve to encompass discussions about the processes guiding decision-making, including how human judgment is applied and what protocols are in place for addressing factual disputes. This transition is not merely a technological challenge; it is fundamentally a governance challenge, requiring boards to document their decision-making processes and articulate their trade-offs in a coherent manner.

As corporate governance continues to evolve in an increasingly algorithmic age, the traditional assumptions that have governed board decisions are being upended. Silence, ambiguity, and inconsistency in disclosures can no longer be taken lightly. The boards that successfully navigate this changing landscape will be those that prioritize clarity and context in their communications, ensuring their governance narratives hold up against scrutiny, whether by human analysts or AI systems.
