The eSafety Commissioner has raised alarms about Grok, the generative artificial intelligence system on the social media platform X, amid concerns that the tool is being exploited to create sexualised or exploitative images of individuals. Over the past two weeks, officials have noted an uptick in reports about the misuse of Grok, rising from almost no complaints to several, prompting the regulator to act.

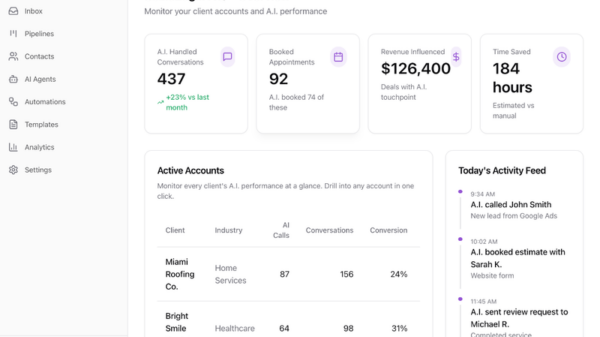

Although the volume of reports remains low, the eSafety Commissioner has indicated a readiness to use its legal powers, including issuing removal notices, whenever content crosses the thresholds set out in the Online Safety Act. Local families and schools are being urged to stay vigilant. X and similar platforms are already bound by systemic safety obligations under Australia’s industry codes, which require them to detect and remove child sexual exploitation material and other unlawful content.

In response to the emerging trend, the commissioner has directly contacted X, demanding clarity on the safeguards in place to prevent Grok’s misuse. This comes on the heels of a significant crackdown in early 2025, when enforcement actions resulted in the withdrawal of several popular nudify services from Australia after they were found to be targeting school children.

Stricter regulations are on the horizon for these technology giants, with new mandatory codes set to take effect on March 9, 2026. The codes will limit children’s access to sexually explicit or violent material through artificial intelligence services. They will also cover content related to self-harm and suicide, reflecting a broader effort to enhance online safety.

Currently, all platforms are expected to meet the government’s basic online safety expectations, which emphasize proactive measures to halt harmful activities before they gain traction. The scrutiny faced by X is not unprecedented; the company has previously been subject to transparency notices concerning its handling of child abuse material and its deployment of generative AI features. Australian authorities are collaborating with international child protection groups that have observed similar patterns of misuse involving Grok and other advanced digital tools on a global scale.

This wave of scrutiny is a reminder for parents to remain vigilant as safety by design becomes increasingly central to protecting children from emerging digital threats. The growing concern over the misuse of generative AI highlights the need for stronger safeguards and more effective regulatory frameworks as the technology evolves.
