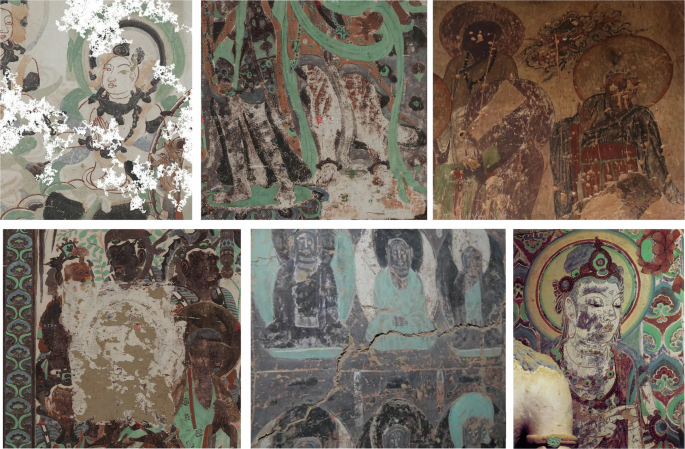

In a new study, researchers have introduced a method for restoring damaged murals using artificial intelligence. The approach aims to reconstruct severely damaged mural images while respecting their cultural significance and artistic style, which the authors present as a significant advance in heritage conservation.

The restoration process, highlighted in the study, revolves around the reconstruction of mural areas lost to aging or physical erosion. By utilizing remaining visual context and optional auxiliary inputs—such as edge maps or structural sketches—the framework generates a restored version that is both visually coherent and semantically appropriate, adhering closely to the original artistic conventions. This endeavor transcends simple pixel recovery, as it demands a high-level understanding of visual structure, cultural symbolism, and stylistic patterns.

The research acknowledges that although the restoration task resembles generic image completion, it differs fundamentally in purpose and constraints. The method must account for culturally significant iconography, compositional symmetry, and continuity of historical style, and the absence of ground-truth reference images complicates supervised learning. To address these difficulties, the team began with a meticulous data acquisition process that preserves crucial structural and stylistic information, then implemented a human-centered evaluation mechanism that combines visual analysis with expert judgment.

Technical Details

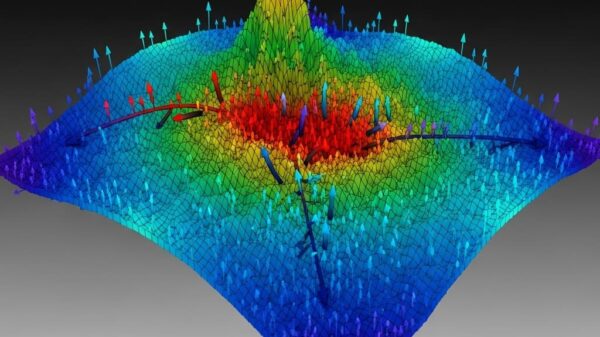

At the core of this study lies DiffuMural, a multi-stage, condition-guided diffusion framework designed for high-fidelity restoration. The model employs a multi-branch mask conditioning module that encodes the spatial locations of damaged areas and guides the diffusion process. During training, the mask conditions are supervised to improve alignment between the generated outputs and the structural conditions. A frequency-domain refinement module then adjusts texture and color in regions where the restoration is ambiguous.

To facilitate an effective restoration, the model first extracts weakly-supervised contours from damaged regions using a simple K-Means clustering algorithm. This initial spatial prior is not directly fed into the generation process; rather, it acts as a weak supervision signal, allowing the model to focus on informative areas without the need for manually annotated masks.
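As a rough illustration of how clustering can yield a contour prior without manual masks, the sketch below clusters pixel colors with a plain K-Means and marks the boundaries between clusters. It is a minimal NumPy sketch, not the authors' implementation: the function names, the choice of two clusters, the brightness-based initialization, and the neighbor-difference edge rule are all assumptions made for the example.

```python
import numpy as np

def kmeans_labels(image, k=2, iters=10):
    """Cluster pixels by color with plain K-Means; returns an (H, W) label map."""
    h, w, c = image.shape
    pixels = image.reshape(-1, c).astype(np.float64)
    # Assumed initialization: pick k pixels evenly spaced along the brightness ranking.
    order = np.argsort(pixels.mean(axis=1))
    centers = pixels[order[np.linspace(0, len(pixels) - 1, k).astype(int)]]
    for _ in range(iters):
        # Assign every pixel to its nearest center (squared Euclidean distance).
        dists = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of the pixels assigned to it.
        for j in range(k):
            members = pixels[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    return labels.reshape(h, w)

def contour_from_labels(labels):
    """Mark pixels whose right or lower neighbor belongs to a different cluster."""
    edges = np.zeros(labels.shape, dtype=bool)
    edges[:, :-1] |= labels[:, :-1] != labels[:, 1:]
    edges[:-1, :] |= labels[:-1, :] != labels[1:, :]
    return edges

# Toy image: dark left half standing in for intact pigment, bright right half for damage.
demo = np.full((8, 8, 3), 0.2)
demo[:, 4:] = 0.9
edges = contour_from_labels(kmeans_labels(demo))
```

On the toy image the only contour is the vertical boundary between the two halves. In line with the description above, such a map would serve as a weak supervision target rather than as a direct input to the generator.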

The diffusion process operates as a Markov chain, gradually adding Gaussian noise to the clean image over a series of timesteps. The training objective aims to predict this added noise, thereby training the model to denoise effectively. Conditional inputs guide the denoising process, allowing for controllable and structure-aware generation. This is complemented by a structure-aware mask learning mechanism that leverages predictions from a learnable mask branch.
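The forward noising step and the noise-prediction objective can be sketched in a few lines. This follows the standard denoising diffusion formulation, in which a noisy sample at timestep t can be drawn in closed form from the clean image. The linear noise schedule, the number of timesteps, and all function names are assumptions for illustration, since the article does not give the study's settings.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for an assumed linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, noise):
    """Closed-form forward step: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise

def noise_prediction_loss(predicted_noise, noise):
    """Mean squared error between the predicted and the actually added noise."""
    return float(np.mean((predicted_noise - noise) ** 2))

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
x0 = rng.uniform(-1.0, 1.0, size=(3, 16, 16))  # stand-in for a clean mural patch
noise = rng.standard_normal(x0.shape)
t = 500
x_t = q_sample(x0, t, alpha_bar, noise)
```

In a conditional model, the network that predicts the noise would receive `x_t`, the timestep, and the conditioning inputs (for example the mask); the loss itself keeps the same form.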

To further ensure that generated results adhere to structural conditions, the researchers implemented a reward consistency loss. A pretrained semantic decoder infers conditions from the output image, enabling an additional layer of assessment for generated content.

The overall training objective combines various loss functions to ensure that contour-derived spatial priors are effectively embedded throughout the restoration process. The integration of a Multi-head Self-Attention module enhances the model’s capacity to utilize long-range contextual dependencies, a particularly vital feature in the intricate task of mural restoration.
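To make the role of self-attention concrete, here is a minimal NumPy sketch of multi-head self-attention over a sequence of patch tokens. It shows the generic mechanism only: the token count, embedding size, head count, and random weights are placeholders, and how the module is wired into DiffuMural is not described in the article.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(tokens, w_q, w_k, w_v, w_o, num_heads):
    """tokens: (n, d). Returns the attended tokens (n, d) and weights (heads, n, n)."""
    n, d = tokens.shape
    if d % num_heads != 0:
        raise ValueError("embedding size must be divisible by num_heads")
    head_dim = d // num_heads

    def split(x):
        # (n, d) -> (heads, n, head_dim)
        return x.reshape(n, num_heads, head_dim).transpose(1, 0, 2)

    q, k, v = split(tokens @ w_q), split(tokens @ w_k), split(tokens @ w_v)
    # Every token scores every other token, so distant regions can inform each other.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)
    context = weights @ v  # (heads, n, head_dim)
    merged = context.transpose(1, 0, 2).reshape(n, d)
    return merged @ w_o, weights

rng = np.random.default_rng(0)
n_tokens, dim, heads = 6, 8, 2  # placeholder sizes
tokens = rng.standard_normal((n_tokens, dim))
w_q, w_k, w_v, w_o = (rng.standard_normal((dim, dim)) * 0.1 for _ in range(4))
attended, attn = multi_head_self_attention(tokens, w_q, w_k, w_v, w_o, heads)
```

Each row of `attn` sums to one, so every output token is a weighted mixture of all tokens, which is what lets a damaged region draw on intact areas far away in the image.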

In preparation for the study, a custom dataset of mural images was meticulously compiled, utilizing a high-precision scanning system that captures mural details non-destructively. This system generated a substantial dataset comprising over 8 terabytes of raw data from 25 representative Tang-dynasty caves, resulting in more than 650,000 image patches for training. These patches, standardized and sliced into multi-scale resolutions, serve as a high-quality training source, rich in spatial redundancy and contextual variety.
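The slicing step can be pictured as a sliding window applied at several patch sizes. The sketch below is illustrative only: the patch sizes, the half-patch stride, and the function name are assumptions, not values reported for the dataset.

```python
import numpy as np

def slice_patches(image, patch_size, stride):
    """Cut an (H, W, C) image into square patches with a sliding window."""
    h, w = image.shape[:2]
    patches = []
    for top in range(0, h - patch_size + 1, stride):
        for left in range(0, w - patch_size + 1, stride):
            patches.append(image[top:top + patch_size, left:left + patch_size])
    return patches

scan = np.zeros((512, 512, 3), dtype=np.uint8)  # stand-in for one scanned mural tile
multi_scale = {
    size: slice_patches(scan, patch_size=size, stride=size // 2)  # assumed 50% overlap
    for size in (128, 256)  # hypothetical scales
}
```

Overlapping windows are one way to obtain the spatial redundancy mentioned above, since neighboring patches share part of their content.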

The model uses a UNet-based architecture and was trained in a distributed setup across multiple GPUs with the Adam optimizer over several epochs. The training routine also included techniques such as data augmentation to improve robustness and generalization.

To validate the approach, the researchers compared their method against a range of contemporary inpainting techniques using established metrics such as Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). Together with the expert judgment described above, this evaluation covers both the technical accuracy and the cultural alignment of the restoration results.
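For readers unfamiliar with PSNR, it is a logarithmic measure of the mean squared error between a reference image and a restored one, with higher values meaning a closer match. The helper below is a minimal sketch assuming pixel values scaled to the range 0 to 1. The function name is hypothetical, and the article does not say how reference images were obtained for the comparison.

```python
import numpy as np

def psnr(reference, restored, max_value=1.0):
    """Peak Signal-to-Noise Ratio in decibels; returns inf for identical images."""
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))

reference = np.zeros((16, 16, 3))
restored = np.full((16, 16, 3), 0.1)  # uniform error of 0.1 gives an MSE of 0.01
score = psnr(reference, restored)     # about 20 dB
```

PSNR only measures pixel-level agreement, which is why it is typically reported alongside a structural metric such as SSIM.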

The implications of this research extend beyond technical novelty; they pave the way for enhanced cultural heritage preservation practices. The integration of AI within the realm of artistic restoration not only aids in reconstructing lost history but also serves as a bridge between modern technology and traditional art forms. As these methodologies evolve, they promise to redefine the future of mural restoration and cultural conservation.
