Researchers at the Nara Institute of Science and Technology (NAIST) in Japan, in collaboration with Osaka University, have developed a groundbreaking computational model aimed at understanding how humans form emotions. Led by Assistant Professor Chie Hieida and supported by Assistant Professor Kazuki Miyazawa and then-master’s student Kazuki Tsurumaki, the study was published in the journal IEEE Transactions on Affective Computing on December 3, 2025, following its online release on July 3, 2025. This research could pave the way for more emotionally aware artificial intelligence systems.

The study is based on the theory of constructed emotion, which posits that emotions are not instinctual responses but rather complex constructs formed by the brain in real time. This theory suggests that emotions arise from the integration of internal bodily signals (interoception), such as heart rate, with external sensory information (exteroception), such as visual and auditory stimuli. As Dr. Hieida noted, while there are existing frameworks that address how emotions are conceptually formed, the underlying computational processes have not been thoroughly examined.

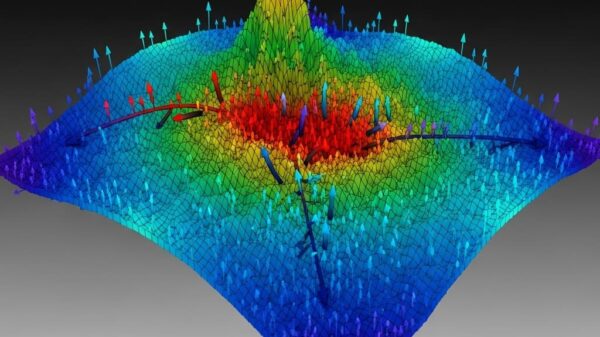

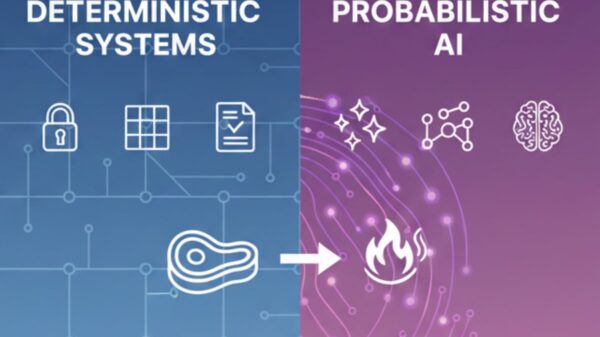

To investigate this phenomenon, the research team employed multilayered multimodal latent Dirichlet allocation (mMLDA), a probabilistic generative model designed to identify hidden statistical patterns in data. This model analyzes the co-occurrence of diverse data types without being pre-programmed with emotional labels, allowing it to discover emotional categories independently.
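To give a feel for how a shared latent concept can produce co-occurring patterns across data types, here is a minimal Python sketch of the generative process behind a plain, single-layer multimodal LDA. It is a simplification and not the team's multilayered mMLDA. The modality names, the number of concepts, and every probability below are hypothetical values chosen for illustration.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw one Dirichlet sample by normalizing independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def sample_categorical(probs, rng):
    """Return an index drawn according to the given probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate_trial(theta_alpha, phi_by_modality, tokens_per_modality, rng):
    """Generate one trial: a concept mixture shared by all modalities,
    then discrete feature tokens for each modality."""
    theta = sample_dirichlet(theta_alpha, rng)  # shared across modalities
    trial = {}
    for modality, phi in phi_by_modality.items():
        tokens = []
        for _ in range(tokens_per_modality):
            z = sample_categorical(theta, rng)  # latent concept for this token
            tokens.append(sample_categorical(phi[z], rng))  # observed feature
        trial[modality] = tokens
    return theta, trial

# Hypothetical setup: 2 latent concepts, 3 modalities, 4 feature symbols each.
phi_by_modality = {
    "vision":     [[0.7, 0.1, 0.1, 0.1], [0.1, 0.1, 0.1, 0.7]],
    "physiology": [[0.6, 0.2, 0.1, 0.1], [0.1, 0.1, 0.2, 0.6]],
    "language":   [[0.8, 0.1, 0.05, 0.05], [0.05, 0.05, 0.1, 0.8]],
}

rng = random.Random(0)
theta, trial = generate_trial([0.5, 0.5], phi_by_modality, 5, rng)
print(theta)
print(trial)
```

Because all three modalities draw from the same concept mixture, a trial dominated by one concept tends to show matching features in vision, physiology, and language at once. Inference runs this process in reverse: given only the observed tokens, it estimates the hidden concepts, so no emotional labels are needed.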

The model was trained on unlabeled data collected from 29 participants who viewed 60 images sourced from the International Affective Picture System, a widely recognized tool in psychological research. While the participants viewed the images, the researchers recorded their physiological responses, such as heart rate, using wearable sensors, and gathered verbal descriptions of their emotional states. This comprehensive approach captured the complex interplay of visual interpretation, bodily reactions, and self-reported emotional experiences.

When the model’s inferred emotion concepts were compared with the participants’ self-reported emotional evaluations, a substantial agreement rate of about 75% was observed. This rate significantly exceeds what could be anticipated by chance, indicating that the model’s categorizations closely align with human emotional experiences.
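As a rough illustration of what an agreement rate measures, the Python sketch below counts how often an inferred category matches a self-reported one. The category names and the four sample trials are hypothetical, and the sketch assumes the model's concepts have already been mapped to the same label set as the self-reports, a step the article does not describe.

```python
def agreement_rate(inferred, reported):
    """Fraction of trials where the inferred category equals the self-report."""
    if len(inferred) != len(reported):
        raise ValueError("both sequences must have the same length")
    if not inferred:
        raise ValueError("at least one trial is required")
    matches = sum(1 for a, b in zip(inferred, reported) if a == b)
    return matches / len(inferred)

# Hypothetical data: four trials, three of which agree.
inferred = ["pleasant", "unpleasant", "neutral", "pleasant"]
reported = ["pleasant", "unpleasant", "pleasant", "pleasant"]

rate = agreement_rate(inferred, reported)
print(rate)  # 0.75
```

A figure like this is only meaningful relative to a chance baseline, which depends on how many categories there are and how often each one occurs.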

The implications of this research extend beyond academic interest; it suggests a pathway toward creating more responsive and human-like AI systems. “Integrating visual, linguistic, and physiological information into interactive robots and emotion-aware AI systems could enable more human-like emotion understanding and context-sensitive responses,” Dr. Hieida emphasized. Such advancements could facilitate enhanced interactions between humans and machines, making technology more intuitive and relatable.

Moreover, the model's ability to infer emotional states that individuals might find difficult to articulate verbally could yield significant benefits in fields such as mental health support, healthcare monitoring, and assistive technologies for individuals with developmental disorders or dementia. By providing a computational framework that connects theoretical understanding of emotions with empirical validation, this research addresses a critical question at the intersection of psychology and technology.

In conclusion, the work by Dr. Hieida and her team not only contributes to the academic understanding of emotion formation but also sets the stage for practical applications that could transform how we interact with AI and robotics, ultimately leading to more emotionally intelligent machines capable of responding to human needs with greater sensitivity. As the field of AI continues to evolve, the integration of emotionally aware systems may soon become a critical aspect of technological development.

Source: Nara Institute of Science and Technology

Journal reference: DOI: 10.1109/TAFFC.2025.3585882
