SEOUL, Jan. 22 (Yonhap) — South Korea on Thursday became the first country to put into effect a comprehensive law governing the safe use of artificial intelligence (AI) models. The legislation, the Basic Act on the Development of Artificial Intelligence and the Establishment of a Foundation for Trustworthiness, sets out a regulatory framework aimed at combating misinformation and other hazards associated with AI technologies.

The act is a milestone because it introduces the world's first government-mandated guidelines on AI use. Central to the legislation is a requirement that companies and AI developers take greater responsibility for problems such as deepfakes and misinformation generated by their models. The government is authorized to impose fines and launch investigations into violations of the new rules.

The act defines “high-risk AI” as models whose outputs could significantly affect users’ daily lives and safety, particularly in areas such as employment, loan screening and medical advice. Entities using high-risk AI models must clearly inform users that their services are AI-based and must ensure the safety of those models. In addition, content generated by AI models must carry watermarks identifying it as AI-generated. A ministry official said that “applying watermarks to AI-generated content is the minimum safeguard to prevent side effects from the abuse of AI technology, such as deepfake content.”

Under the new rules, global companies offering AI services in South Korea that meet certain thresholds (annual global revenue of at least 1 trillion won, or about US$681 million; domestic sales of at least 10 billion won; or at least 1 million daily users) must appoint a local representative. Major companies such as OpenAI and Google currently meet these criteria.

Violations of the act can result in fines of up to 30 million won. To ease compliance, the government plans a one-year grace period before penalties are enforced, giving the private sector time to adapt to the new legal landscape. The legislation also includes provisions for promoting the AI industry, requiring the science minister to present a policy blueprint every three years.

This pioneering legal framework not only aims to safeguard users from potential risks posed by AI technologies but also positions South Korea as a leader in the global discourse on AI regulation. As countries around the world grapple with the implications of rapidly advancing AI technologies, South Korea’s proactive approach could serve as a model for future regulations worldwide.

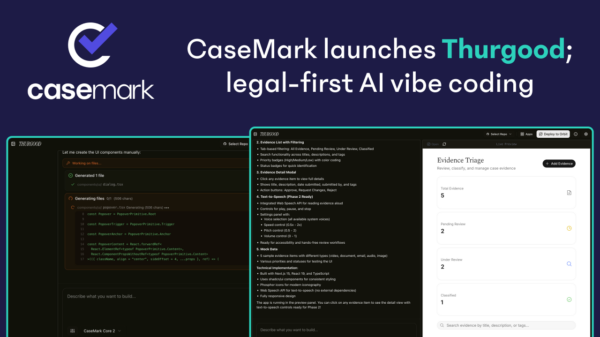
