SAN FRANCISCO, Jan. 22, 2026 /PRNewswire/ — FlashLabs, an applied AI research and engineering lab, has launched Chroma 1.0, touted as the world’s first open-source, end-to-end, real-time speech-to-speech AI model capable of personalized voice cloning. This innovative system aims to eliminate latency issues that have long hampered human-AI interaction, enabling more fluid and immediate conversations.

Chroma operates natively in voice, bypassing the traditional cascaded pipeline of automatic speech recognition (ASR), a large language model (LLM), and text-to-speech (TTS). According to the company, this architecture allows for natural conversational exchanges that feel more human-like and responsive.

“Voice is the most universal interface in the world, yet it has remained closed, fragmented, and delayed,” stated Yi Shi, Founder and Chief Research & Engineering at FlashLabs. “With Chroma, we’re open-sourcing real-time voice intelligence so builders, researchers, and companies can create AI systems that truly work at human speed.”

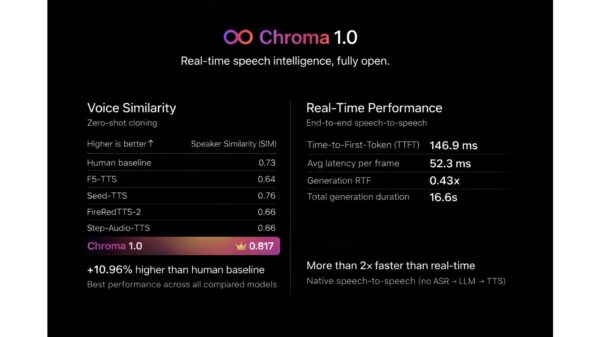

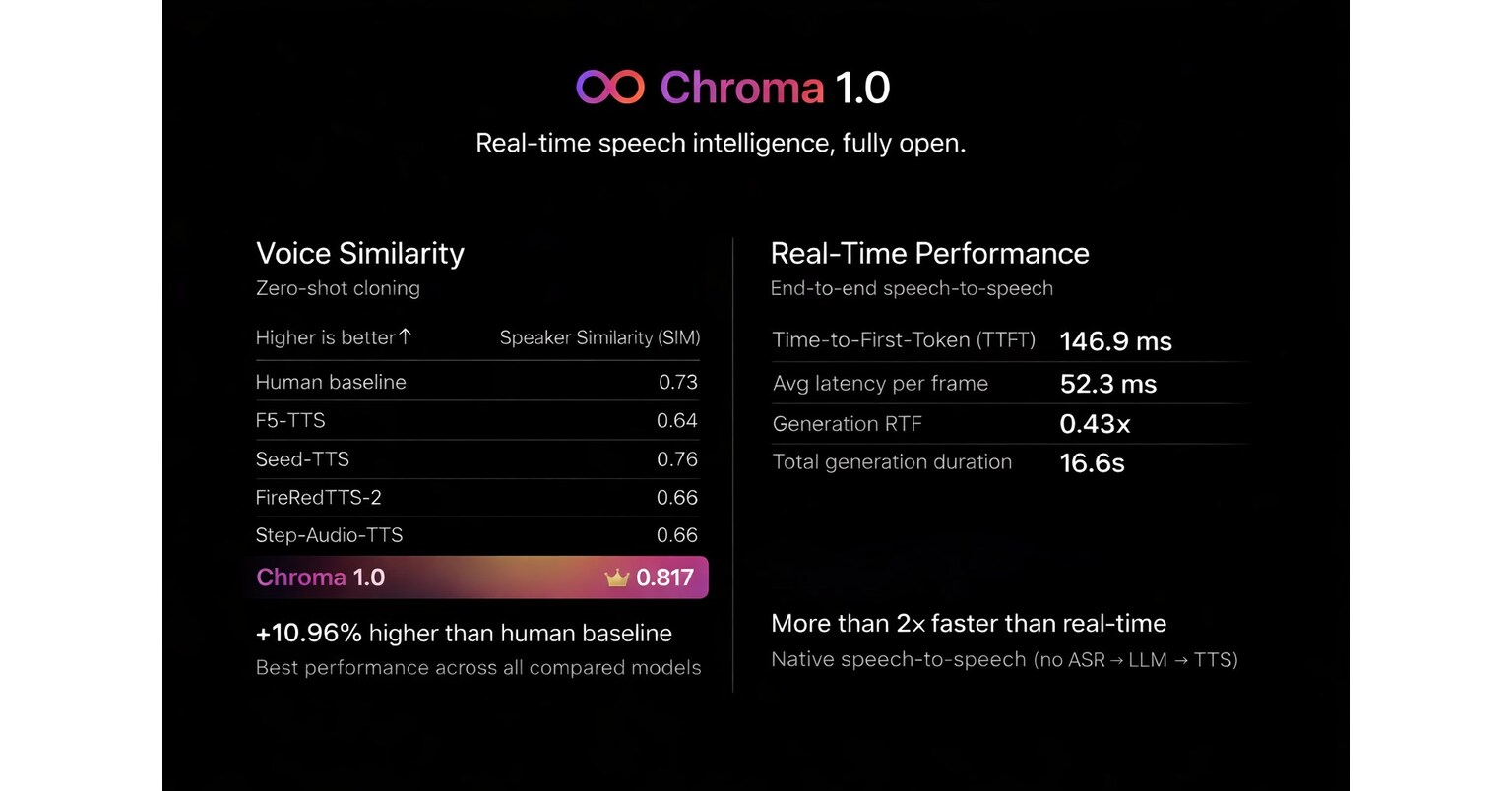

Chroma is designed specifically for real-time applications and achieves an end-to-end time-to-first-token (TTFT) of under 150 milliseconds. This supports natural conversational turn-taking, low-latency emotional and prosodic control, and stable real-time inference free from cascading delays. With Day-0 SGLang support, latency drops further to approximately 135 ms TTFT, which the company says makes the model suitable for live deployment.
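TTFT is the delay between sending a request and receiving the first piece of output. The sketch below shows one generic way to measure it against any streaming response. It is not FlashLabs' benchmark method; `fake_audio_stream` is a hypothetical stand-in for a model's streamed audio, not part of Chroma or SGLang.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_ttft(stream: Iterable[bytes]) -> Tuple[float, int]:
    """Return (seconds until the first chunk arrives, total number of chunks)."""
    start = time.perf_counter()
    ttft = None
    count = 0
    for _chunk in stream:
        if ttft is None:
            # Time elapsed from the start of consumption to the first output chunk.
            ttft = time.perf_counter() - start
        count += 1
    if ttft is None:
        raise ValueError("stream produced no output")
    return ttft, count


def fake_audio_stream(first_delay_s: float, n_chunks: int) -> Iterator[bytes]:
    """Hypothetical model stand-in: waits, then yields fixed-size audio chunks."""
    time.sleep(first_delay_s)  # simulated model latency before the first chunk
    for _ in range(n_chunks):
        yield b"\x00" * 320  # placeholder PCM bytes


if __name__ == "__main__":
    ttft, chunks = measure_ttft(fake_audio_stream(0.05, 5))
    budget_s = 0.150  # the sub-150 ms target quoted above
    print(f"TTFT {ttft * 1000:.1f} ms over {chunks} chunks; within budget: {ttft < budget_s}")
```

Because the generator is lazy, the simulated delay only starts once the first chunk is requested, so the timer captures it. In a real measurement the clock would start when the audio request is sent.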

One of Chroma's standout features is few-second reference voice cloning, which lets users create highly realistic, personalized voices from minimal audio input. Internal evaluations report a speaker similarity score of 0.817, which is 10.96% above the human baseline and which the company describes as best-in-class among both open and closed systems.
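The release does not say how speaker similarity is computed. A common approach, assumed here, is the cosine similarity between speaker embeddings of the reference and generated audio. The sketch also backs out the human baseline implied by the quoted figures, assuming "10.96% above" is a relative gain rather than a difference in absolute points. Both function names are illustrative.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (assumed metric)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-length embedding")
    return dot / (norm_a * norm_b)


def implied_baseline(score: float, relative_gain: float) -> float:
    """Solve score = baseline * (1 + relative_gain) for baseline."""
    return score / (1.0 + relative_gain)


if __name__ == "__main__":
    # Quoted figures: 0.817 similarity, 10.96% above the human baseline.
    print(f"implied human baseline ~ {implied_baseline(0.817, 0.1096):.4f}")
```

Under the relative-gain reading, the implied baseline is about 0.736. If the 10.96% were instead absolute points, the baseline would be 0.817 - 0.1096 = 0.7074.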

Despite using a compact architecture of approximately 4 billion parameters, Chroma offers robust reasoning and dialogue capabilities. The model leverages modern multimodal backbones and optimized real-time inference, making it suitable for various applications, including edge deployment, virtual agents, call centers, and interactive systems where latency and cost are critical factors.

Chroma enables a new range of real-time voice applications, from autonomous voice agents and AI call centers to real-time translators and conversational assistants. Its potential also extends to interactive characters and non-player characters (NPCs) in gaming, as well as multimodal AI systems that require seamless communication across different modes.

Chroma 1.0 is available today, representing a significant advancement in voice-based AI. FlashLabs continues to focus on building open and production-grade systems that enhance agency across voice, text, and actions in various domains.

Media inquiries can be directed to Koki Kobayashi at 650-609-7501 or by email.
