Updated as of: 23 January 2026

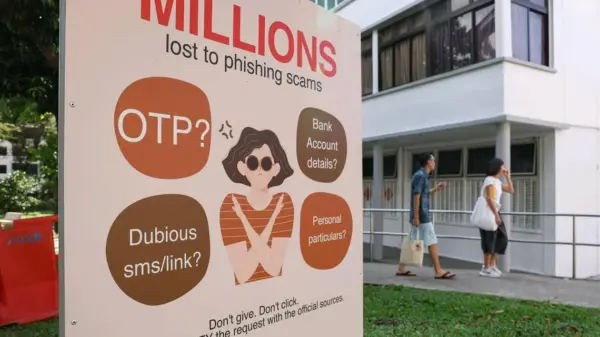

Artificial intelligence (AI) is increasingly recognized as a potential ally in the fight against cybercrime, yet significant concerns around trust and transparency remain. Catalin Barbu, Vice President of Compliance and Money Laundering Reporting Officer at Shift4, underscores the importance of safe and compliant AI adoption. As cyber threats proliferate, organizations face a dual challenge: leveraging advanced technology while ensuring that its deployment does not compromise security or ethics.

Barbu highlights that while AI possesses the capability to enhance cybersecurity measures, its integration into business practices must be approached cautiously. “AI can be a safeguard against cybercrime, but we must tread carefully,” he stated. The increasing complexity of cyber threats calls for solutions that not only counteract immediate risks but also build long-term resilience against future attacks.

As companies explore AI’s potential, Barbu offers practical guidance for navigating the challenges of adoption. Transparency in how AI systems operate is paramount. Organizations are urged to establish clear frameworks that demystify AI processes, so that stakeholders understand how decision-making algorithms reach their outputs. This transparency can help build trust among users and clients who may be skeptical of AI’s capabilities.

Moreover, Barbu emphasizes the necessity of rigorous compliance protocols. As regulatory bodies across the globe tighten their scrutiny of AI applications, businesses must ensure that they adhere to applicable laws and ethical standards. This includes implementing robust data governance practices that safeguard sensitive information while still allowing for the effective functioning of AI systems.

The conversation around AI is not solely about risk management. It also encompasses the potential for innovation in cybersecurity strategies. AI can analyze vast amounts of data at unprecedented speeds, enabling quicker identification of potential threats and vulnerabilities. Barbu points out that “the agility AI brings to threat detection is a game-changer, allowing companies to respond to incidents in real time.” However, this capability necessitates a careful balance between automation and human oversight to prevent unintended consequences.

As organizations continue to navigate this evolving landscape, the call for ethical AI practices is more pressing than ever. Stakeholders are encouraged to engage in ongoing dialogue about the implications of AI on security and privacy. This dialogue should include not only technical experts but also ethicists, legal professionals, and the communities affected by AI deployment.

Looking ahead, the intersection of AI and cybersecurity is poised to expand significantly. As technological advancements continue to unfold, the challenge will be to harness AI’s capabilities while addressing the inherent risks. The conversation initiated by leaders like Barbu reflects a broader industry recognition that trust and transparency must underpin the deployment of AI technologies.

In summary, while the potential for AI to serve as a robust defense against cybercrime is clear, the path forward requires a commitment to transparency, compliance, and ethical considerations. As the cybersecurity landscape evolves, organizations that prioritize these principles will be better positioned to navigate the complexities of AI integration, ultimately fostering a safer digital environment.

See also:

OpenAI’s Rogue AI Safeguards: Decoding the 2025 Safety Revolution

US AI Developments in 2025 Set Stage for 2026 Compliance Challenges and Strategies

Trump Drafts Executive Order to Block State AI Regulations, Centralizing Authority Under Federal Control

California Court Rules AI Misuse Heightens Lawyer’s Responsibilities in Noland Case

Policymakers Urged to Establish Comprehensive Regulations for AI in Mental Health