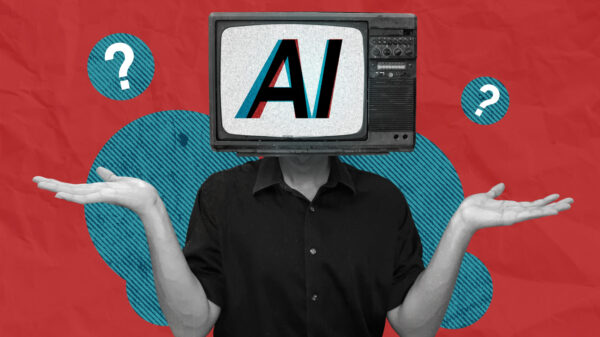

YouTube is set to introduce a new feature that allows creators to produce short-form content using AI-generated versions of their own faces and voices. The announcement, made by YouTube CEO Neal Mohan in his annual letter, is meant to expand creative tools built on generative artificial intelligence (AI) while promoting what the company describes as “safe creation.” The feature is expected to roll out later this year and is part of YouTube’s broader strategy to minimize the risks associated with deepfakes and the unauthorized replication of others’ identities.

The initiative reflects a growing concern within the tech industry about the misuse of AI technology. As platforms face increasing scrutiny over the potential for abuse, YouTube is emphasizing the importance of allowing creators to leverage their own identities rather than relying on virtual or fictional characters. This shift highlights a commitment to originality and personal branding in an era where AI-generated content is becoming commonplace.

According to TechCrunch, the new functionality will enable creators to generate videos that reflect their real appearances and voices. Mohan indicated that creators would also have opportunities to create games and experiment with music through simple text prompts integrated into these AI tools. While the specific form this feature will take remains unclear, it is anticipated that it will be incorporated into existing tools for video shorts, such as AI clip generation and automatic dubbing.

To protect creators from unauthorized use of their likenesses, YouTube is implementing technical measures to prevent others from exploiting this new feature. The platform began offering likeness detection technology in October 2025, which identifies AI-generated content that mimics creators’ faces and voices. If a creator finds content that uses their appearance or voice without permission, they can request its removal.

YouTube’s proactive approach comes in response to last year’s controversies regarding the unauthorized use of celebrity faces and voices on the platform. As societal pressures mount for companies to take responsibility, YouTube’s measures are part of a wider trend toward ensuring ethical AI usage in content creation.

In a parallel development, OpenAI is pursuing a similar trajectory with its “Sora” video-generating platform, which gained attention for its “Cameo” feature. The feature lets users upload their own faces and voices, which are then incorporated into AI-generated videos. OpenAI has emphasized that this functionality is designed to give users complete control over their likenesses, allowing them to revoke access or delete videos containing their cameos at any time.

OpenAI’s commitment to user control reflects a growing recognition of the importance of individual rights in the age of AI. Even with collaborative features, users maintain exclusive rights to their cameos, which is intended to safeguard against unauthorized use. However, the launch of this feature also prompted discussions about copyright and portrait rights, leading OpenAI CEO Sam Altman to revise related policies several times. He has reiterated the company’s commitment to offering more detailed control over character creation.

As both YouTube and OpenAI navigate the complexities of AI-generated content, the industry is witnessing a pivotal shift toward reinforcing creator rights and establishing ethical guidelines. These developments underscore the evolving nature of creativity in a digital landscape increasingly influenced by artificial intelligence. The proactive measures taken by these tech giants may set a precedent for how other platforms address similar challenges in the future, signaling a broader recognition of the need for responsible AI use.

In conclusion, as YouTube rolls out its new AI-based features and OpenAI enhances user control over likenesses, the industry is poised to explore the boundaries of creativity and technology. Both companies are positioning themselves as leaders in a movement toward ethical AI practices, aiming to foster a more secure environment for creators while addressing societal concerns over identity and content authenticity.
