BlackRock Deploys AI Framework to Tackle Dirty Data Across Financial Modeling Pipelines

BlackRock has unveiled a new research framework aimed at addressing a significant issue in finance: dirty data. The firm’s initiative focuses on enhancing data quality within financial modeling pipelines, a persistent challenge that has often been underestimated in regulated environments.

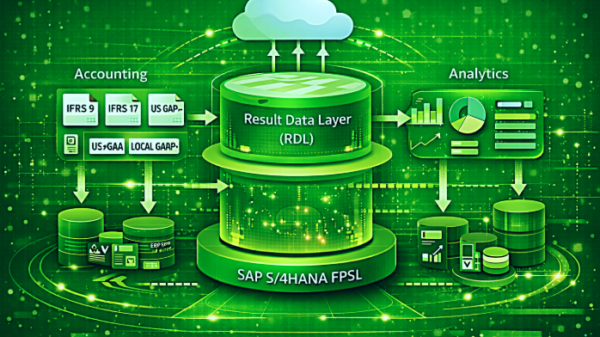

The framework is designed to continuously monitor data quality throughout the entire modeling pipeline, shifting away from traditional methods that treat data cleaning as a one-off task. BlackRock researchers emphasize that in highly automated and regulated systems, even minor errors—such as misplaced decimals or missing identifiers—can lead to significant system failures. Their proposed solution integrates governance and quality checks directly into production workflows to mitigate these risks.

The framework introduces a governed quality layer at three critical stages of data handling. The first stage, data ingestion, focuses on standardizing incoming vendor feeds, enforcing schema rules, and identifying duplicates or missing identifiers, such as CUSIPs. This proactive approach aims to catch structural issues before the data enters downstream systems.

At the model check stage, the framework shifts its focus from raw inputs to monitoring the behavior of the models themselves. For example, if an asset pricing model produces implausible yield gaps or displays inconsistent relationships, the framework will flag this as a local anomaly, even if the data itself appears valid. This level of scrutiny is essential for maintaining data integrity in complex financial models.

The final stage involves validating outputs before they reach downstream systems or decision-makers. This final check is crucial for preventing contaminated signals from affecting trading risk management or reporting processes. The comprehensive approach underscores BlackRock’s commitment to ensuring data integrity at every phase.

One notable outcome from BlackRock’s research is the improved quality of alerts generated by its AI-based data completion module. Benchmark tests revealed that using AI to fill in missing values, rather than dropping incomplete records, reduced false positive rates from approximately 48% to about 10%. The system also achieved around 90% recall and 90% precision, reflecting an improvement of over 130% compared to baseline methods.

The framework is already operational, functioning across both streaming and tabular data and spanning multiple asset classes. This implementation indicates that BlackRock’s research is not merely theoretical but represents a practical solution embedded within one of the world’s largest asset management firms.

This development illustrates a broader trend within the financial sector, where the growing complexity of models and increasing volumes of data have made robust data integrity a necessity. BlackRock’s work highlights that data quality is evolving into a core component of modern financial systems, rather than being treated as a preprocessing step relegated to the sidelines.

For those interested in further insights, Dr. Dhagash Mehta, Head of BlackRock Applied Artificial Intelligence Research for Investment Management, will co-write a paper presented at QuantVision 2026: Fordham’s Quantitative Conference.

See also Finance Ministry Alerts Public to Fake AI Video Featuring Adviser Salehuddin Ahmed

Finance Ministry Alerts Public to Fake AI Video Featuring Adviser Salehuddin Ahmed Bajaj Finance Launches 200K AI-Generated Ads with Bollywood Celebrities’ Digital Rights

Bajaj Finance Launches 200K AI-Generated Ads with Bollywood Celebrities’ Digital Rights Traders Seek Credit Protection as Oracle’s Bond Derivatives Costs Double Since September

Traders Seek Credit Protection as Oracle’s Bond Derivatives Costs Double Since September BiyaPay Reveals Strategic Upgrade to Enhance Digital Finance Platform for Global Users

BiyaPay Reveals Strategic Upgrade to Enhance Digital Finance Platform for Global Users MVGX Tech Launches AI-Powered Green Supply Chain Finance System at SFF 2025

MVGX Tech Launches AI-Powered Green Supply Chain Finance System at SFF 2025