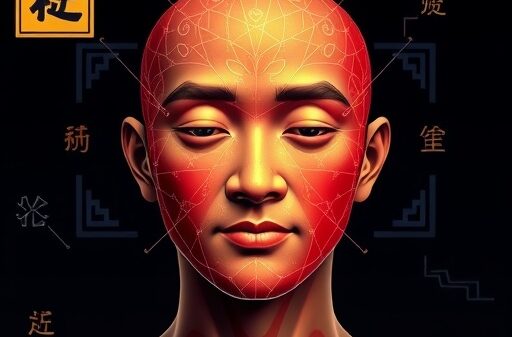

In a striking incident from 2024, a finance employee at a multinational firm in Hong Kong unwittingly authorized over $25 million in transfers after joining what he believed was a legitimate video conference. The call featured individuals he recognized, including the company’s chief financial officer, but unbeknownst to him, every participant was a deepfake created using advanced artificial intelligence. This sophisticated AI-enabled attack demonstrates how attackers can now manipulate trusted communication channels to exploit organizational weaknesses.

This incident is indicative of a broader trend in cybersecurity, where traditional defenses struggle to keep pace with rapidly evolving threats. According to Bitdefender’s 2025 Cybersecurity Assessment, 63 percent of IT and cybersecurity professionals reported encountering AI-driven attacks in the past year. Similarly, Microsoft’s 2025 Digital Defense Report revealed that threat actors are leveraging AI to automate phishing schemes, enhance social engineering efforts, generate malware, and rapidly identify system vulnerabilities.

The reality is stark: cybercriminals are already harnessing artificial intelligence, and organizations that fail to adopt similar strategies risk falling behind. Ten years ago, crafting high-quality phishing emails and executing social engineering attacks required specialized skills and time. Today, these tasks can be accomplished in mere seconds using consumer-grade AI models, enabling attackers to scale their operations beyond what human capabilities allow.

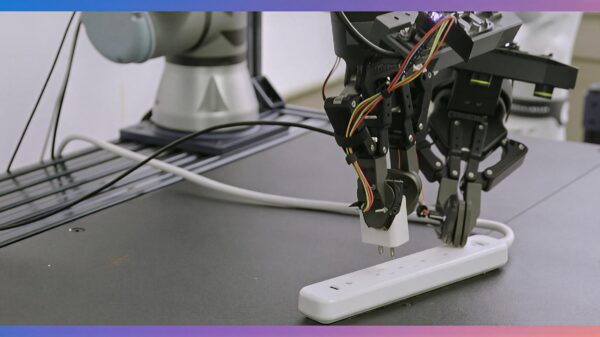

Among the most prevalent AI-driven attack methods are deepfakes and synthetic identity fraud. AI technologies are now capable of convincingly replicating voices, images, and videos, enabling attackers to impersonate executives and employees with remarkable accuracy. This level of deception complicates detection efforts and increases the likelihood of successful attacks.

AI has also transformed phishing and social engineering tactics. By generating personalized, natural-sounding messages that mimic an organization’s communication style, attackers can create phishing attempts that are nearly indistinguishable from legitimate correspondence. Furthermore, once inside a network, attackers can employ living-off-the-land tactics, utilizing legitimate tools and services to conceal their activities, a method made easier by AI’s ability to identify and exploit these resources swiftly.

These AI-enhanced attack vectors contribute to heightened financial and reputational risks for organizations. Breaches that begin with phishing alone can cost nearly $5 million on average, while ransomware groups increasingly resort to leaking sensitive data publicly to exert pressure on victims.

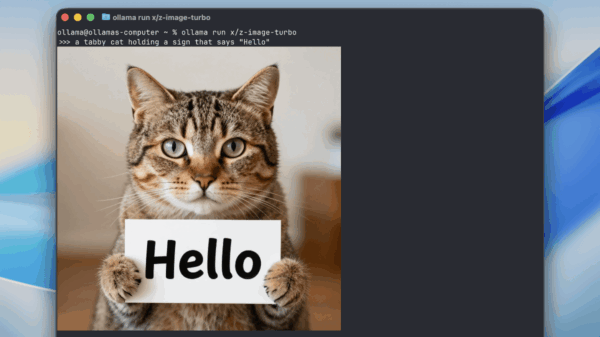

To counter these AI-driven threats, organizations must leverage AI themselves. Human analysts cannot match the speed of machine-driven intrusions, and traditional rule-based systems are ill-equipped to detect rapidly changing threats. By integrating AI into cybersecurity strategies, organizations can enhance their defenses significantly.

AI systems, for instance, can provide real-time intrusion detection by analyzing network traffic and user behavior to flag anomalies before they escalate into serious breaches. Additionally, AI can facilitate cross-domain threat correlation, ingesting and analyzing data from various sources to identify genuine threats amidst a multitude of alerts. Furthermore, AI enables automated incident response, allowing security teams to isolate compromised devices, block malicious activity, and notify relevant personnel within seconds—often thwarting attacks before they spread.
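To make the idea of behavior-based detection concrete, here is a minimal sketch in Python. It is not how any particular product works: it assumes a hypothetical baseline of logins per hour and flags accounts whose activity sits far above it using a simple z-score. The account names, the baseline values, and the threshold of 3.0 are all illustrative assumptions, and production systems rely on far richer models.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, observed, threshold=3.0):
    """Return (account, value, z_score) for values far above the baseline.

    baseline: historical counts considered normal (hypothetical data)
    observed: mapping of account name -> count in the current window
    threshold: number of standard deviations treated as anomalous (assumed)
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for account, value in observed.items():
        # Guard against a flat baseline, where the z-score is undefined.
        z = (value - mu) / sigma if sigma > 0 else 0.0
        if z > threshold:
            flagged.append((account, value, round(z, 2)))
    return flagged

# Hypothetical logins-per-hour figures, for illustration only.
baseline_logins_per_hour = [4, 6, 5, 7, 5, 6, 4, 5]
observed = {"alice": 6, "bob": 5, "svc-backup": 42}

alerts = flag_anomalies(baseline_logins_per_hour, observed)
for account, value, z in alerts:
    print(f"anomaly: {account} made {value} logins (z-score {z})")
```

In this toy data only the `svc-backup` account is flagged. A real deployment would feed such a flag into an automated response step, for example isolating the device or notifying an analyst.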

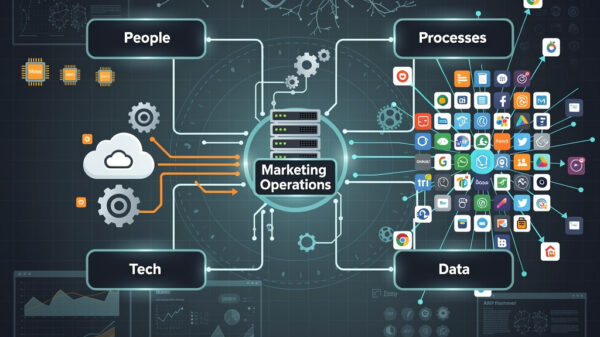

While adopting AI in cybersecurity is crucial, it should not replace human security teams. Instead, the technology should augment them, providing the speed, precision, and scalability needed to combat AI-enabled adversaries. A strong foundation for AI-enhanced cybersecurity typically includes Extended Detection and Response (XDR) and Security Information and Event Management (SIEM) systems. These platforms unify threat detection across different environments and use AI to prioritize alerts and uncover anomalies.
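As a rough illustration of how alerts from different sources might be unified and prioritized, the sketch below groups alerts by the affected user and ranks users higher when several independent sources agree. The alert fields (`source`, `entity`, `severity`) and the scoring rule are assumptions made for this example; they do not reflect any specific XDR or SIEM product.

```python
from collections import defaultdict

# Hypothetical alerts from three separate security tools.
alerts = [
    {"source": "email", "entity": "j.doe", "severity": 3},
    {"source": "endpoint", "entity": "j.doe", "severity": 5},
    {"source": "network", "entity": "j.doe", "severity": 4},
    {"source": "endpoint", "entity": "m.lee", "severity": 6},
    {"source": "email", "entity": "a.kim", "severity": 2},
]

def prioritize(alerts):
    """Group alerts by entity and rank entities by a simple combined score."""
    grouped = defaultdict(list)
    for alert in alerts:
        grouped[alert["entity"]].append(alert)

    ranked = []
    for entity, items in grouped.items():
        sources = {a["source"] for a in items}
        # Assumed scoring rule: total severity, weighted by how many
        # distinct sources reported on the same entity.
        score = sum(a["severity"] for a in items) * len(sources)
        ranked.append((entity, score, sorted(sources)))

    # Highest score first, so analysts see corroborated activity at the top.
    ranked.sort(key=lambda r: r[1], reverse=True)
    return ranked

ranking = prioritize(alerts)
for entity, score, sources in ranking:
    print(f"{entity}: score {score} from {', '.join(sources)}")
```

Here `j.doe` ranks first because email, endpoint, and network tools all raised alerts, even though the single most severe alert belongs to `m.lee`. Corroboration across sources is one plausible signal among many that such a system might weigh.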

Despite the advancements, traditional security measures, such as robust firewalls, network segmentation, and phishing-resistant multi-factor authentication, remain essential. Multi-factor authentication alone can block over 90 percent of unauthorized access attempts and should be considered a fundamental component of any security strategy.

As organizations navigate this evolving landscape, preparation becomes paramount. Security teams must ensure that their incident response plans are tested and refined continually. AI tools can simulate attacks, pinpoint weaknesses, and bolster response protocols well in advance of a real cyber threat.

The contest between attackers and defenders has transitioned to a new battleground: AI versus AI. Cybercriminals have already embraced this shift, and organizations must follow suit to maintain their defensive edge. While AI may not eliminate cyber risks, it will be instrumental in determining which organizations can respond quickly, adapt intelligently, and effectively defend against increasingly automated threats. Protecting data, customers, and reputations now hinges on an organization’s ability to harness AI in its cybersecurity arsenal.
