Researchers from Google and the University of Chicago report that recent gains in the reasoning abilities of large language models stem from a more complex internal interaction structure, not merely from a larger number of computational steps. The finding comes as reasoning models such as OpenAI’s o-series and DeepSeek-R1 have begun to outperform traditional instruction-tuned models on demanding tasks such as mathematics and logical reasoning.

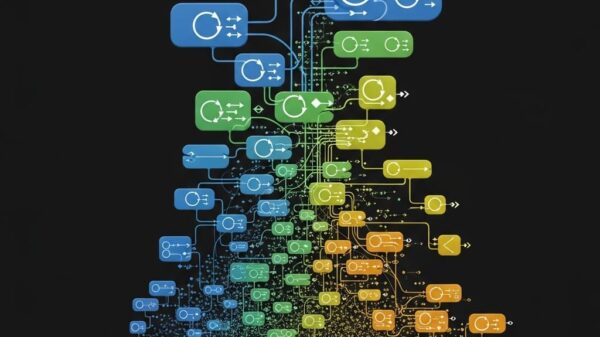

The research, published in a recent paper, explores what the authors describe as a “society of thought” within these advanced models. Rather than simply performing more computation, the models internally simulate dialogues akin to those of a debate team, in which distinct viewpoints are voiced, challenged, and corrected before the model settles on a more accurate solution. The authors liken this to the way human intelligence evolved through social interaction and suggest that similar processes may be at play in artificial intelligence.

The findings indicate that models like DeepSeek-R1 and QwQ-32B exhibit significantly greater perspective diversity and richer conversational behaviors than baseline models and models that received only instruction tuning. The researchers identified four key types of conversational behavior that these models employ while reasoning: question-answer behavior, perspective switching, viewpoint conflict, and viewpoint reconciliation. According to the authors, this multi-agent-like structure broadens the models’ cognitive strategies and contributes to their superior performance on reasoning tasks.
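To make the four behavior categories concrete, here is a minimal Python sketch of how annotations for a single reasoning trace might be tallied. The label strings, the `count_behaviors` helper, and the sample annotations are hypothetical illustrations, not taken from the paper, whose annotation pipeline may differ.

```python
from collections import Counter

# The four conversational behaviors named in the article.
# The label strings themselves are illustrative assumptions.
BEHAVIORS = (
    "question_answer",
    "perspective_switch",
    "viewpoint_conflict",
    "viewpoint_reconciliation",
)


def count_behaviors(labels):
    """Count how often each behavior appears in one reasoning trace.

    `labels` is a list of behavior labels, for example produced by a
    human annotator or a judge model. Unknown labels are ignored.
    """
    counts = Counter(label for label in labels if label in BEHAVIORS)
    # Report every behavior, including those that never occurred.
    return {behavior: counts.get(behavior, 0) for behavior in BEHAVIORS}


# Hypothetical annotations for a single trace (made-up data).
example_labels = [
    "question_answer",
    "perspective_switch",
    "viewpoint_conflict",
    "viewpoint_reconciliation",
    "question_answer",
]
example_counts = count_behaviors(example_labels)
```

Comparing such per-trace counts between a reasoning model and an instruction-tuned model is one simple way to express the "richer conversational behaviors" the article describes.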

Controlled reinforcement learning experiments showed that models spontaneously increase their conversational behaviors even when only reasoning accuracy is rewarded. When the researchers introduced conversational scaffolding during training, reasoning ability improved significantly compared with both untuned baseline models and models fine-tuned on monologue-style reasoning. The results underline the importance of social dynamics in cognitive processes, and the researchers propose a new direction: harnessing “collective wisdom” through the systematic organization of agents.
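The phrase "only reasoning accuracy is rewarded" can be illustrated with a small Python sketch of an accuracy-only reward. The function name and the exact-match comparison are assumptions made for illustration; the article does not describe how the study scored answers.

```python
def accuracy_only_reward(model_answer: str, reference_answer: str) -> float:
    """Return 1.0 for a correct final answer and 0.0 otherwise.

    Assumption: correctness is checked by exact string match after
    trimming whitespace. Nothing here inspects the reasoning trace, so
    conversational behavior earns no direct reward.
    """
    is_correct = model_answer.strip() == reference_answer.strip()
    return 1.0 if is_correct else 0.0
```

Under a reward like this, any rise in conversational behavior during training has to come from its usefulness for reaching correct answers, which is the point the experiment makes.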

The study also examines the socio-emotional roles that appear in reasoning trajectories, using the Bales Interaction Process Analysis framework to categorize interaction types. The roles fall into categories such as information-giving, information-seeking, and positive and negative emotional expression. Models that drew on these roles in a more balanced way demonstrated superior reasoning capabilities, in sharp contrast to instruction-tuned models, whose reasoning was monologue-like and showed little interactive engagement.
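One way to put a number on "balance" across role categories is normalized Shannon entropy, sketched below in Python. This metric is an assumption chosen for illustration; the article does not say how the study measured balance, and the `role_balance` helper and the sample counts are hypothetical.

```python
import math


def role_balance(role_counts):
    """Return the normalized Shannon entropy of role counts, in [0, 1].

    A value near 1 means the roles are used about equally; a value of 0
    means a single role dominates completely (a monologue-like trace).
    """
    total = sum(role_counts.values())
    if total == 0 or len(role_counts) < 2:
        return 0.0
    entropy = 0.0
    for count in role_counts.values():
        if count > 0:
            p = count / total
            entropy -= p * math.log(p)
    # Divide by the maximum possible entropy for this many categories.
    return entropy / math.log(len(role_counts))


# Made-up counts for two hypothetical traces.
balanced_trace = {
    "information_giving": 5,
    "information_seeking": 5,
    "positive_emotion": 5,
    "negative_emotion": 5,
}
monologue_trace = {
    "information_giving": 20,
    "information_seeking": 0,
    "positive_emotion": 0,
    "negative_emotion": 0,
}
```

Here `role_balance(balanced_trace)` comes out at the top of the scale and `role_balance(monologue_trace)` at the bottom, mirroring the contrast the article draws between reasoning models and instruction-tuned models.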

Technical Insights

Using the Gemini-2.5-Pro model to assess conversational behaviors, the authors find that models like DeepSeek-R1 not only produce more question-answer sequences but also actively switch perspectives and reconcile conflicting viewpoints during complex reasoning tasks. More traditional models, by contrast, tend to present information in a linear, one-dimensional manner, which limits their cognitive flexibility.

The conversational characteristics of these models were particularly evident on difficult benchmarks, such as graduate-level scientific reasoning and advanced mathematical problems. There the models showed a higher frequency of conversational behaviors, expressed through mechanisms such as result verification and path backtracking, which allowed them to explore solution spaces more thoroughly. The authors also report that positively steering conversational features can significantly boost task accuracy, nearly doubling performance in some instances.

Overall, the findings suggest that conversational features are a core part of what makes reasoning models better at solving complex problems. By simulating dialogue among diverse perspectives, these systems achieve higher reasoning accuracy and take a more nuanced approach to problem-solving, one that echoes the social dimensions of human intelligence. The research may pave the way for AI systems that deliberately leverage collective intelligence to improve their reasoning.

See also Dark Fleet Tankers in Mediterranean Raise Alarm Over Marine Safety and Environmental Risks

Dark Fleet Tankers in Mediterranean Raise Alarm Over Marine Safety and Environmental Risks Aligning CEOs and IT Leaders is Crucial to Avoid AI Project Failures in Businesses

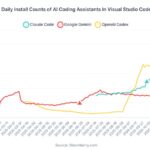

Aligning CEOs and IT Leaders is Crucial to Avoid AI Project Failures in Businesses Anthropic’s Claude Code Achieves $2B ARR, Surges in Developer Adoption and Market Share

Anthropic’s Claude Code Achieves $2B ARR, Surges in Developer Adoption and Market Share Tech Earnings Awaited: Big Players Face Pressure to Show AI Investment Returns

Tech Earnings Awaited: Big Players Face Pressure to Show AI Investment Returns Germany”s National Team Prepares for World Cup Qualifiers with Disco Atmosphere

Germany”s National Team Prepares for World Cup Qualifiers with Disco Atmosphere