Gartner projects that by 2028, half of all organizations will adopt a **zero-trust posture** for data governance in response to the growing volume of unverified **AI-generated data**. As machine-created data becomes harder to distinguish from human-created data, organizations will need stronger authentication and verification measures to safeguard business outcomes.

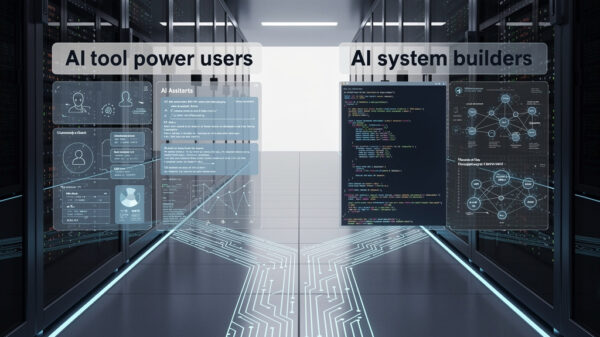

As businesses expand their use of **Generative AI (GenAI)**, the volume of AI-generated content is expected to surge. According to Gartner’s **2026 CIO and Technology Executive Survey**, 84% of respondents anticipate an increase in funding for GenAI within the next year. As more AI-generated material enters common training datasets, future language models may increasingly learn from the outputs of earlier models. This could lead to **model collapse**, in which the reliability and veracity of model responses deteriorate.

In response, Gartner indicates that requirements for verifying or labeling AI-generated data may tighten in certain regions, though the methods for doing so will likely differ across geographies. Organizations will need to strengthen their ability to identify, categorize, and manage AI-generated data, drawing on metadata management and information governance skills.

To mitigate the risks of unverified AI-generated data, Gartner recommends several measures: designating clear ownership for AI governance, forming cross-functional teams that bring together cybersecurity and data stakeholders to evaluate risks, and updating existing governance policies to address emerging AI-related challenges. Organizations should also adopt active metadata practices that flag outdated or unverified data and trigger recertification.
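As an illustration of what an active metadata check might look like, the following is a minimal Python sketch, not a Gartner-specified method. The `DatasetRecord` fields, the reason labels, and the 180-day recertification window are all hypothetical choices made for this example. In keeping with a zero-trust posture, any record that is not explicitly verified is flagged, regardless of its stated origin.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Optional


@dataclass
class DatasetRecord:
    """Hypothetical metadata kept for each dataset in a catalog."""
    name: str
    origin: str                      # e.g. "human", "ai_generated", "unknown"
    verified: bool                   # has provenance been checked?
    last_certified: Optional[date]   # None if never certified


def recertification_reasons(
    record: DatasetRecord, today: date, max_age_days: int = 180
) -> List[str]:
    """Return the reasons (if any) a record should be recertified.

    The 180-day default is an assumed policy value, not a standard.
    """
    reasons = []
    # Zero-trust: anything not explicitly verified is flagged.
    if not record.verified:
        reasons.append("unverified")
    if record.last_certified is None:
        reasons.append("never_certified")
    elif today - record.last_certified > timedelta(days=max_age_days):
        reasons.append("outdated")
    return reasons


def build_recertification_queue(
    records: List[DatasetRecord], today: date, max_age_days: int = 180
) -> Dict[str, List[str]]:
    """Map dataset name -> reasons, for records that need attention."""
    queue = {}
    for record in records:
        reasons = recertification_reasons(record, today, max_age_days)
        if reasons:
            queue[record.name] = reasons
    return queue


if __name__ == "__main__":
    today = date(2026, 1, 1)
    catalog = [
        DatasetRecord("sales_2025", "human", True, date(2025, 12, 1)),
        DatasetRecord("chat_summaries", "ai_generated", False, None),
        DatasetRecord("legacy_crm", "human", True, date(2025, 1, 1)),
    ]
    for name, reasons in build_recertification_queue(catalog, today).items():
        print(name, reasons)
```

In practice, the resulting queue would feed whatever recertification workflow the organization already runs; the sketch only covers the flagging step.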

As data governance evolves alongside AI, organizations need robust frameworks for handling unverified data. A zero-trust approach is intended both to protect data integrity and to maintain the trust of stakeholders who rely on accurate information. As more organizations adopt GenAI in the coming years, the discussion around data governance will likely intensify, underscoring the need for effective oversight.
