Compliance teams at banks and asset managers in Singapore, Malaysia, and Australia remain heavily reliant on manual processes, despite a growing interest in artificial intelligence (AI). This was revealed in a joint survey conducted by Fenergo and Risk.net, which assessed the views of 110 risk, financial crime, and compliance professionals.

The survey found that 66% of respondents described their compliance workload as “heavy” and predominantly manual. More than half (54%) reported periodic backlogs in know-your-customer (KYC) reviews, while 45% cited high false-positive rates in areas such as screening and transaction monitoring.

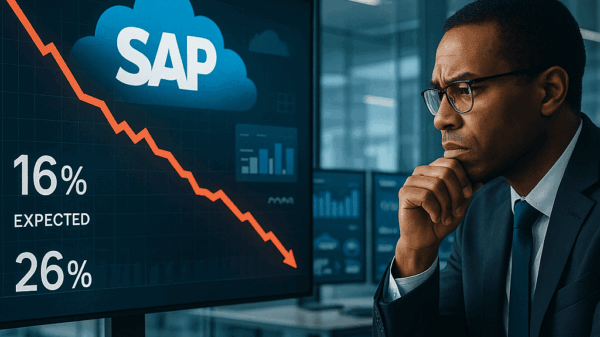

Despite interest in AI, the research highlighted a notable gap between exploration and actual deployment within compliance functions. While 54% of respondents are exploring AI use cases, only 34% have initiated implementation, and 13% are not using AI at all.

This reliance on manual processes underscores significant workload constraints in critical areas such as customer reviews and ongoing monitoring. Respondents linked these pressures to backlogs and to the need for human intervention in operational processes that still depend on staff judgment. Bryan Keasberry, the APAC Head of Market Development at Fenergo, noted that the operating environment in the region contributes to these complexities, citing varying languages and regulations across markets that affect data consistency.

“Compliance in APAC continues to feel unusually manual, largely due to the region’s linguistic and regulatory complexity,” said Keasberry. “Institutions are operating across fragmented regulatory regimes, diverse languages, and complex data environments. That makes data consistency and quality difficult to achieve, and without those foundations, AI adoption inevitably slows.”

Respondents identified operational efficiency as the primary driver for AI investment, surpassing cost reduction and task automation. However, the survey revealed that data quality stands as the foremost obstacle to AI adoption, followed closely by integration with legacy systems. Regulatory compliance concerns were also raised as significant barriers.

The findings suggest that many institutions perceive foundational work as necessary before a broader deployment of AI can take place. Data inconsistencies and system integration challenges can impede the performance of AI models and hinder the operationalization of results in production environments.

The survey also uncovered limited familiarity with agentic AI among compliance teams, with only 6% claiming to be “very familiar” with the concept. A further 53% described themselves as “somewhat familiar,” while 29% stated they were “not familiar at all.” This cautious approach extended to overall attitudes toward automation, as 66% of respondents expressed a preference for partial automation, with no participants selecting full automation.

“The challenges faced by firms today reflect the realities of legacy operating models and the need to balance innovation with regulatory accountability,” Keasberry commented. “For organizations at earlier stages of AI adoption, keeping human oversight in the loop remains essential. Regulators expect AI systems to be explainable and well governed. The findings suggest institutions want to demonstrate control over how AI models operate and how decisions are reached, particularly in high-risk areas such as KYC, AML, and fraud.”

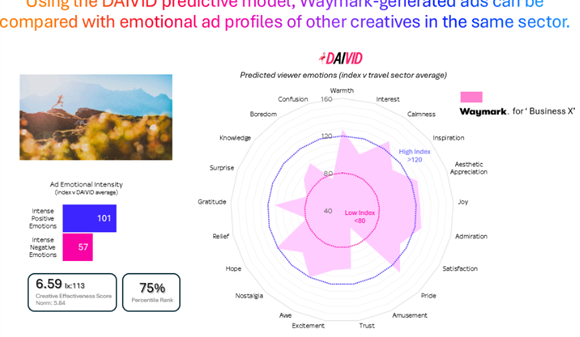

Despite a cautious stance towards full automation, the survey indicated an increasing interest in advanced AI applications. Notably, 44% of respondents were considering agentic AI, with transaction monitoring, fraud detection, and sanctions screening identified as key areas of focus. These results imply that institutions see potential for AI systems to enhance investigative and monitoring workflows, although adoption decisions still hinge on governance models, explainability requirements, and the ability to demonstrate accountability over AI-generated outputs.

Moreover, the research framed AI adoption within the context of heightened regulatory scrutiny and expectations regarding controls. Firms in the region must navigate various regulatory frameworks and supervisory approaches. Respondents consistently emphasized the importance of explainability, governance, and oversight as institutions assess AI systems for compliance applications.

“What this means for markets such as Singapore, Malaysia, and Australia, is that compliance transformation cannot be rushed,” Keasberry stated. “Regulators are supportive of innovation, but expectations around governance, explainability, and accountability are rising. Institutions need to strengthen data foundations and embed controlled, human-led automation before scaling more advanced AI capabilities as confidence and regulatory clarity evolve.”

The next phase of compliance transformation in the APAC region will depend on steady advancements in data quality, platform integration, and trust in automation as institutions strive for scalable, regulator-ready AI deployment.
