As organizations increasingly adopt Generative AI (GenAI) technologies to enhance efficiency, they face a new array of compliance risks that could undermine these benefits. Risks such as incorrect outputs, data privacy issues, and biases in decision-making are complicating the landscape for compliance leaders, necessitating a proactive approach to governance. The challenge lies in fostering innovation while ensuring that the deployment of these technologies adheres to regulatory standards and ethical norms.

Among the most pressing concerns are “hallucinations,” in which AI generates inaccurate but authoritative-sounding outputs, and “Shadow AI,” in which employees turn to unauthorized AI tools because those tools meet business needs faster than approved alternatives. This unauthorized use, often driven by convenience rather than malice, requires organizations to move beyond mere prohibition and develop practical, risk-based strategies to manage it.

Compliance professionals are tasked with enabling responsible GenAI adoption without stifling innovation. A practical governance playbook is essential, starting with an understanding of how GenAI technologies are utilized throughout the organization. Establishing a comprehensive inventory of GenAI use cases allows compliance teams to apply oversight proportionate to the associated risks, thereby focusing resources where they are most critical.

Before deploying any GenAI application, organizations are advised to register it with the compliance team. This registration should detail the business purpose, the data types involved, and the specific model and version used, thereby establishing a clear baseline for oversight. This approach enables the identification of higher-risk applications early, allowing compliance functions to focus their efforts where they are most needed.
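As a rough illustration, a registration record and a minimal in-memory registry might look like the sketch below. The class names, field names, and sample values are assumptions made for this example; the only elements taken from the text are the business purpose, the data types involved, and the model and version.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class GenAIRegistration:
    """One registry entry. All names here are illustrative assumptions."""
    use_case: str                 # short identifier for the application
    business_purpose: str         # why the application exists
    data_types: Tuple[str, ...]   # categories of data the application touches
    model: str                    # model name
    model_version: str            # exact version deployed


class Registry:
    """Minimal in-memory registry keyed by use case."""

    def __init__(self) -> None:
        self._entries: Dict[str, GenAIRegistration] = {}

    def register(self, entry: GenAIRegistration) -> None:
        # Reject incomplete registrations so the oversight baseline stays usable.
        missing = [
            name
            for name in ("business_purpose", "model", "model_version")
            if not getattr(entry, name).strip()
        ]
        if not entry.data_types:
            missing.append("data_types")
        if missing:
            raise ValueError(f"incomplete registration, missing: {missing}")
        self._entries[entry.use_case] = entry

    def involving(self, data_type: str) -> List[GenAIRegistration]:
        """Return every registered application that touches the given data type."""
        return [e for e in self._entries.values() if data_type in e.data_types]


registry = Registry()
registry.register(
    GenAIRegistration(
        use_case="support-drafts",
        business_purpose="Draft replies to customer tickets",
        data_types=("customer_pii",),
        model="example-model",      # placeholder, not a real product
        model_version="1.0",
    )
)
```

A query such as `registry.involving("customer_pii")` then gives the compliance team a quick list of applications that deserve closer attention.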

A tiered risk classification system can further refine oversight efforts. For instance, Tier 1 might encompass low-risk applications, such as using GenAI in internal brainstorming to draft ideas for training presentations. Tier 2 could involve moderate-risk uses where AI is employed for internal research, with human review before the results are used. Tier 3 would cover high-risk applications, such as customer-facing outputs that require documented human approval before release. This classification allows compliance teams to concentrate on material risks while facilitating innovation in lower-risk areas.
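The tiers above can be sketched as a small classification function. The two input flags and the Tier 1 control are assumptions chosen for illustration; a real classification would likely weigh more factors, such as the data types recorded at registration.

```python
from enum import IntEnum


class Tier(IntEnum):
    LOW = 1        # e.g. internal brainstorming
    MODERATE = 2   # e.g. internal research
    HIGH = 3       # e.g. customer-facing outputs


# Control attached to each tier. Tiers 2 and 3 follow the text above;
# the Tier 1 entry is an assumption, since the text names no control for it.
REQUIRED_CONTROL = {
    Tier.LOW: "acceptable-use policy only (assumed)",
    Tier.MODERATE: "human review before results are used",
    Tier.HIGH: "documented human approval before release",
}


def classify(customer_facing: bool, informs_internal_work: bool) -> Tier:
    """Map two hypothetical yes/no questions onto the three tiers."""
    if customer_facing:
        return Tier.HIGH
    if informs_internal_work:
        return Tier.MODERATE
    return Tier.LOW


tier = classify(customer_facing=False, informs_internal_work=True)
print(tier.name, "->", REQUIRED_CONTROL[tier])
```

Checking the customer-facing flag first means an application that is both customer-facing and used internally always lands in the strictest tier.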

Even with formal registries and tiered models in place, Shadow AI remains a significant hurdle. Blocking public tools without offering legitimate alternatives may push employees toward unauthorized solutions, exacerbating the issue. Organizations should therefore provide secure, enterprise-approved GenAI platforms that meet data protection and compliance standards, and pair them with technical guardrails that restrict access to unauthorized AI tools and with acceptable use policies that spell out how data may be handled.
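One possible form of such a guardrail is a host allowlist applied to outbound requests. The sketch below is only an assumption about how that check could look; both host names are placeholders, and in practice the check would typically live in a web proxy or gateway rather than in application code.

```python
from urllib.parse import urlparse

# Placeholder host names, not real services.
APPROVED_AI_HOSTS = frozenset({"genai.example.internal"})
KNOWN_AI_HOSTS = APPROVED_AI_HOSTS | frozenset({"chat.example.com"})


def egress_decision(url: str) -> str:
    """Return 'allow', 'block', or 'not_ai' for an outbound request URL."""
    host = urlparse(url).hostname or ""
    if host in APPROVED_AI_HOSTS:
        return "allow"    # enterprise-approved platform
    if host in KNOWN_AI_HOSTS:
        return "block"    # known AI tool that has not been approved
    return "not_ai"       # outside the scope of this guardrail


print(egress_decision("https://chat.example.com/new"))
```

A blocked request is also a natural place to point the employee to the approved platform, so the guardrail does not leave them without an alternative.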

Education plays a crucial role in addressing compliance risks associated with GenAI. Continuous, role-based training should equip employees with knowledge about the risks and proper data management practices, thus reinforcing compliance expectations. Moreover, enforcing clear consequences for policy violations is essential. Low-risk infractions might be addressed through targeted coaching, while repeated or high-risk violations should trigger formal investigations and disciplinary actions aligned with existing data protection policies.

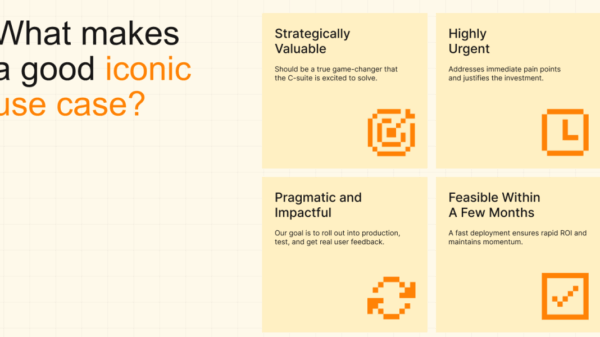

Balancing compliance with leadership pressure is another challenge. The demand for rapid deployment of AI technologies often casts compliance as a bottleneck, and the resulting pressure to bypass review can jeopardize regulatory adherence. To counter this perception, compliance teams can engage early in the adoption process, shaping initiatives that allow for both speed and control. By providing clear guidance for low-risk use cases and pre-approving certain applications, organizations can streamline the deployment process while maintaining necessary oversight.

As regulatory frameworks surrounding AI continue to evolve, organizations must proactively create internal guardrails grounded in existing compliance frameworks. In the absence of comprehensive regulations, strong documentation and oversight become crucial. For higher-risk applications, requirements such as logging AI outputs and maintaining explicit human review processes are essential to ensure accountability.
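A minimal sketch of output logging with an explicit review gate is shown below. The function name, the record fields, and the rule that an output is released only when a reviewer is named are all assumptions for illustration; a production system would write to durable, access-controlled storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone
from typing import List, Optional


def log_ai_output(
    log: List[str],
    use_case: str,
    model_version: str,
    output: str,
    reviewer: Optional[str],
) -> bool:
    """Append one audit record as a JSON line and report whether release is allowed."""
    released = reviewer is not None   # no named reviewer, no release
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "use_case": use_case,
        "model_version": model_version,
        "output": output,
        "reviewer": reviewer,
        "released": released,
    }
    log.append(json.dumps(record, sort_keys=True))
    return released


audit_log: List[str] = []
log_ai_output(audit_log, "support-drafts", "1.0", "Draft reply text", reviewer=None)
log_ai_output(audit_log, "support-drafts", "1.0", "Draft reply text", reviewer="j.doe")
```

Recording the unreviewed attempt as well as the approved one keeps the trail complete, which matters when an organization later has to show who approved what.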

Ultimately, embedding compliance throughout the lifecycle of AI initiatives is vital for sustainable governance. By incorporating compliance considerations early in the design and deployment phases, organizations can better manage the risks associated with AI technologies. As GenAI matures beyond a phase of experimentation, the expectation for organizations to demonstrate transparency and control will only intensify. By adopting a structured approach rooted in risk-based classification and clear accountability, compliance leaders can facilitate responsible AI adoption while preparing for potential regulatory scrutiny.
