Singapore unveiled a groundbreaking governance framework for autonomous artificial intelligence on January 22, positioning itself as a leader in public sector AI regulation. The Infocomm Media Development Authority (IMDA) introduced the Model AI Governance Framework for Agentic AI, presenting it as the first comprehensive guide for AI systems that operate independently rather than simply assist users.

While regulatory bodies in Europe and the United States grapple with fragmented approaches, many developing nations find themselves in a state of inertia, waiting for an ideal regulatory structure before taking action. Singapore’s new framework offers a robust alternative: a model that can evolve alongside technology, encouraging proactive governance.

The framework addresses a common challenge faced by technology agencies worldwide: the rapid pace of technological advancement often outstrips policy development cycles. By the time regulations are drafted and implemented, the technology in question may have undergone several iterations. Singapore’s approach prioritizes an iterative governance architecture, promoting adaptability over perfection. Agencies can implement an initial version of the framework within six months, assess its performance, and then update it promptly based on real-world deployment.

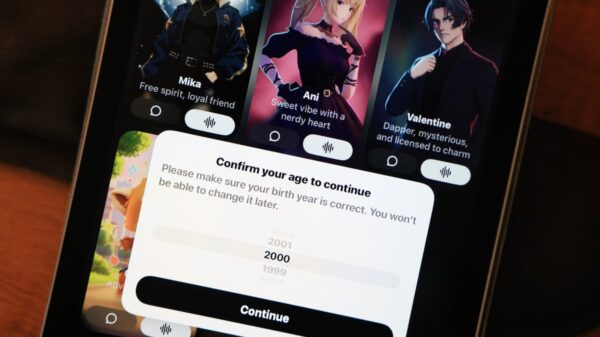

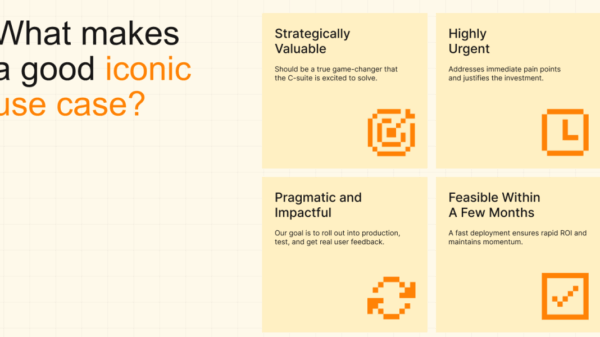

This iterative model suits fast-evolving AI technologies better than traditional comprehensive legislation, which can quickly become obsolete. What sets Singapore’s framework apart is its detailed and actionable guidelines. It provides clear criteria for assessing risks associated with AI systems, focusing on two key dimensions: the system’s action space (what tools and data it can access) and its degree of autonomy (how independently it can operate).
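The two-dimension assessment can be illustrated with a small sketch. The three-level scale, the multiplication rule, and the tier thresholds below are illustrative assumptions, not values taken from the IMDA framework; an agency would substitute its own criteria.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point scale used for both dimensions."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AgentProfile:
    name: str
    action_space: Level  # how much the agent can reach (tools, data, systems)
    autonomy: Level      # how independently it acts without human sign-off


def risk_tier(profile: AgentProfile) -> str:
    """Combine the two dimensions into a coarse tier.

    Assumption: risk grows with the product of the two scores (1 to 9),
    and the cut-offs at 3 and 6 are arbitrary illustrative choices.
    """
    score = int(profile.action_space) * int(profile.autonomy)
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


if __name__ == "__main__":
    faq_bot = AgentProfile("faq-bot", Level.LOW, Level.LOW)
    case_agent = AgentProfile("case-agent", Level.HIGH, Level.MEDIUM)
    for agent in (faq_bot, case_agent):
        print(agent.name, risk_tier(agent))
```

Under this sketch, an agent that can only read a public FAQ and needs sign-off for everything lands in the low tier, while one with broad system access and moderate independence lands in the high tier.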

Moreover, the framework tackles the challenge of automation bias, where human reviewers come to over-trust an increasingly capable AI system and oversight weakens as a result. It outlines practical steps for defining approval checkpoints and for auditing those checkpoints to ensure they remain effective. The framework also emphasizes technical controls with specific benchmarks for testing systems on task execution accuracy and compliance with established policies, offering valuable guidance for agencies that may lack deep AI expertise.
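As a rough illustration of an approval checkpoint with an audit trail, consider the sketch below. The `Checkpoint` class, its callbacks, and the idea of watching the approval rate as a possible sign of rubber-stamping are all hypothetical choices made for this example, not mechanisms prescribed by the framework.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Checkpoint:
    """Gate agent actions behind a human decision and record every outcome."""
    needs_approval: Callable[[str], bool]  # policy: which actions need sign-off
    approver: Callable[[str], bool]        # stands in for the human reviewer
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def run(self, action: str, execute: Callable[[], str]) -> Optional[str]:
        """Execute the action if permitted; return None when it is rejected."""
        if self.needs_approval(action):
            approved = self.approver(action)
            self.audit_log.append((action, "approved" if approved else "rejected"))
            if not approved:
                return None
        else:
            self.audit_log.append((action, "auto"))
        return execute()

    def approval_rate(self) -> float:
        """Share of reviewed actions that were approved (0.0 if none reviewed)."""
        reviewed = [decision for _, decision in self.audit_log if decision != "auto"]
        if not reviewed:
            return 0.0
        return reviewed.count("approved") / len(reviewed)


if __name__ == "__main__":
    cp = Checkpoint(
        needs_approval=lambda a: a.startswith("pay:"),
        approver=lambda a: a != "pay:large",  # placeholder for a real review step
    )
    cp.run("read:record", lambda: "ok")
    cp.run("pay:small", lambda: "paid")
    cp.run("pay:large", lambda: "paid")
    print(cp.audit_log, cp.approval_rate())
```

An auditor could review the log periodically; an approval rate that stays close to 100 percent may be worth investigating as a sign that reviewers are approving by default.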

For smaller nations and developing countries, the framework presents an opportunity to redesign their AI governance from the ground up. Recognizing that many governments may lack the capacity for continuous monitoring, the framework encourages the design of AI agents that require less oversight. Narrower action spaces and built-in rollback mechanisms should be seen not as compromises but as design choices that enhance system reliability.
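One way to picture a narrow action space combined with rollback is an agent that may only perform allow-listed actions, each registered together with its undo step. The `ReversibleAgent` class and the action names below are hypothetical and serve only as a minimal sketch of the idea.

```python
from typing import Callable, List, Set


class ReversibleAgent:
    """Agent wrapper that permits only allow-listed, reversible actions."""

    def __init__(self, allowed: Set[str]) -> None:
        self.allowed = allowed
        self._undo_stack: List[Callable[[], None]] = []

    def act(self, name: str, do: Callable[[], None], undo: Callable[[], None]) -> None:
        """Run an action only if it is allow-listed, and remember how to undo it."""
        if name not in self.allowed:
            raise PermissionError(f"action '{name}' is outside the agent's action space")
        do()
        self._undo_stack.append(undo)

    def rollback(self) -> None:
        """Undo all recorded actions, most recent first."""
        while self._undo_stack:
            self._undo_stack.pop()()


if __name__ == "__main__":
    records = {}
    agent = ReversibleAgent(allowed={"set_status"})
    agent.act(
        "set_status",
        do=lambda: records.update(status="approved"),
        undo=lambda: records.pop("status"),
    )
    print(records)   # state after the action
    agent.rollback()
    print(records)   # state restored after rollback
```

Because every permitted action carries its own undo step, a team with limited monitoring capacity can reverse an agent's work after the fact rather than having to watch it continuously.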

Singapore is already demonstrating the viability of this model through initiatives such as the Agentic AI Primer, published by GovTech Singapore in April 2025. The government is experimenting with multi-agent setups, where AI agents are modeled as government officials engaged in specialized tasks, beginning with internal processes before extending to public-facing services. Through strategic partnerships, including collaboration with Google Cloud, Singapore’s agencies have begun testing these systems in isolated environments to ensure reliability before full-scale deployment.

This experimental approach to governance allows Singapore to reverse the typical sequence of AI deployment, where the private sector often leads and government follows with regulation. By prioritizing public sector implementation, Singapore aims to cultivate a pool of practitioners who truly understand AI’s capabilities and limitations, thus transforming regulatory discussions from theoretical to practical matters.

As nations invest in building digital infrastructure, they have a strategic window to integrate agentic AI governance from the outset. The cost of embedding good governance during the design phase is significantly lower than retrofitting systems after deployment. For governments, trust is critical infrastructure; a poorly designed AI system can jeopardize years of digital transformation efforts. The framework’s focus on human accountability, rigorous testing, and transparency can help foster public confidence in AI systems.

Singapore’s Model AI Governance Framework aligns with international reference points such as the OECD AI Principles and the work of the Global Partnership on AI, offering competitive advantages for developing countries seeking regional market access. As the IMDA actively solicits feedback and case studies, it encourages a form of governance that evolves through shared learning rather than through centralized authority.

For nations ready to embrace this framework, the path is clear: adapt the guidelines within months, deploy in controlled environments within a year, and expand to external services within two years. The urgent task for governments is to reject the false dichotomy of speed versus responsibility in AI governance. Agentic AI’s potential to revolutionize public service delivery is undeniable; the question is whether governments will keep pace with its rapid evolution. Singapore’s framework demonstrates that such agility is possible, and the opportunity for meaningful change is now.
