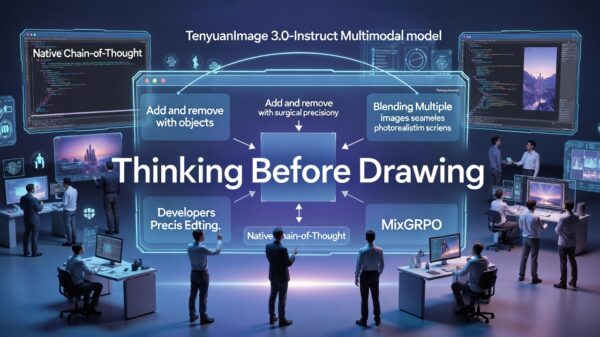

By 2026, artificial intelligence has transitioned from a nascent concept to a critical component of organizational operations, fundamentally reshaping how companies communicate, analyze data, serve customers, and make decisions. Email platforms now summarize conversations, customer relationship management systems forecast outcomes, and accounting software identifies anomalies, while generative tools can draft a range of content in seconds. However, as organizations increasingly integrate AI, many are discovering that while implementing the technology is straightforward, governing it presents significant challenges.

Managed service providers that support AI deployments report that the most prevalent failures stem from a lack of strategic foresight rather than from technical deficiencies. AI is frequently introduced without a clear understanding of organizational readiness, ownership, or long-term accountability, so tools proliferate faster than the guardrails needed to govern them. The result is often a predictable cycle: initial enthusiasm, followed by confusion, heightened risk, and stalled progress.

To build an effective AI strategy in 2026, organizations must follow a methodical path that starts with assessing readiness, proceeds through responsible implementation, and culminates in sustained governance. A common misstep is to assume that AI strategy should begin with identifying use cases, that is, determining which departments could benefit or which processes could be automated. In reality, this approach skips a critical foundational step: evaluating whether the organization is genuinely prepared for AI integration.

True AI readiness encompasses not just technical capabilities but also data discipline, security posture, decision ownership, and cultural alignment. Organizations that advance without establishing this groundwork often uncover issues only after AI has become entrenched in their workflows. Common gaps in readiness include unclear data ownership, inconsistent access controls, unmanaged third-party AI features, and a lack of accountability for decisions driven by AI.

This is why assessing AI readiness should serve as the initial step in any serious AI strategy. A structured framework can help organizations pinpoint where AI is already in use, what data it interacts with, and which risks need addressing before scaling further. Without this clarity, AI strategy devolves into guesswork.

Responsible Use as a Competitive Advantage

AI readiness is often misinterpreted as a purely technical checklist; it is, in fact, a multifaceted business capability encompassing people, processes, and technology. At a minimum, readiness should include a clear understanding of where AI is integrated into existing platforms, defined ownership for AI decisions and outcomes, data governance practices to mitigate exposure, security controls aligned with AI data flows, and leadership consensus on acceptable risks and ethical boundaries.

While organizations lacking any of these elements may still implement AI, they do so with increasing vulnerability. Readiness does not necessitate perfection; rather, it requires awareness. When leaders understand their current maturity, they can make informed decisions about where to invest and where to exercise caution. Once readiness is firmly established, organizations can shift from abstract goals to intentional use cases.
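The minimum readiness elements listed above can be recorded as a simple checklist that reports gaps rather than a pass/fail verdict, in keeping with the point that readiness requires awareness, not perfection. The following is a minimal sketch only: the class name, field names, and yes/no scoring are illustrative assumptions, not part of any established framework.

```python
from dataclasses import dataclass, fields


@dataclass
class ReadinessAssessment:
    """One yes/no answer per minimum readiness element (names are hypothetical)."""
    ai_usage_inventoried: bool        # where AI is integrated into existing platforms
    decision_ownership_defined: bool  # named owner for AI decisions and outcomes
    data_governance_in_place: bool    # practices that mitigate data exposure
    security_controls_aligned: bool   # controls aligned with AI data flows
    leadership_risk_consensus: bool   # agreed acceptable risks and ethical boundaries


def readiness_gaps(assessment: ReadinessAssessment) -> list[str]:
    """Return the names of elements not yet in place, in declaration order."""
    return [f.name for f in fields(assessment) if not getattr(assessment, f.name)]


# Example answers for a hypothetical organization.
example = ReadinessAssessment(
    ai_usage_inventoried=True,
    decision_ownership_defined=False,
    data_governance_in_place=True,
    security_controls_aligned=False,
    leadership_risk_consensus=True,
)

print(readiness_gaps(example))
```

A list of named gaps gives leaders something concrete to weigh when deciding where to invest and where to exercise caution.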

Successful AI strategies focus on areas that meaningfully support business outcomes without introducing disproportionate risk. Prioritized areas typically include high-volume, low-judgment tasks, decision support rather than autonomous decision-making, internal efficiency before external impacts, and pilot projects with clearly defined success metrics. This targeted approach not only yields early wins but also fosters trust and provides real operational insights into AI’s performance within specific organizational contexts.
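One way to make these prioritization criteria concrete is to score candidate use cases against them and rank the results. The sketch below is an assumption-laden illustration: the `UseCase` fields, the equal weighting of criteria, and the two example candidates are all hypothetical, and a real organization would likely weight the criteria differently.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """A candidate AI use case described by the criteria in the text (names are hypothetical)."""
    name: str
    high_volume: bool            # task occurs frequently
    low_judgment: bool           # task needs little human judgment
    decision_support_only: bool  # a human makes the final decision
    internal_only: bool          # no direct external or customer impact
    has_success_metrics: bool    # pilot has clearly defined success metrics


def priority_score(use_case: UseCase) -> int:
    """Count how many criteria are met (equal weights are an assumption)."""
    return sum([
        use_case.high_volume,
        use_case.low_judgment,
        use_case.decision_support_only,
        use_case.internal_only,
        use_case.has_success_metrics,
    ])


def rank_use_cases(use_cases: list[UseCase]) -> list[UseCase]:
    """Order candidates from most to least aligned with the criteria."""
    return sorted(use_cases, key=priority_score, reverse=True)


# Hypothetical candidates for illustration only.
candidates = [
    UseCase("automated customer refunds", True, False, False, False, True),
    UseCase("internal invoice triage", True, True, True, True, True),
]

for uc in rank_use_cases(candidates):
    print(priority_score(uc), uc.name)
```

A score like this is a conversation starter rather than a decision rule; it simply makes visible which candidates carry more judgment, autonomy, or external exposure.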

Responsible AI use, often framed as a compliance obligation, is better understood as a strategic advantage. Organizations that embed responsibility within their AI strategy can move faster because they avoid rework, regulatory surprises, and reputational damage. Responsible use entails understanding limitations, documenting decisions, monitoring outcomes, and intervening when systems produce unexpected results. This calls for intentional design rather than complex governance structures, particularly for small to medium-sized businesses.

Effective governance acts as a bridge between strategy and execution, addressing questions that strategy alone cannot answer: who approves new AI use cases, who owns data and outputs, how risks are evaluated, and what happens when AI generates unintended outcomes. Without robust governance, AI initiatives risk becoming fragmented, with different teams adopting disparate tools and reusing data sources without oversight.

As organizations expand their AI capabilities, they must remain vigilant about scaling responsibly. Success at scale is contingent on regularly revisiting readiness and risk assumptions, integrating AI into existing security and compliance frameworks, monitoring outputs, and treating AI as a lifecycle rather than a one-time deployment. A lifecycle mindset ensures that governance evolves alongside AI systems, preventing stagnation and maintaining alignment as complexity increases. As organizations continue to operationalize AI through integrated assistants, the focus must remain on amplifying human capability rather than eroding judgment.

In 2026, the prevailing question is not whether to adopt AI but how to do so deliberately and responsibly, with a focus on long-term goals. Organizations that navigate this successfully will be the ones able to innovate continuously, treating AI as a strategic capability rather than merely a collection of tools.
