Cybersecurity researchers have uncovered a sophisticated malware campaign that exploited the trusted AI infrastructure of Hugging Face to distribute Android banking trojans at scale. The operation, identified by Bitdefender, used the popular machine learning platform to host thousands of malicious payloads, allowing them to slip past traditional security filters.

Attackers initiated a multi-stage infection chain starting with a fake security application named TrustBastion. Users were confronted with pop-ups claiming their devices were infected, which prompted them to install what appeared to be legitimate security software. Once installed, the application immediately demanded an update, using dialog boxes that closely resembled official Google Play and Android system interfaces.

However, instead of connecting to Google’s servers, the app contacted an encrypted endpoint at trustbastion.com, receiving an HTML file that contained a redirect link to Hugging Face repositories. This method allowed attackers to exploit the platform’s trusted reputation, as security tools typically do not flag traffic from established domains like Hugging Face.

The campaign generated new payloads approximately every 15 minutes through a technique known as server-side polymorphism. This produced thousands of slightly different malware variants, each with its own file hash, so signatures derived from earlier samples failed to match new ones and hash-based detection was effectively evaded. At the time of investigation, the repository was around 29 days old and had accumulated more than 6,000 commits, according to Bitdefender's analysis.
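To illustrate why hash-based blocklists struggle against server-side polymorphism, the sketch below simulates it in Python. It is a simplified model under stated assumptions: the `make_variant` function is a hypothetical stand-in that appends random padding to placeholder bytes (real campaigns may alter payloads in other ways), and the "payload" is harmless dummy data, not malware. A defender blocklists the SHA-256 hash of one captured sample, then checks a day's worth of fresh variants against it.

```python
import hashlib
import os


def make_variant(base_payload: bytes) -> bytes:
    """Hypothetical stand-in for server-side polymorphism: append 16 random
    bytes so every build differs slightly from the previous one."""
    return base_payload + os.urandom(16)


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Placeholder bytes standing in for an APK; this is not real malware.
base = b"PLACEHOLDER_APK_BYTES"

# A defender captures one sample and adds its hash to a blocklist.
first_sample = make_variant(base)
blocklist = {sha256_hex(first_sample)}

# The server keeps producing fresh variants: 96 builds is roughly one day
# at one build every 15 minutes.
new_variants = [make_variant(base) for _ in range(96)]
missed = [v for v in new_variants if sha256_hex(v) not in blocklist]

print(f"blocked: {len(new_variants) - len(missed)}, missed: {len(missed)}")
```

Even though every variant shares the same functional core, a change of a few bytes yields an unrelated hash, so the single blocklisted hash matches none of the new builds. This is why behavior-based detection, rather than exact-match signatures, is the usual countermeasure.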

Once the second-stage payload was installed, it sought Accessibility Services permissions under the guise of “Phone Security” features. These permissions granted the remote access trojan (RAT) broad visibility into user interactions on compromised devices, allowing it to record screens in real time, capture lock screen passwords, and display fraudulent authentication interfaces.

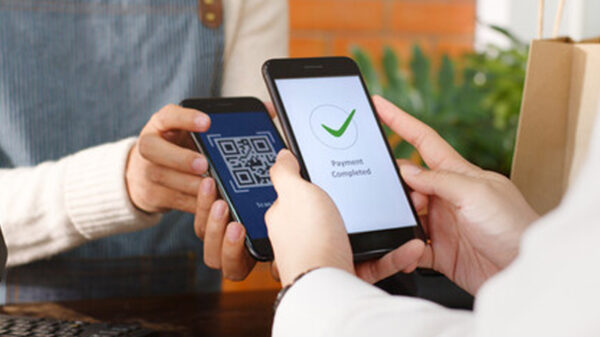

The banking trojan specifically targeted financial applications, including Alipay and WeChat, displaying fake authentication screens to steal user credentials. The malware could also intercept SMS messages containing two-factor authentication codes, enabling attackers to bypass multi-factor authentication and conduct unauthorized transactions while the device appeared to operate normally.

Hugging Face, which relies primarily on the open-source ClamAV antivirus engine to scan uploads, lacked adequate content vetting mechanisms to detect such malicious activity. The platform's minimal barriers to contribution and its community-driven validation, while beneficial for legitimate AI development, also created a layer of trust that sophisticated threat actors could exploit.

When the TrustBastion repository vanished in late December 2025, a new operation known as “Premium Club” emerged almost immediately using the same underlying code. Bitdefender contacted Hugging Face prior to publishing its research, prompting the platform to quickly remove the malicious datasets. However, the campaign had already infected thousands of victims across multiple continents.

The operation focused on regions with high smartphone banking adoption but potentially lower mobile security awareness, maximizing potential financial gains. Forensic analysis indicated connections to previously known cybercriminal operations, suggesting involvement by an established threat actor group rather than opportunistic amateurs. Security experts argue that the campaign points to a much larger problem: if attackers can successfully exploit Hugging Face's infrastructure, similar tactics could be applied to other AI platforms and collaborative development environments.

The economics favor cybercriminals, who can lower operational costs while increasing infection success rates by leveraging the infrastructure and reputation of trusted platforms. Traditional perimeter security and signature-based detection proved inadequate against malware distributed through these trusted channels. Experts recommend that organizations implement behavioral analysis systems to identify anomalous application activity, including unusual permission requests and unexpected network communications.
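One simple building block of such behavioral analysis is flagging apps whose permissions do not match their stated purpose. The Python sketch below is a hypothetical heuristic, not a tool described by Bitdefender or any vendor. The Android permission strings are real; the `EXPECTED_BY_CATEGORY` allowlist, the `AppProfile` type, and the scoring thresholds are illustrative assumptions. It treats the permissions as signals extracted from an app's manifest (the accessibility entry is declared on a service rather than requested like an ordinary permission).

```python
from dataclasses import dataclass, field

# Real Android permission strings that banking trojans commonly abuse.
ACCESSIBILITY = "android.permission.BIND_ACCESSIBILITY_SERVICE"
RECEIVE_SMS = "android.permission.RECEIVE_SMS"
READ_SMS = "android.permission.READ_SMS"
OVERLAY = "android.permission.SYSTEM_ALERT_WINDOW"
INSTALL = "android.permission.REQUEST_INSTALL_PACKAGES"

HIGH_RISK = {ACCESSIBILITY, RECEIVE_SMS, READ_SMS, OVERLAY, INSTALL}

# Hypothetical allowlist: high-risk permissions each app category might
# plausibly justify. A real policy would be far more detailed.
EXPECTED_BY_CATEGORY = {
    "messaging": {RECEIVE_SMS, READ_SMS},
    "accessibility_tool": {ACCESSIBILITY},
    "security": set(),
}


@dataclass
class AppProfile:
    """Hypothetical summary of an app: name, declared category, manifest signals."""
    name: str
    category: str
    permissions: set = field(default_factory=set)


def flag_excessive_permissions(app: AppProfile) -> set:
    """Return high-risk permissions that the app's category does not justify."""
    expected = EXPECTED_BY_CATEGORY.get(app.category, set())
    return (app.permissions & HIGH_RISK) - expected


def is_suspicious(app: AppProfile) -> bool:
    """Flag apps with unexplained accessibility plus SMS access, or with
    three or more unexplained high-risk permissions (assumed thresholds)."""
    unexpected = flag_excessive_permissions(app)
    # Accessibility plus SMS access lets an app both watch the screen and
    # read one-time codes, the combination described in this campaign.
    combo = ACCESSIBILITY in unexpected and bool(unexpected & {RECEIVE_SMS, READ_SMS})
    return combo or len(unexpected) >= 3
```

For example, a profile resembling a fake "security" app that declares accessibility, SMS, and package-install capabilities is flagged, while a messaging app that only reads SMS is not:

```python
fake_security = AppProfile("TrustBastion-like", "security",
                           {ACCESSIBILITY, RECEIVE_SMS, INSTALL,
                            "android.permission.INTERNET"})
sms_app = AppProfile("Example SMS", "messaging", {RECEIVE_SMS, READ_SMS})
print(is_suspicious(fake_security), is_suspicious(sms_app))
```

A static check like this is only one signal; in practice it would be combined with runtime monitoring of network destinations and update behavior.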

For individual users, this campaign emphasizes the necessity of maintaining skepticism even toward applications from seemingly legitimate sources. Security professionals advise verifying application authenticity through multiple channels and closely reviewing permission requests before granting access, particularly for applications seeking excessive permissions relative to their stated functions.

This incident has ignited broader discussions regarding content moderation and security verification for AI platforms. Unlike traditional software repositories that can scan for known malware signatures, AI model repositories face unique challenges in distinguishing between legitimate models, poorly documented projects, and deliberately malicious uploads.

Industry experts suggest that AI platforms may need to consider implementing tiered trust systems akin to established software repositories, which would require additional verification for specific content types and involve automated scanning for known malicious patterns. As artificial intelligence continues its rapid integration into daily technology, the security implications of AI infrastructure abuse will likely grow more severe. The Hugging Face campaign serves as a case study in how trusted platforms can be weaponized, underscoring the need for adaptive security practices in an increasingly complex digital ecosystem.
