As discussions surrounding the adoption of artificial intelligence (AI) agents intensify, many enterprises may overlook critical risks related to data security, integrity, and costs. At the India AI Impact Summit 2026, Indian AI-native transformation foundry Arinox AI and agentic AI company KOGO unveiled what they claim is India’s first sovereign AI product — a sophisticated system dubbed “AI in a box.”

CommandCORE, the product of Arinox AI and KOGO’s collaboration, promotes a counterintuitive vision for the future of AI: one that is private, sovereign, and physically compact. The system is designed to run locally, without an internet connection. The product draws on partnerships with Nvidia and Qualcomm, and its latest iteration runs on Nvidia hardware.

“The future of AI is private, on an enterprise level too. You simply cannot farm out your intelligence. The only way an organization can exponentially increase its own intelligence and learning is by keeping AI private. It must own the AI,” explained Raj K Gopalakrishnan, CEO and Co-Founder of KOGO AI, during an interview.

This “AI in a box” concept is both ideological and technical, encouraging organizations to look beyond large language models (LLMs) and graphics processing units (GPUs). Gopalakrishnan emphasized that organizations using public foundation models are not merely processing prompts but are also exposing operational insights. “Sensitive industries, when they share data with foundational models and cloud-based AI services, are also sharing intelligence,” he stated.

According to an AI Threat Landscape 2025 analysis by security platform HiddenLayer, 88% of enterprises are concerned about vulnerabilities arising from third-party AI integrations, including popular tools such as OpenAI’s ChatGPT, Microsoft Copilot, and Google Gemini. A report from MIT in August last year found that 95% of corporate generative AI pilots had failed, with privacy cited as a significant concern.

The architecture of this private AI solution consists of four layers: specialized Nvidia hardware; KOGO’s agentic operating system; an Enterprise Agent Suite with more than 500 connectors for enterprise workflows; and open-source models that keep the AI sovereign. Hardware variants include Nvidia’s Jetson Orin-class edge systems for field deployments, DGX Spark for compact on-premises development, and enterprise data center configurations built on Nvidia RTX Pro 6000 Blackwell Server Edition GPUs.

“This box is designed to cut through the complexities of hardware, software, and application layers, which an enterprise would have to independently orchestrate. It will handle focused workloads, repeatable tasks, and can expand to large clusters for an entire workflow,” remarked Angad Ahluwalia, chief spokesperson of Arinox AI. Scalability is achieved by linking multiple units together; three configurations are currently available, with more expected in the coming months. Pricing begins at ₹10 lakh.

The small CommandCORE configuration can run models with 1 billion to 7 billion parameters, making it suitable for enterprises deploying several agents for tasks such as batch processing or human resources onboarding. The medium configuration handles models of 20 billion to 30 billion parameters, catering to complex agents that need more advanced inference.

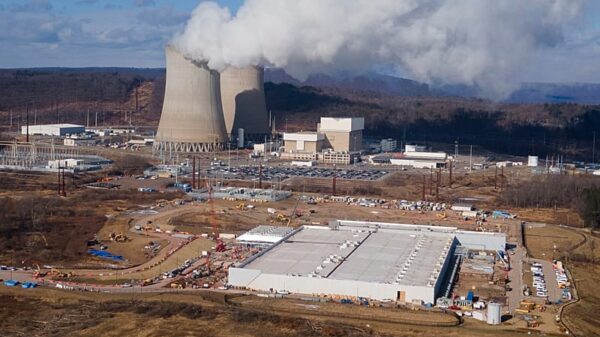

“As AI adoption expands across regulated and sensitive environments, organizations require accelerated computing platforms that can function entirely on-premise and under strict security controls,” stated Vishal Dhupar, Managing Director of Nvidia India. Ahluwalia added that the largest configurations, comparable to Nvidia’s Grace Blackwell-based DGX clusters, are capable of driving enterprise-wide transformation and can support models of up to 405 billion parameters when two units are interconnected.
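The parameter ranges above translate roughly into memory requirements, which is one way to see why larger tiers need more hardware. The sketch below estimates only the memory needed to hold model weights at a few common numeric precisions. It is an illustration under stated assumptions: the article does not say which precision CommandCORE uses, and the estimate ignores KV cache, activations, and runtime overhead. The names `BYTES_PER_PARAM` and `weight_memory_gb` are hypothetical.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions (not from the article): weights only, ignoring KV cache,
# activations and runtime overhead; 1 GB = 1e9 bytes.
# BYTES_PER_PARAM and weight_memory_gb are hypothetical names.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate weight footprint in GB.

    params_billion * 1e9 params * bytes_per_param / 1e9 bytes-per-GB
    simplifies to params_billion * bytes_per_param.
    """
    return params_billion * BYTES_PER_PARAM[precision]


# Upper ends of the small and medium tiers, plus the largest model cited.
for size in (7, 30, 405):
    row = ", ".join(f"{p}: {weight_memory_gb(size, p):g} GB" for p in BYTES_PER_PARAM)
    print(f"{size}B params -> {row}")
```

Under these assumptions, even at 4-bit precision a 405-billion-parameter model needs about 202.5 GB for its weights alone, which is one plausible reason the largest configuration is described as spanning two interconnected units.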

Beyond sovereignty and security, Gopalakrishnan highlighted an economic rationale for private, local AI systems. He cited the example of commercial electric vehicle (EV) charging and battery swap stations, each of which can generate up to 30 terabytes of data a day. “If there are 1,000 stations owned by the same organization and they have to send all this data to the cloud, think of the cost,” he said. The alternative is edge processing: a small device at each station operates without internet connectivity and sends only about 200 GB of data to the cloud for further processing, reducing both bandwidth and cloud computing costs.
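The scale of the savings in Gopalakrishnan’s example can be checked with simple arithmetic. The sketch below assumes, since the article does not state it explicitly, that both the 30 TB and 200 GB figures are per station per day, and it uses decimal units (1 TB = 1,000 GB). The helper name `daily_upload_gb` is hypothetical.

```python
# Back-of-the-envelope bandwidth comparison for the EV-station example.
# Assumptions (not from the article): both figures are per station per day,
# and 1 TB = 1,000 GB (decimal units). daily_upload_gb is a hypothetical helper.

GB_PER_TB = 1_000


def daily_upload_gb(stations: int, gb_per_station: float) -> float:
    """Total data sent to the cloud per day across all stations, in GB."""
    return stations * gb_per_station


stations = 1_000
raw_gb = daily_upload_gb(stations, 30 * GB_PER_TB)  # ship all raw data: 30 TB per station
edge_gb = daily_upload_gb(stations, 200)            # ship only edge-processed output: 200 GB per station

reduction = 1 - edge_gb / raw_gb  # fraction of upload volume avoided

print(f"Cloud-only: {raw_gb / GB_PER_TB:,.0f} TB/day")
print(f"Edge-first: {edge_gb / GB_PER_TB:,.0f} TB/day")
print(f"Reduction:  {reduction:.1%}")
```

Under these assumptions the fleet would upload about 30,000 TB a day without edge processing versus about 200 TB with it, a reduction of roughly 99.3% in data sent to the cloud.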

Arinox and KOGO are targeting sensitive sectors such as finance, banking, government services, and defense. As the demand for secure, scalable, and efficient AI solutions continues to grow, the introduction of CommandCORE may represent a significant shift in how organizations approach AI technology.
