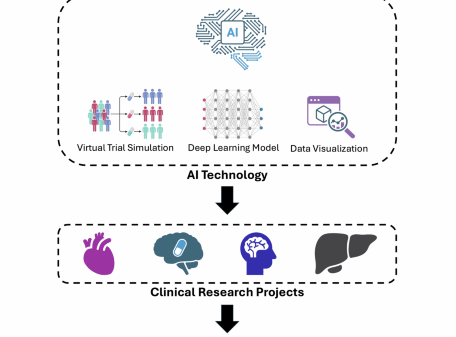

The integration of artificial intelligence (AI) into qualitative research is gaining traction, and recent findings indicate that large language models (LLMs) can complement human analysis rather than merely replicate it. A team from the University of Southampton, working with Ipsos UK, explored this by analyzing 138 short stories written by young people aged 13 to 25 in Southampton. The stories examined the connections between identity, food choices, and social media, producing the kind of rich data that usually takes human researchers considerable time to interpret.

The results were striking. OpenAI’s o1 and Anthropic’s Claude 3 Opus both produced analyses that closely mirrored those of the human researchers, yet they also offered unexpected insights that challenged the researchers’ initial assumptions. The LLMs processed the narratives in approximately 12 hours, compared with the 64 hours the human researchers spent over 16 weeks, illustrating how much time AI tools can save in qualitative work.

To achieve these results, the research team developed a structured four-step framework that included setting clear roles for human and LLM analysts, selecting the most suitable models for the task, formatting data for optimal processing, and employing prompt engineering to refine the models’ outputs. By treating the LLMs as collaborators rather than infallible sources, the researchers aimed to strike a balance between human subjectivity and the computational efficiency offered by AI.

When the team compared the outputs of the LLMs with those of the human researcher, they found that the models quickly produced narrative groupings but that the initial approach ran into problems. Processing all 138 stories at once exceeded what the models could handle reliably within their context window, leading to inaccuracies. By reformulating the approach, supplying the data as JSON and processing stories individually, the researchers significantly improved the accuracy and depth of the analyses.
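The per-story JSON approach can be sketched in Python. This is a minimal illustration under stated assumptions, not the team's actual pipeline: the record fields (`story_id`, `text`), the prompt wording, and the `call_llm` callable are all hypothetical, with `call_llm` standing in for whichever model client a project actually uses.

```python
import json
from typing import Callable

# Hypothetical prompt wording; it casts the model as a second analyst, not an oracle.
PROMPT_TEMPLATE = (
    "You are assisting a qualitative researcher as a second analyst. "
    "Read the story in the JSON object below and suggest themes linking "
    "identity, food choices and social media, quoting the text that "
    "supports each theme.\n\n{record}"
)


def build_prompts(stories: list[str]) -> list[tuple[int, str]]:
    """Wrap each story in its own JSON record and prompt (hypothetical schema)."""
    prompts = []
    for story_id, text in enumerate(stories, start=1):
        record = json.dumps({"story_id": story_id, "text": text}, ensure_ascii=False)
        prompts.append((story_id, PROMPT_TEMPLATE.format(record=record)))
    return prompts


def analyse_individually(
    stories: list[str], call_llm: Callable[[str], str]
) -> dict[int, str]:
    """Send one story per request so no single call has to hold the whole corpus."""
    return {story_id: call_llm(prompt) for story_id, prompt in build_prompts(stories)}


if __name__ == "__main__":
    # Stand-in for a real model client: it only reports the prompt length.
    fake_llm = lambda prompt: f"{len(prompt)} characters received"
    # Placeholder stories, not data from the study.
    stories = ["I post what I cook so my friends see it.", "Dinner is family time."]
    for story_id, reply in analyse_individually(stories, fake_llm).items():
        print(story_id, reply)
```

Because each request carries a single story, every prompt stays small relative to a model's context window, and replies keyed by `story_id` can be compared one-to-one with a human coder's notes on the same story.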

The collaboration transformed the LLMs from basic analytical tools into interactive partners, offering alternative interpretations that prompted deeper reflection on the researchers’ part. This dynamic raises essential questions about the roles of reflexivity and subjectivity in qualitative research. The findings suggest that LLMs can support researchers in thinking reflexively, providing diverse interpretations that can expose biases in conventional analysis.

Despite these advances, the researchers urge caution when using LLMs. They advise reviewing AI-generated content skeptically and interrogating the models’ outputs for accuracy and credibility. Data security also remains paramount: researchers must comply with GDPR and institutional ethics requirements when using AI tools in their work.

Transparency in methodology is crucial; researchers should keep detailed records of how LLMs are integrated into their analyses so that they can explain their processes when presenting findings. The overarching question for qualitative researchers is not whether AI will replace them, but how they can collaborate with AI safely and effectively to enhance their research.
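One lightweight way to keep such records is an append-only log with one entry per LLM step. The sketch below is an assumption-laden illustration, not a method described in the study: the field names and the functions `make_log_entry` and `append_entry` are hypothetical. It stores SHA-256 hashes rather than raw text, so the log can show which prompt and output were used without itself duplicating participant data; the prompts and outputs would still need to be archived under the project's own data-management and GDPR arrangements.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_hex(text: str) -> str:
    """Return the hex SHA-256 digest of a UTF-8 string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_log_entry(
    model: str, prompt: str, output: str, reviewer: str, note: str = ""
) -> dict:
    """Record one LLM step (hypothetical fields).

    Hashes identify the exact prompt and output without copying their text.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,                      # model name/version as used
        "prompt_sha256": sha256_hex(prompt),
        "output_sha256": sha256_hex(output),
        "reviewed_by": reviewer,             # researcher who checked the output
        "note": note,                        # e.g. which suggestions were kept
    }


def append_entry(path: str, entry: dict) -> None:
    """Append the entry as one JSON line (JSON Lines format)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
```

For example, `append_entry("llm_audit.jsonl", make_log_entry("model-name", prompt, reply, "Researcher A", "kept 3 of 5 themes"))` would add one auditable line per step, where the file name and model label are placeholders.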

Ultimately, the study highlights the potential of LLMs as valuable collaborators in qualitative research, capable of maximizing the impact of studies aimed at understanding complex human experiences. As AI continues to evolve, its integration into social sciences offers exciting opportunities for innovation, provided researchers approach these tools with responsibility and mindfulness regarding their ethical implications.

Sarah Jenner is a lecturer in child and adolescent health at the University of Southampton. Dimitris Raidos is an associate director at Ipsos UK.
