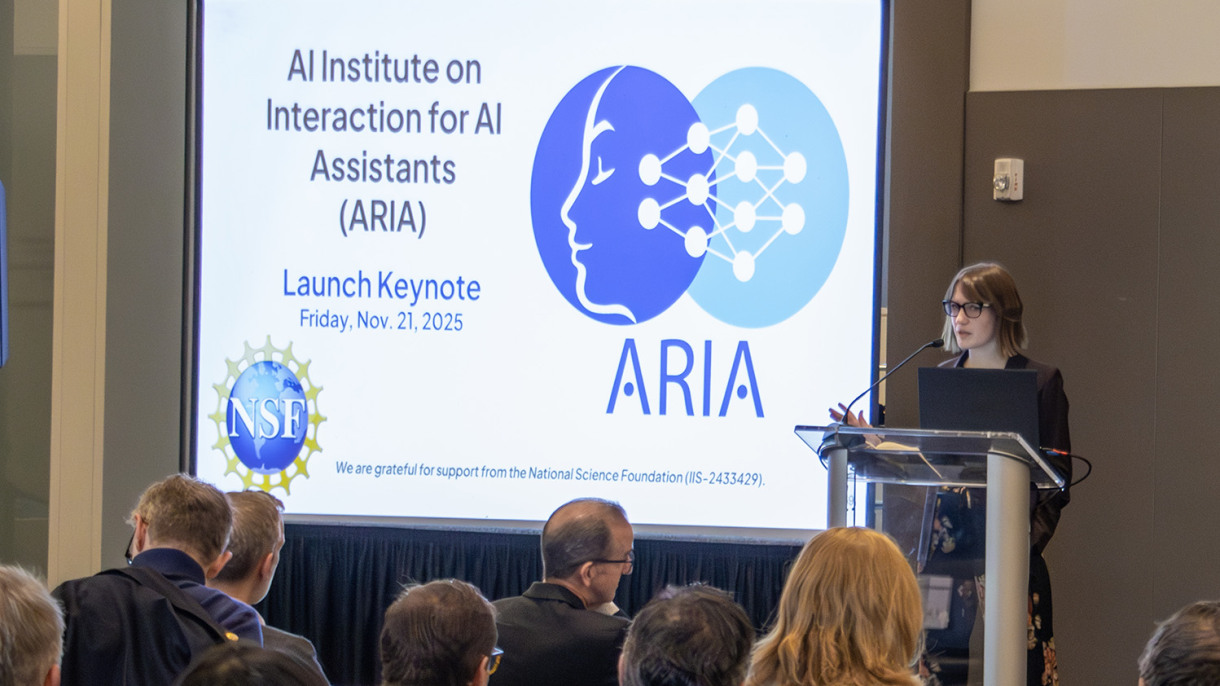

Researchers from a range of disciplines, including computer science, psychology, and neuroscience, convened at Brown University on November 20-21 to launch the new AI Research Institute on Interaction for AI Assistants (ARIA), funded by a five-year, $20 million grant from the National Science Foundation (NSF). Led by Ellie Pavlick, an associate professor of computer science at Brown, ARIA aims to develop a new generation of AI assistants designed for trustworthy, sensitive, and context-aware interactions with users. A focal point of this research is mental and behavioral health, where the need for AI systems that can foster trust and ensure safety is increasingly vital.

The late-November gathering served as an initial meeting for the ARIA team, allowing researchers from various institutions to collaboratively outline their strategic direction for the next five years. Pavlick emphasized that the goal was to identify key research themes that not only leverage their collective strengths but also address pressing challenges in AI development. “With a group like this addressing a problem that’s so enormous, there are probably 40 different research themes that we could choose,” she noted. “We wanted to start thinking about which five or so themes are ones that best build on our research strengths and are also really important to the problem.”

On the first day, team members held brainstorming sessions to define critical research questions and the methods for tackling them. On the second day, the team hosted a public forum at Hazeltine Commons in Brown’s Engineering Research Center to introduce the scope of its research to the broader academic community.

Pavlick remarked on the importance of public engagement in this initiative, stating, “This was never meant to be — nor does NSF want it to be — something where we just go back to our labs and let people know in five years what we came up with. So this event was about letting people know what we’re thinking about and how to interact with us.”

A highlight of the public event was a presentation by Julian Jara-Ettinger, a psychology professor at Yale University, whose research investigates human social intelligence. He discussed the cognitive mechanisms humans use to infer the mental states of others, a complex task that designers of artificial systems need to understand if they aim to build similar capabilities. Jara-Ettinger’s insights into social cognition could provide a framework for improving AI’s ability to navigate intricate human interactions.

Following Jara-Ettinger’s talk, clinical psychologist and Brown professor of psychiatry and human behavior Nicole Nugent offered a response, praising ARIA for its multidisciplinary framework. “One of the things that I’m so excited about for your center is that you have built, very intentionally, these interdisciplinary connections,” she stated. “You have cognitive scientists, computer scientists, and mental health professionals all collaborating. I would encourage everyone here today to think about how you can take this interdisciplinary approach and keep moving it forward.”

Researchers representing institutions such as Colby College, Dartmouth College, Carnegie Mellon University, the University of California, San Diego, and the University of New Mexico contributed to the discussions, presenting mini-talks that outlined the thematic focus areas of ARIA’s research. Among these themes are:

- AI interpretability: Understanding the underlying processes through which AI systems formulate responses.

- Adaptability: Investigating how AI systems can appropriately respond to varying user needs and contexts.

- Participatory design: Engaging stakeholders in the design process of AI systems to enhance usability and effectiveness.

- Trustworthiness: Developing a Consumer Reports-style evaluation framework to measure the trustworthiness of AI systems in mental health applications.

In his opening remarks, Brown Provost Francis J. Doyle expressed confidence in the university’s ability to foster ARIA’s collaborative ethos. “To be successful, institutes like ARIA really need to be nexus points for scientific innovation — building collaborations on and off campus, and facilitating connections between researchers and practitioners alike, both within and outside the academy,” he stated. Doyle emphasized that Brown’s strengths in interdisciplinary collaboration and in working across traditional boundaries uniquely position it to advance ARIA’s mission over the coming years.

The convergence of experts from different fields at ARIA underscores the growing recognition that the future of AI development, particularly in sensitive domains like mental health, hinges on interdisciplinary collaboration. As AI systems become increasingly integrated into our lives, the need to ensure they are trustworthy, adaptable, and interpretable is more pressing than ever. The ARIA initiative is a promising effort to tackle these multifaceted challenges and to lay the groundwork for future advances in AI-human interaction.
