Researchers at Stony Brook University have identified a significant limitation in Diffusion Transformers (DiTs), a leading architecture for generating images and videos. The team of Haoyu Wu, Jingyi Xu, Qiaomu Miao, Dimitris Samaras and Hieu Le found that standard positional encoding methods often fail when a model processes data at mixed resolutions. The result is blurred images and visible artifacts that undermine the denoising process itself. Their study traces this instability to a mismatch in how positional information is interpreted across resolutions.

To address the problem, the researchers introduced a technique that aligns positional encodings so that spatial relationships are interpreted consistently regardless of resolution. The approach restores stability and improves both the quality and the efficiency of mixed-resolution image and video generation, and the authors report that it outperforms existing state-of-the-art methods. The work could matter for industries that rely on high-quality visual content, such as film and gaming.

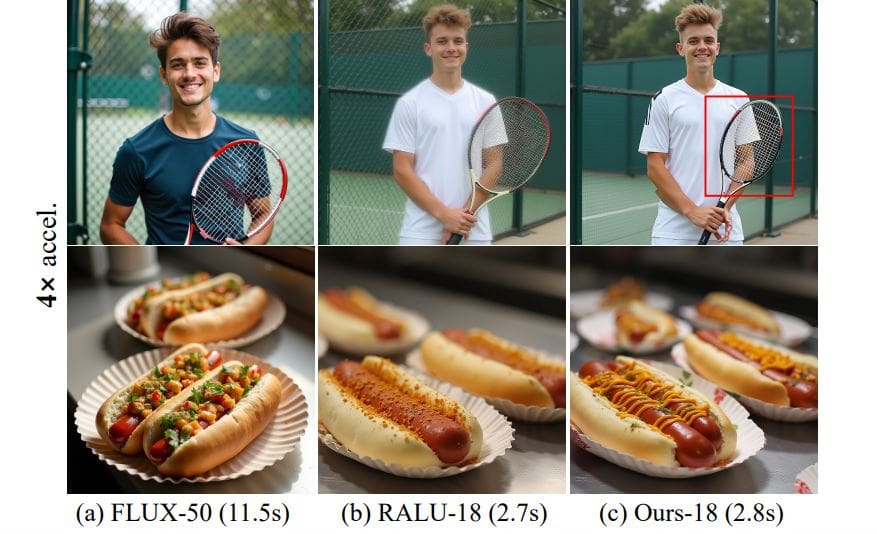

The research builds on methods that generate images and videos at varying resolutions to balance efficiency against quality. The core idea is to render only selected parts of an image or video at high resolution and process the rest at lower resolution. Saliency prediction decides which regions receive the high-resolution budget, while rotary positional encoding (RoPE) interpolation places tokens of different resolutions in a shared positional frame. The work uses diffusion models such as FLUX and Wan2.1, with convolutional networks resizing latent representations efficiently between resolutions to improve both speed and fidelity.
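To make the efficiency argument concrete, the sketch below plans a mixed-resolution layout from a saliency map. It is a toy under stated assumptions, not the authors' pipeline: the saliency values are made up, each coarse cell is assumed to cost one token at low resolution and four tokens (a 2x2 block) at high resolution, and the function name `plan_mixed_resolution` is hypothetical.

```python
def plan_mixed_resolution(
    saliency: list[list[float]], keep_fraction: float
) -> tuple[list[list[bool]], int, int]:
    """Pick the most salient coarse cells for high-resolution rendering (toy sketch).

    Assumption: a coarse cell costs 1 token at low resolution and 4 tokens
    (a 2x2 block) at high resolution.
    Returns (high_res_mask, mixed_token_count, all_high_res_token_count).
    """
    cells = [(value, r, c) for r, row in enumerate(saliency) for c, value in enumerate(row)]
    n_high = round(keep_fraction * len(cells))
    # Highest saliency first; the top n_high cells are rendered at high resolution.
    chosen = {(r, c) for _, r, c in sorted(cells, reverse=True)[:n_high]}
    mask = [[(r, c) in chosen for c in range(len(row))] for r, row in enumerate(saliency)]
    mixed_tokens = 4 * n_high + (len(cells) - n_high)
    full_tokens = 4 * len(cells)
    return mask, mixed_tokens, full_tokens


saliency = [
    [0.10, 0.20, 0.10, 0.00],
    [0.20, 0.90, 0.80, 0.10],
    [0.10, 0.70, 0.95, 0.20],
    [0.00, 0.10, 0.20, 0.10],
]
mask, mixed, full = plan_mixed_resolution(saliency, keep_fraction=0.25)
print(mixed, full)  # 28 64
```

With a quarter of the cells at high resolution, the sequence shrinks from 64 to 28 tokens. Because self-attention cost grows roughly quadratically with sequence length, that is about a fifth of the all-high-resolution attention cost in this toy setting.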

The team identified a critical failure mode in Diffusion Transformers that handle mixed-resolution data: standard linear interpolation of rotary positional embeddings makes attention collapse, which produces blurred outputs. The problem is structural. Interpolation forces attention heads to compare RoPE phases sampled at incompatible rates, an effect the authors describe as phase aliasing. Pretrained Diffusion Transformers are especially vulnerable because their attention heads are highly sensitive to specific phase patterns.
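A one-dimensional toy helps show why stride matters. Assume, for illustration, that linear interpolation places every token on a shared high-resolution index grid. Two adjacent tokens in a region downsampled by a factor s then sit s index units apart, so their RoPE phase step is s times θ rather than the θ a pretrained head saw between neighbors during training. Because rotation angles are only observable modulo 2π, a large enough step wraps around and becomes indistinguishable from a different rotation, which is one way to picture the aliasing. The helper names and the single-frequency setup are illustrative assumptions, not the paper's analysis.

```python
import math


def rope_angle(index: float, theta: float) -> float:
    """RoPE rotates each 2-D feature pair by index * theta radians."""
    return index * theta


def observed(angle: float) -> float:
    """Rotations are only distinguishable modulo 2*pi; map into [-pi, pi]."""
    return math.remainder(angle, 2 * math.pi)


def neighbor_step(index_gap: float, theta: float) -> float:
    """Observed phase step between two adjacent tokens whose indices differ by index_gap."""
    return observed(rope_angle(index_gap, theta) - rope_angle(0.0, theta))


theta = 1.0  # the highest standard RoPE frequency (base ** 0)
for stride in (1, 2, 4):
    print(stride, round(neighbor_step(stride, theta), 3))
# 1 1.0
# 2 2.0
# 4 -2.283
```

At stride 4 the 4-radian step is observed as about -2.28 radians, a rotation in the opposite direction; this single frequency cannot tell the two apart.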

To mitigate this failure, the team developed a training-free method called Cross-Resolution Phase-Aligned Attention (CRPA). CRPA modifies the RoPE index map so that all key and value positions are expressed in terms of the query's stride. As a result, equal physical distances always produce identical phase increments, restoring the phase patterns the pretrained model expects. The researchers also introduced a Boundary Expand-and-Replace step, which improves local consistency near resolution transitions by smoothly exchanging features between the low- and high-resolution regions.
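The re-indexing idea can be sketched in the same 1-D toy setting. This is a minimal illustration, not the authors' implementation: it assumes every token carries a physical position on a shared grid plus the stride of its resolution level, and the names `Token`, `interpolated_index`, `phase_aligned_index` and `relative_indices` are hypothetical. The baseline indexes every token on the high-resolution grid; the phase-aligned version divides all positions in an attention call by the query's stride, so a physical distance d always maps to an index distance of d divided by that stride.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    physical_pos: float  # position on a shared grid (high-resolution units, assumed)
    stride: float        # 1.0 for high-res tokens, 2.0 for 2x-downsampled tokens


def interpolated_index(token: Token) -> float:
    """Baseline (assumed): every token is indexed on the high-resolution grid."""
    return token.physical_pos


def phase_aligned_index(token: Token, query: Token) -> float:
    """Express a position in units of the query's stride: one attention call, one scale."""
    return token.physical_pos / query.stride


def relative_indices(query: Token, keys: list[Token], aligned: bool) -> list[float]:
    """Query-minus-key index gaps; RoPE attention logits depend only on these gaps."""
    if aligned:
        q = phase_aligned_index(query, query)
        return [q - phase_aligned_index(k, query) for k in keys]
    q = interpolated_index(query)
    return [q - interpolated_index(k) for k in keys]


low_q = Token(physical_pos=8.0, stride=2.0)
keys = [Token(6.0, 2.0), Token(7.0, 1.0), Token(10.0, 2.0)]
print(relative_indices(low_q, keys, aligned=False))  # [2.0, 1.0, -2.0]
print(relative_indices(low_q, keys, aligned=True))   # [1.0, 0.5, -1.0]
```

In the aligned case the low-resolution query sees its low-resolution neighbors exactly one index apart, the spacing it was trained on, while the high-resolution key between them lands at a half-step. In this toy, high-resolution queries are unchanged because their stride is 1.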

CRPA addresses a core limitation of Diffusion Transformers and enables high-fidelity mixed-resolution image and video generation. By re-indexing positional information, it keeps phase patterns consistent across resolutions. Combined with the Boundary Expand-and-Replace procedure, it resolves the artifacts associated with mixed resolutions, improving both the quality of the generated content and the efficiency of generation.

As artificial intelligence continues to evolve, these findings underscore the importance of refining model architectures for practical use. Saliency-guided mixed-resolution generation could enable more capable systems in sectors that depend on high-quality visual output, and the work reflects the continuing effort to make generative models more effective in real-world applications.

👉 More information

🗞 One Attention, One Scale: Phase-Aligned Rotary Positional Embeddings for Mixed-Resolution Diffusion Transformer

🧠 ArXiv: https://arxiv.org/abs/2511.19778
