Meta’s potential shift towards Google’s tensor processing units (TPUs) has intensified the competitive landscape for AI hardware, a sector long dominated by Nvidia. Reports this week indicate that Meta is in discussions with Google to spend billions on TPUs for its data centers starting in 2027, and the news moved markets sharply. Nvidia’s shares fell more than 2%, while Alphabet’s stock rose over 4%, as investors read Meta’s interest in TPUs as validation of Google’s chip strategy. AMD also fell more than 4%, amid concerns that a Meta-Google deal could intensify competition for its AI accelerators.

The reported negotiations point to a significant shift in market dynamics, particularly as Meta plans an ambitious $72 billion in infrastructure spending this year. The news also underscores growing unease about Nvidia’s dominance, as several of its key customers seek to reduce their reliance on its chips by developing their own alternatives.

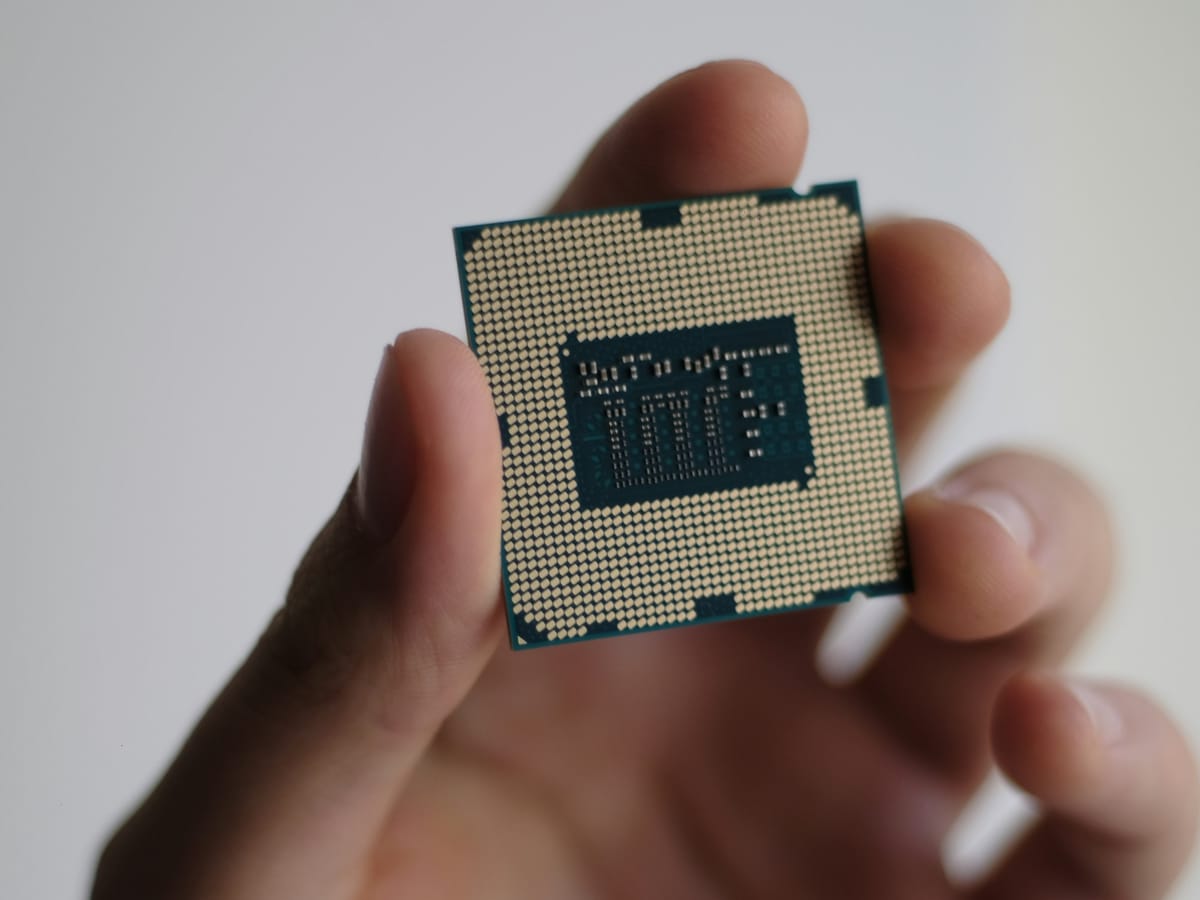

In a related development, Intel and Google Cloud announced a co-designed chip aimed at improving the security and efficiency of data centers. The E2000 chip is designed to take over the work of packaging data for networking from the central processing units (CPUs) that handle the main computing tasks, further widening the competitive field Nvidia faces.

In response to the evolving situation, Nvidia publicly addressed its position in the AI hardware market shortly after the Meta-Google news broke. The company expressed support for Google, stating, “We’re delighted by Google’s success, they’ve made great advances in AI and we continue to supply to Google.” Nvidia emphasized its competitive edge, asserting that it remains “a generation ahead of the industry” and is the only platform supporting every AI model across varied computing environments.

Nvidia also drew a key technical distinction between its GPUs, which are general-purpose processors capable of handling a wide array of AI workloads, and Google’s TPUs, which are application-specific integrated circuits (ASICs) built for more specialized tasks. Nvidia cites this distinction as the basis for its claim to greater “performance, versatility, and fungibility,” arguing that its hardware suits a broader range of workloads than chips designed for specific functions.

Google’s TPUs are gaining traction, particularly after Google trained its newest AI model, Gemini 3, entirely on TPUs; the model drew favorable reviews on release. This accomplishment has bolstered the credibility of Google’s chip ecosystem. In another sign of momentum, Anthropic has expanded its collaboration with Google and plans to use up to one million TPUs, while reports suggest that OpenAI tested Google’s hardware earlier this year. These developments have positioned TPUs as serious contenders in a market long dominated by Nvidia.

Investor sentiment around Google’s TPU business is also shifting, with research firm DA Davidson estimating a potential valuation of around $900 billion for Google’s TPU operations and DeepMind combined. Reports that leading AI labs are interested in buying TPUs directly, arriving alongside news of Meta’s talks with Google, have reinforced this view.

Meanwhile, other tech giants are building their own chips. Amazon has developed its Trainium and Inferentia chips and recently made about half a million of them available to Anthropic, while Microsoft has introduced its Maia processor for AI workloads. Even as these companies continue to buy large volumes of Nvidia GPUs, they are actively working to reduce their dependence on a single supplier.

Against this backdrop, Nvidia has faced scrutiny, especially from investors such as Michael Burry, who have questioned the sustainability of the AI boom and likened it to the dot-com era. In response, Nvidia issued a memo emphasizing its transparency and strong fundamentals and rejecting comparisons to past accounting scandals such as Enron and WorldCom. The company maintains that its strategic investments are sound, with its portfolio companies generating real revenue and seeing strong demand.

Nvidia still commands over 90% of the AI chip market and benefits from its widely used CUDA ecosystem, but recent events signal a shift in how major tech companies approach their hardware strategies. Google, Amazon, and Microsoft are each developing their own chips, and Meta’s exploration of TPUs places Google in direct competition with Nvidia. As major players diversify their options, multiple hardware paths are emerging as viable alternatives, pointing to a broader transformation in AI computing.
