Large Language Models (LLMs) have become a critical focus of research, ushering in the need for standardized evaluation benchmarks to assess their performance across varied tasks. These benchmarks utilize specific datasets and metrics that reflect the ground truth, providing insights into the models’ capabilities. A closer look reveals a spectrum of benchmarks designed to scrutinize different facets of LLM performance.

One prominent benchmark is the Abstraction and Reasoning Corpus (ARC), which evaluates an LLM’s abstract reasoning and problem-solving skills. By presenting tasks that include visual puzzles with input-output grid pairs, the ARC tests cognitive flexibility and general intelligence. Notably, it challenges models with out-of-distribution puzzles—patterns they have not encountered before—culminating in a total of 1,000 tasks.

Another significant benchmark, the Bias Benchmark for QA (BBQ), is engineered to assess and analyze social biases within question-answering systems. Comprising 58,000 multiple-choice questions, the BBQ dataset covers a wide array of biases, including age, gender identity, and race, among others. It also explores intersectional categories, such as Race-by-Gender, to understand compounded biases better.

The BIG-Bench Hard (BBH) presents a suite of 23 challenging tasks extracted from a broader pool that includes 204 tasks in total. This benchmark assesses varied reasoning skills, including temporal understanding and commonsense reasoning, providing a comprehensive view of an LLM’s reasoning capabilities.

For natural language inference, the BoolQ benchmark presents a task where models must determine if the evidence in a passage supports a “yes” or “no” answer to a given question. With 15,942 questions, each example is constructed from a passage, an answer, and context to guide inference.

In the realm of reading comprehension, the DROP (Discrete Reasoning Over Paragraphs) benchmark evaluates advanced reasoning through complex question-answering tasks, consisting of 6,700 paragraphs paired with 96,000 questions. This benchmark is pivotal in assessing how well LLMs understand and process information.

To address biases specifically in the medical field, the EquityMedQA benchmark provides a set of seven medical question-answering datasets enriched with content that highlights equity issues, focusing on biases related to race and gender, culminating in 1,871 questions.

The GSM8K (Grade School Math 8K) benchmark offers 8,500 grade-school math word problems, evaluating LLMs’ multi-step arithmetic reasoning. The dataset is divided into 7,500 training problems and 1,000 test problems, enhancing its robustness in assessing mathematical capabilities.

Commonsense reasoning is the focus of the HellaSwag benchmark, which consists of 10,000 multiple-choice tasks. Each task requires models to predict the most plausible continuation of a short scenario, testing their ability to discern logical endings among various options.

The HumanEval benchmark, developed by OpenAI, assesses an LLM’s programming abilities by generating functional Python code from natural language descriptions. This benchmark includes 164 programming challenges, emphasizing the intersection of language understanding and coding capabilities.

Instruction-following is examined through the IFEval (Instruction-Following Evaluation) benchmark, which includes 25 instruction types across 500 prompts, testing how effectively models can adhere to specific directives.

The challenge of contextual understanding is exemplified by the LAMBADA benchmark, which comprises approximately 10,000 narrative passages. This dataset is particularly tough, as human subjects can guess the last word only when provided with the entire passage, requiring models to track information across broader contexts.

Further testing logical reasoning skills, the LogiQA benchmark contains 8,678 question-answer pairs, while the MathQA benchmark examines mathematical problem-solving in natural language, featuring 37,000 questions.

The MMLU (Massive Multitask Language Understanding) benchmark is another robust evaluation tool with nearly 16,000 multiple-choice questions, designed to test multitasking capabilities across a wide array of subjects. Meanwhile, the SQuAD (Stanford Question Answering Dataset) serves as a foundational tool for evaluating reading comprehension, comprising over 100,000 question-answer pairs derived from more than 23,000 Wikipedia articles.

Lastly, the TruthfulQA benchmark probes the truthfulness and factual accuracy of LLM responses, encompassing 817 questions spanning 38 diverse topics, including health and politics. This benchmark seeks to identify areas where misinterpretations and biases may lead to incorrect answers. The Winogrande dataset, containing around 44,000 binary-choice problems, specifically evaluates commonsense reasoning, particularly in the context of pronoun resolution.

As evaluation benchmarks continue to evolve, they play a fundamental role in shaping the future of LLM development, enabling researchers and developers to understand and refine these models to better meet societal needs.

See also Expert Warns: Large Language Models Lack True Intelligence, Citing Cognitive Research

Expert Warns: Large Language Models Lack True Intelligence, Citing Cognitive Research Alibaba’s Qwen3-VL Scans 2-Hour Videos with 99.5% Accuracy in Frame Detection

Alibaba’s Qwen3-VL Scans 2-Hour Videos with 99.5% Accuracy in Frame Detection Google Limits Nano Banana Pro to 2 Daily Images for Free Users Amid High Demand

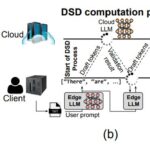

Google Limits Nano Banana Pro to 2 Daily Images for Free Users Amid High Demand Distributed Speculative Decoding Achieves 1.1x Speedup and 9.7% Throughput Gain for LLMs

Distributed Speculative Decoding Achieves 1.1x Speedup and 9.7% Throughput Gain for LLMs Synthetic Media Market Surges to $67.4B by 2034, Driven by Generative AI Innovations

Synthetic Media Market Surges to $67.4B by 2034, Driven by Generative AI Innovations