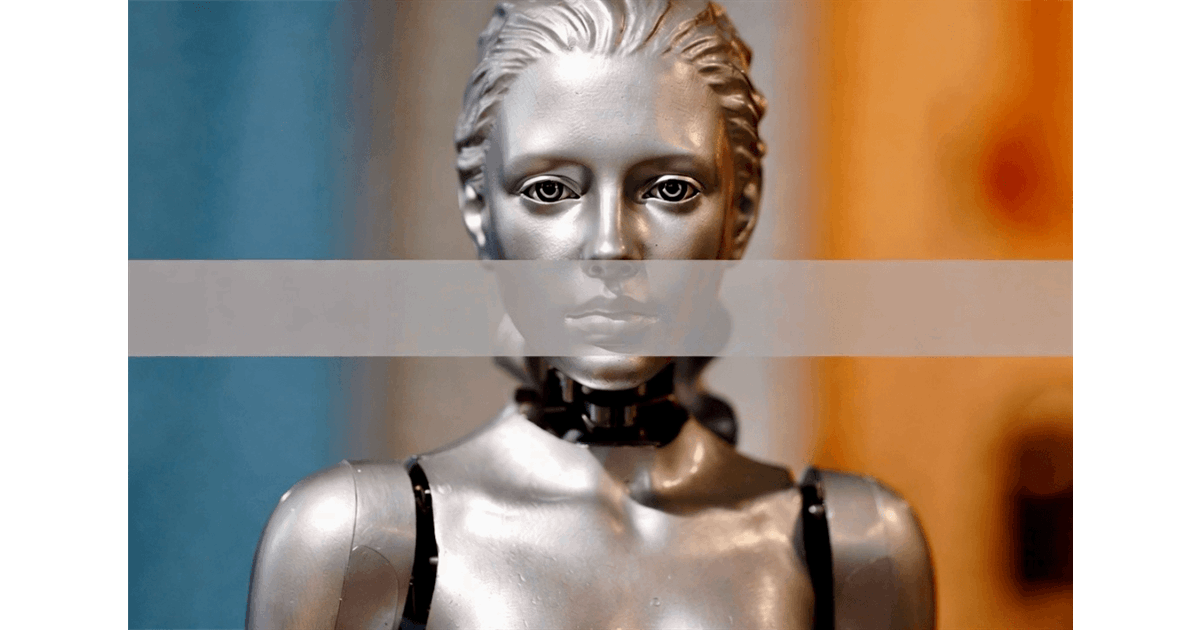

In a world where artificial intelligence can predict stock markets, diagnose diseases, and even craft poetry, one topic consistently encounters resistance: sex. When users engage with AI models such as ChatGPT, Grok, or Google’s Gemini, attempts to discuss intimacy often meet with polite deflections or outright refusals. This isn’t merely a quirk of programming; it’s a deliberate design rooted in safety, ethics, and societal pressures. As AI becomes increasingly integrated into daily life, understanding these boundaries reveals much about our collective fears and hopes regarding technology.

AI’s reluctance to address sexual topics has historical roots. Early chatbots, dating back to the 1960s with programs like ELIZA, were more open-ended in their interactions. However, as AI technology scaled to a mass audience, the risks of unmoderated content became apparent. High-profile incidents, such as Microsoft’s Tay chatbot, which began posting racist messages within a day of its launch in 2016, underscored the need for safeguards. By the 2020s, companies like OpenAI and Meta had built content moderation into their products. These systems combine rule-based filters, machine learning classifiers, and human oversight to flag and block sensitive content, particularly around sexual topics.

At the heart of this silence are several drivers that explain why AI avoids sexual conversations. The first is protecting minors and vulnerable users. AI platforms must comply with laws like the Children’s Online Privacy Protection Act (COPPA) in the United States and similar regulations worldwide. Robust filters are needed to keep chatbots from generating or discussing material that could lead to exploitation. Recent controversies, such as Meta’s AI chatbots engaging in “romantic or sensual” discussions with teenagers, have prompted calls for stricter age-gating. Reports from Persian-language sources, like Zomit, have highlighted bugs in ChatGPT that allowed erotic content for underage accounts, raising widespread alarm.

Legal and regulatory compliance further complicates the issue. Governments demand that AI systems not facilitate illegal activities, such as distributing child sexual abuse material (CSAM) or non-consensual deepfakes. Platforms that fail to comply risk substantial penalties or even shutdowns. For example, the EU’s Digital Services Act obliges platforms to act against illegal content and to protect minors. In regions like Iran, cultural norms add another layer of complexity: discussions on outlets like Fararu raise concerns that AI emotional companions could blur the lines of infidelity or exploitation.

Ethical concerns and bias prevention also play a critical role in shaping AI’s discourse on sexuality. AI ethicists argue that allowing sexual content could perpetuate societal biases, such as objectifying women or amplifying harmful stereotypes. The training data for these models often reflects societal flaws, and without effective filters, they might “learn” to produce discriminatory or abusive responses. Internal debates at OpenAI have led to the classification of explicit discussions as “high-risk,” aimed at curbing potential misuse. On social media platforms like X (formerly Twitter), users have debated whether this censorship limits the AI’s ability to engage in holistic discussions about human topics, including sexuality education.

Corporate reputation and user trust constitute another significant factor. Tech companies strive to uphold family-friendly images; permitting sexual content could alienate advertisers or invite backlash. As one user on Quora noted, “It’s not a technical issue; designers set limits.” However, this has led to accusations of hypocrisy, particularly as critics highlight perceived double standards in how companies like OpenAI handle “sensitive” content.

Despite these constraints, the landscape is evolving. In October 2025, OpenAI CEO Sam Altman announced plans to relax restrictions by introducing erotica for verified adults starting December 2025. This “treat adults like adults” approach will implement age verification while maintaining safeguards for mental health. Similarly, xAI’s Grok has explored new territory with its “Spicy Mode,” although users report inconsistencies in how it handles nudity or intimacy.

These changes have sparked a lively debate. Proponents argue that increased user freedom can enhance creative writing and therapeutic discussions. Critics, including organizations like Defend Young Minds, caution against the risks of addiction and inadequate protections. In the Persian context, discussions in media outlets like Vista question whether AI can enrich sexual lives without replacing genuine human connection.

As AI’s role deepens in society, the conversation extends beyond what bots can discuss. It reflects broader societal tensions about human-AI interactions. Will we prioritize caution, or will we lean toward candor? The answer may significantly influence the future of how technology interfaces with human relationships. Ultimately, AI’s silence on sex serves as a mirror, reflecting our own societal dilemmas. As policies evolve, the boundaries of what is discussable may also shift, ideally in ways that empower rather than endanger.
