The Albanese government has introduced Australia’s first National AI Plan, which favors voluntary transparency measures over mandatory rules requiring businesses to disclose their use of artificial intelligence. The 37-page document outlines a comprehensive strategy aimed at fostering what the government describes as “an AI-enabled economy,” while striving to maintain a “careful balance between innovation and protection from potential risks.”

Positioned as a “key pillar” of the broader Future Made in Australia agenda, the plan emphasizes the role of AI in enhancing economic capability and fostering long-term industry development. This connection has been a consistent theme in the government’s narrative around AI.

Importantly, the National AI Plan does not impose new legal obligations on businesses employing AI technologies. Instead, it relies on voluntary guidance, industry partnerships, and existing laws to provide oversight. The report asserts that Australia’s approach is designed to be “proportionate, targeted, and responsive,” introducing regulation only “where necessary.”

For businesses, the details of how support will be delivered sit largely in separate programs that the plan brings together rather than in the plan itself. Notably, the government identifies small and medium enterprises (SMEs) as central to addressing the challenges of AI adoption.

The plan encourages stakeholders, including businesses, content creators, and AI developers, to label AI-generated content through visible notices, watermarking, and metadata. However, it stops short of mandating these disclosures, echoing earlier guidance from the National AI Centre that promotes similar voluntary best practices.

The National AI Plan explicitly states that the government will not pursue a standalone AI Act, opting instead to regulate within the framework of Australia’s existing legal system. The report maintains that the country’s robust legal and regulatory frameworks will serve as the foundation for addressing and mitigating AI-related risks. The government favors a sector-by-sector approach, with regulation tailored to the specific challenges that arise in each area.

“Regulators will retain responsibility for identifying, assessing, and addressing potential AI-related harms within their respective policy and regulatory domains,” the report states. The plan argues that Australia’s legal system is already “largely technology-neutral” and can manage new risks without new legislation, a position that sets it apart from international models, particularly the European Union’s. In effect, this rules out an EU-style AI Act and cements a light-touch, incremental oversight model.

Minister for Industry and Innovation Tim Ayres said the plan aims to build public trust in AI deployment while keeping barriers to innovation low. “This plan is focused on capturing the economic opportunities of AI, sharing the benefits broadly, and keeping Australians safe as technology evolves,” Ayres remarked. Assistant Minister for Science and Technology Andrew Charlton noted that the agenda is designed to attract positive investment, support Australian businesses in adopting and developing new AI tools, and address real risks faced by everyday Australians.

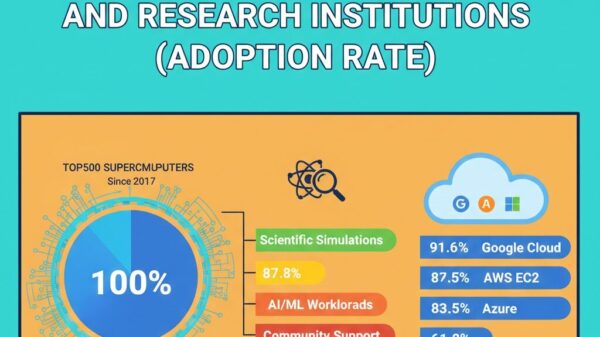

The National AI Plan is anchored by three primary goals: capturing opportunities, distributing benefits, and ensuring safety for Australians. Strategies include improving digital and physical infrastructure, expanding AI skills across educational institutions, and establishing frameworks for responsible innovation. The government also detailed three immediate “next steps”: establishing the AI Safety Institute, finalizing a new GovAI Framework to guide secure and responsible adoption within the Australian Public Service, and appointing chief AI officers in every government department.

At the heart of the announcement is the AI Safety Institute (AISI), which will receive $29.9 million in funding and is set to begin operating in early 2026. The Institute will “monitor, test, and share information on emerging AI capabilities, risks, and harms,” providing guidance to government, regulators, unions, and industry stakeholders. It aims to ensure compliance with Australian laws while upholding standards of fairness and transparency in AI practices.

According to the plan, the AISI will focus on both “upstream AI risks,” concerning the development and training of advanced models, and “downstream AI harms,” which pertain to the tangible impacts on individuals. It will also engage internationally, collaborating with partners, including the newly formed International Network of AI Safety Institutes.

Government officials present the strategy as a long-term, adaptable framework. Ayres asserted, “As the technology continues to evolve, we will continue to refine and strengthen this plan to seize new opportunities and act decisively to keep Australians safe.” Charlton framed it as a people-centered approach, underscoring, “This is a plan that puts Australians first… promoting fairness and opportunity.”

While the plan points to significant financial commitments, including a pipeline of private data center investment, most of these fall outside its newly proposed measures. Instead, the government emphasizes that it has “more than $460 million” already allocated across existing AI initiatives, and the plan aims to coordinate and guide how that funding is implemented.


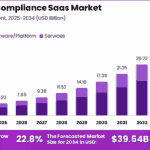
