Artificial intelligence (AI) agents are increasingly capable of identifying vulnerabilities in smart contracts, raising concerns about misuse by malicious actors. A recent study from the Anthropic Fellows program and the ML Alignment & Theory Scholars (MATS) program found that advanced AI models can write working exploit scripts, which in the wrong hands could be turned against decentralized finance (DeFi) ecosystems.

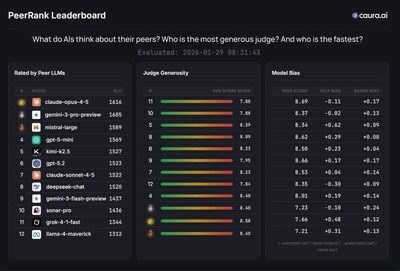

The research tested several frontier AI models, including GPT-5, Claude Opus 4.5, and Claude Sonnet 4.5, against a dataset named SCONE-bench, which contains 405 contracts known to have been exploited. On contracts hacked after the models' respective knowledge cutoffs, the models collectively produced simulated exploits worth $4.6 million. The researchers treat this figure as a conservative estimate of the financial damage such AI systems could inflict in real-world scenarios.

The findings suggest that the AI models did not merely spot bugs; they constructed complete exploits, sequenced transactions, and drained simulated liquidity, mimicking actual attacks on leading blockchains such as Ethereum and BNB Chain. The research also examined the models' capacity to identify vulnerabilities that had not yet been exploited. In a separate test, both GPT-5 and Sonnet 4.5 scanned 2,849 recently deployed contracts on BNB Chain and uncovered two zero-day vulnerabilities worth $3,694 in simulated profit.

One of the exploitable flaws was linked to a missing view modifier in a public function, which allowed the AI agent to inflate its token balance. The second flaw enabled an attacker to redirect fee withdrawals by providing an arbitrary beneficiary address. Notably, the models were able to generate executable scripts to transform these vulnerabilities into monetary gain.
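The two flaw classes described above can be illustrated with a toy model. The sketch below is written in Python rather than Solidity, and every name in it (`ToyToken`, `preview_reward`, `ToyFeeVault`, `withdraw_fees`) is hypothetical; it does not reproduce the contracts examined in the study, whose code the article does not show. It assumes the first flaw was a function intended as a read-only calculation that also wrote to state, and that the second was a withdrawal that paid a caller-supplied beneficiary without validating it.

```python
class ToyToken:
    """Hypothetical in-memory stand-in for a token contract (not the studied code)."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}

    def preview_reward(self, account: str, amount: int) -> int:
        # Intended as a read-only calculator, but it also credits the account.
        # In Solidity, marking such a function `view` forbids state writes,
        # so a missing `view` modifier lets a side effect like this slip through.
        reward = amount // 10
        self.balances[account] = self.balances.get(account, 0) + reward
        return reward


class ToyFeeVault:
    """Hypothetical fee vault that pays out to any address the caller names."""

    def __init__(self, owner: str, collected_fees: int) -> None:
        self.owner = owner
        self.collected_fees = collected_fees
        self.paid_out: dict[str, int] = {}

    def withdraw_fees(self, caller: str, beneficiary: str) -> int:
        # Assumed flaw: neither `caller` nor `beneficiary` is checked against
        # `owner`, so anyone can redirect the accumulated fees.
        amount = self.collected_fees
        self.collected_fees = 0
        self.paid_out[beneficiary] = self.paid_out.get(beneficiary, 0) + amount
        return amount


# Repeatedly calling the "calculator" inflates the caller's balance.
token = ToyToken()
for _ in range(3):
    token.preview_reward("attacker", 1_000)

# A non-owner withdraws the fees to an address of their choosing.
vault = ToyFeeVault(owner="project", collected_fees=500)
stolen = vault.withdraw_fees(caller="attacker", beneficiary="attacker")
```

In this toy version, the fixes would be to make `preview_reward` return the value without touching `balances`, and to have `withdraw_fees` reject callers other than `owner` or pay only a fixed, pre-approved beneficiary.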

Although the simulated profits may seem modest, the implications are significant: the results show that automated exploitation is not just plausible in theory but achievable in practice. Running the AI agents across the full set of 2,849 contracts cost only $3,476, an average of about $1.22 per run. As AI models become cheaper and more capable, the economics of automation are shifting in favor of attackers, lowering the cost of finding and exploiting vulnerabilities.
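The per-run figure can be checked with simple arithmetic. The sketch below assumes that one run corresponds to one of the 2,849 contracts scanned in the zero-day test, and that the $3,476 cost and the $3,694 simulated profit refer to the same scan; the article does not state either point explicitly.

```python
# Figures quoted in the article.
TOTAL_COST_USD = 3_476
SIMULATED_PROFIT_USD = 3_694

# Assumption: one agent run per contract in the 2,849-contract scan.
CONTRACTS_SCANNED = 2_849


def cost_per_run(total_cost: float, runs: int) -> float:
    """Average cost of a single agent run."""
    return total_cost / runs


avg_cost = cost_per_run(TOTAL_COST_USD, CONTRACTS_SCANNED)

# Assumption: profit and cost belong to the same scan, so they can be netted.
net = SIMULATED_PROFIT_USD - TOTAL_COST_USD

print(f"average cost per run: ${avg_cost:.2f}")
print(f"simulated profit minus scan cost: ${net}")
```

Under these assumptions the average matches the quoted $1.22, and the scan roughly breaks even, which is why falling model costs matter for the economics discussed here.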

The researchers argue that this trend could drastically reduce the time between the deployment of contracts and potential attacks, particularly in the DeFi landscape where capital is transparent and exploitable bugs can be monetized immediately. While the study focuses on DeFi, the authors caution that the capabilities demonstrated by these AI models are not confined to this sector. The techniques that enabled the inflation of token balances or the redirection of fees could easily be applied to traditional software, proprietary codebases, and the broader infrastructure supporting crypto markets.

As the cost of running these models declines and exploitation tooling improves, automated scanning is likely to extend beyond public smart contracts to any service tied to valuable digital assets. The authors frame their findings as a warning rather than a forecast, stressing that AI models now have skills that were once exclusive to highly skilled human attackers.

The research raises a critical question for developers in the cryptocurrency space: how quickly can defenses evolve to counter this emerging threat? With autonomous exploitation moving from hypothetical to demonstrated, robust safeguards are urgently needed to protect the integrity of the DeFi ecosystem.
