Following Google’s lead, Amazon Web Services (AWS) has launched its own energy-efficient artificial intelligence (AI) chip, a potential shift in a market long dominated by Nvidia. As demand for training massive AI models surges, rising costs, power consumption, and supply chain constraints have prompted companies to design their own chips. Analysts are watching closely to see whether these in-house AI chips, optimized for performance per watt, can effectively challenge Nvidia’s longstanding dominance.

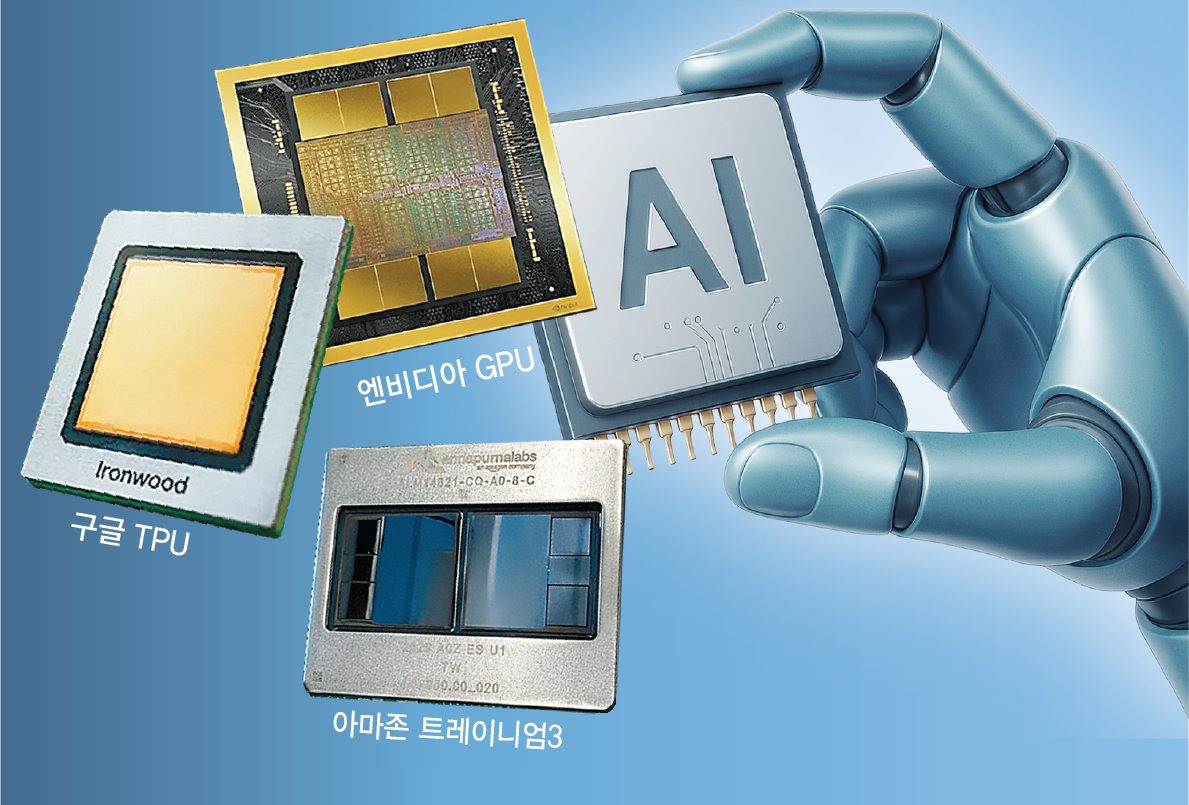

At its annual “AWS re:Invent 2025” event in Las Vegas on December 2, AWS officially unveiled its custom AI chip, Trainium3. The company also showcased UltraServers that can house up to 144 Trainium3 chips and are available for immediate deployment. AWS claims that Trainium3 delivers four times the computational performance of its previous-generation chip while consuming 40 percent less power. The company further noted that Trainium3 could cut AI model training and operating costs by up to 50 percent compared with systems built on comparable graphics processing units (GPUs). In his keynote, AWS CEO Matt Garman said Trainium3 offers the industry’s best cost efficiency for AI training and inference.

Google’s custom tensor processing units (TPUs), developed in collaboration with U.S. fabless semiconductor company Broadcom, offer similar advantages: low power consumption and reduced operating costs. TPUs powered the training and deployment of Google’s recently unveiled AI model, Gemini 3. AI startup Anthropic plans to use up to one million TPUs for model development, while Meta is reportedly adopting Google TPUs in its own data centers. OpenAI is also working with Broadcom to co-develop custom AI chips for training and running its models, including those behind ChatGPT.

The primary motivation for tech giants to develop custom AI chips is to secure a stable supply and reduce costs. Nvidia GPUs, which can process massive amounts of data in parallel, are vital to the AI ecosystem but remain chronically scarce, even for well-funded companies. As global AI investment escalates, Nvidia, the first company to market GPUs, has solidified its position as the field’s dominant player, controlling approximately 90 percent of the GPU-based AI chip market.

Each GPU is priced between $30,000 and $40,000 (about 44 million to 59 million won). Once energy expenses are factored in, companies have concluded that purpose-built chips optimized for specific computations are more economical over the long term. Individual companies’ distinct requirements also drive custom chip development: AWS needs chips tailored for its cloud services, while Google seeks processors built specifically for training large language models such as Gemini. Although general-purpose GPUs can handle most computations, chips designed around a company’s own model architecture can perform similar tasks using less power.

Despite these advances, industry analysts caution that Nvidia’s dominance is unlikely to be seriously challenged in the near term. Global AI research and development is built heavily around Nvidia GPUs and the company’s CUDA software platform. Given the scale of existing infrastructure investments and the potential costs of switching, companies are not expected to replace Nvidia hardware anytime soon. Nvidia has acknowledged Google’s progress in AI but maintains that its own products remain a generation ahead of competitors.

As competition intensifies, major tech firms’ push to develop proprietary AI chips reflects a broader effort to improve efficiency and reduce dependence on a single supplier. The trend signals a potential reshaping of the AI chip market and underscores the growing importance of hardware tailored to specific operational needs. How AI technology and infrastructure evolve will ultimately determine whether these companies can meaningfully challenge Nvidia’s supremacy.
