The growing reliance on facial recognition for remote authentication is raising security concerns, particularly about advanced video injection attacks. A team of researchers from Verigram, including Daniyar Kurmankhojayev, Andrei Shadrikov, and Dmitrii Gordin, has developed a machine learning model that detects manipulated video feeds. The approach aims to protect the integrity of facial recognition systems against increasingly sophisticated bypass attempts.

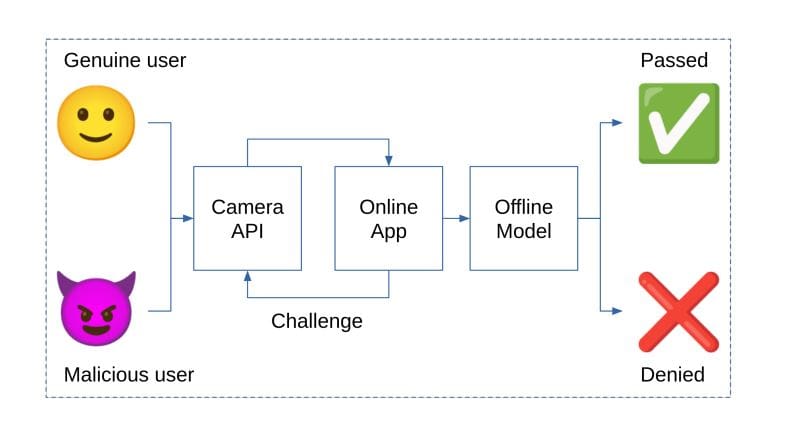

The researchers focus on virtual camera detection, a topic that has received little attention in the anti-spoofing literature. Using metadata collected during user authentication sessions, their method distinguishes authentic camera inputs from those originating from software-based virtual devices. Because it relies on this metadata, the approach avoids the complex image processing typically associated with presentation attack detection.

To train their detection model, the researchers amassed a dataset that accurately reflects genuine user interactions and potential spoofing scenarios. By capturing metadata during real-world authentication attempts, they engineered a machine learning model that can classify video sources as either physical or virtual cameras. Experiments utilizing both real cameras and various virtual camera software enabled a comprehensive assessment of the system’s capabilities, confirming its effectiveness in identifying malicious video injection attempts.
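As a rough illustration of what training a physical-versus-virtual classifier on session metadata could look like, here is a minimal sketch in plain Python. The article does not name the features or the model family the researchers used, so everything here is an assumption: the two numeric features, the toy data, and the choice of logistic regression are hypothetical stand-ins, not the authors' method.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(samples, labels, epochs=2000, lr=0.5):
    """Fit weights and a bias by batch gradient descent on the log loss."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias

def predict_virtual(weights, bias, x, threshold=0.5):
    """Return True when the session looks like a virtual camera."""
    score = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    return score >= threshold

# Hypothetical toy data, invented for illustration only.
# Each row: [share of requested settings honored, supported resolutions / 10]
sessions = [
    [1.0, 0.8], [0.9, 0.6], [1.0, 0.7],   # labeled physical (0)
    [0.2, 0.1], [0.3, 0.2], [0.1, 0.1],   # labeled virtual (1)
]
labels = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic(sessions, labels)
```

A real system would use far more sessions, held-out evaluation data, and whatever metadata fields the production pipeline actually records.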

The findings indicate that this model can effectively enhance face anti-spoofing systems, addressing the growing threat presented by deepfakes and other digital deceptions. The research highlights a pressing consumer concern, with 72% of individuals expressing daily worries about being misled by synthetic media. As the prevalence and realism of such content escalate, the need for robust security measures becomes increasingly critical.

By prioritizing the source of video input, the team’s detection method supplements traditional liveness detection techniques, which can falter in the face of advanced video manipulations. The researchers found that their model successfully identifies video streams from virtual cameras by analyzing responses to challenges issued to the camera driver via the browser API, potentially offering a more efficient solution to remote biometric authentication security.
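The challenge-and-response idea can be sketched as a small feature computation. The article does not say which browser calls or settings are involved, so the following is only an assumption-laden example: it supposes each challenge is logged as a pair of dictionaries (what was requested, what the device reported back) and computes the share of requested values that came back unchanged. In a browser, such pairs might be gathered with `MediaStreamTrack.applyConstraints()` and `getSettings()`, but that mapping is a guess, and the function name and field names below are hypothetical.

```python
def honored_fraction(challenges):
    """Share of requested settings that the device reported back unchanged.

    `challenges` is a list of (requested, reported) dict pairs.
    Returns 0.0 when no settings were requested at all.
    """
    total = 0
    honored = 0
    for requested, reported in challenges:
        for key, value in requested.items():
            total += 1
            if reported.get(key) == value:
                honored += 1
    return honored / total if total else 0.0

# Hypothetical log of two challenges from one session.
example_challenges = [
    ({"width": 1280, "height": 720}, {"width": 1280, "height": 720}),
    ({"frameRate": 15}, {"frameRate": 30}),
]
fraction = honored_fraction(example_challenges)
```

Whether such a value actually separates physical from virtual devices is an empirical question the sketch does not answer; it only shows how challenge responses can become numeric inputs for a classifier.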

This study stands as a significant contribution to the field of biometric security, showcasing the potential of machine learning in creating a protective layer against video injection threats. The model’s ability to identify virtual camera use, derived from authentic user session data, effectively mitigates risks associated with such attacks. The researchers emphasize that while virtual camera detection is most effective when combined with other security measures, it also shows promise as a standalone solution.

Looking ahead, the authors note that their research focuses primarily on virtual camera software and that other attack vectors, such as session hijacking, require distinct mitigation strategies. Future work will aim to improve detection by incorporating richer metadata, exploring temporal patterns, and applying adaptive learning techniques. These advances may lead to a more integrated approach that combines virtual camera detection with complementary security layers, strengthening remote biometric authentication systems against an expanding array of threats.
