Runway has upgraded its video generation model to Gen-4.5 and introduced GWM-1, the company’s first “General World Model.” Gen-4.5 adds native audio generation and editing, along with a multi-shot editing feature that propagates changes made to a single scene across an entire video. The upgrade is aimed at streamlining video production and widening the creative options available to users.

GWM-1 builds on the Gen-4.5 architecture and creates an internal representation of an environment in order to simulate events in real time. The model generates video frame by frame, which allows interactive control through inputs such as camera movements, robot commands, or audio cues. This capability marks a shift toward more immersive and dynamic content creation.

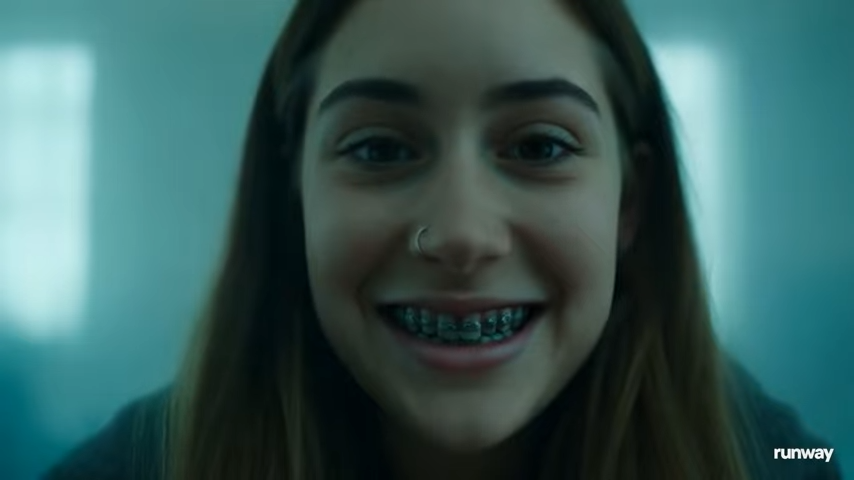

GWM-1 is available in three versions: GWM Worlds, designed for creating explorable environments; GWM Avatars, which generates speaking characters with realistic facial expressions and lip sync; and GWM Robotics, focused on producing synthetic training data for robots. Runway’s long-term vision is to merge these capabilities into a single unified model.

Runway’s advancements come as competition intensifies within the AI sector. Other labs, including Google DeepMind and a startup helmed by AI researcher Yann LeCun, are developing their own world models. These systems are seen as a crucial evolution beyond traditional language models, which often lack a fundamental grasp of the physical world. DeepMind’s CEO, Demis Hassabis, has emphasized that building such models is central to the company’s strategic goal of achieving Artificial General Intelligence (AGI).

In addition to Runway, the field is expanding with significant investments and innovations. World Labs, a startup founded by Fei-Fei Li, recently raised $230 million to develop “Large World Models” (LWMs) equipped with spatial intelligence. The company has unveiled “Marble,” a prototype capable of rendering persistent 3D environments from multimodal prompts. Meanwhile, Munich-based startup Spaitial is creating Spatial Foundation Models intended to generate and interpret 3D worlds with consistent physical dynamics.

Other entrants include the startups Etched and Decart, which have introduced the “Oasis” project. The system is designed to generate playable, Minecraft-style 3D worlds in real time at 20 frames per second. It supports basic interactions such as jumping and picking up objects, but it still struggles with consistency: a simple movement can sometimes leave the player in a different environment.

In August, Chinese technology giant Tencent launched its Hunyuan World Model 1.0, an open-source generative AI model capable of creating 3D virtual scenes from text or image prompts. This move is part of a broader trend among tech companies to explore the capabilities of world models, which are seen as pivotal for enhancing machine understanding of spatial and environmental contexts.

As the race to develop world models accelerates, these advances could change how machines comprehend and interact with the physical world, paving the way for more sophisticated and autonomous systems. Building spatial and temporal awareness into AI models may eventually lead to breakthroughs in fields ranging from robotics to entertainment.
