In a significant advancement for artificial intelligence, the “Diffusion Large Language Model (dLLM)” has gone from a niche concept at the beginning of the year to a model with 100 billion parameters. Two new models have appeared on the HuggingFace platform: LLaDA2.0-mini and LLaDA2.0-flash. Developed jointly by Ant Group, Renmin University, Zhejiang University, and Westlake University, both models use the Mixture of Experts (MoE) architecture. LLaDA2.0-mini has 16 billion parameters and LLaDA2.0-flash has 100 billion, a milestone for the dLLM field.

As these models have grown, so have their capabilities. Across 47 benchmarks covering knowledge, reasoning, coding, mathematics, and agent and alignment tasks, LLaDA2.0-flash achieved an average score of 73.18, close to the 73.60 scored by the well-known autoregressive model Qwen3-30B-A3B-Instruct-2507. LLaDA2.0-flash performed especially well on complex tasks such as the HumanEval and MBPP coding benchmarks.

Large-model text generation has historically been dominated by the autoregressive (AR) approach, which produces text one token at a time from start to finish. This approach has inherent limitations: generating long texts is computationally expensive, inference is slow, and bidirectional dependencies between tokens are hard to capture. Errors in earlier output can also propagate into later tokens and accumulate. The successful scaling of dLLMs, particularly LLaDA2.0, points to a promising alternative. Notably, the rapid progress in this area has not come from repeatedly scaling a single method; it has come from researchers exploring several different routes.

In September, the LLaDA researchers showed that a dLLM could be trained from scratch with an MoE architecture, releasing the 7B LLaDA-MoE as a new take on the diffusion paradigm. Just three months later, the team took a different route: it smoothly converted an existing autoregressive model into a diffusion model and scaled it to 100 billion parameters.

LLaDA2.0 offers a systematic answer to the well-known difficulties of scaling dLLMs. Instead of training from scratch, it performs a “smooth” conversion from an existing autoregressive model. The approach combines a redesigned training paradigm, closer coordination between pre-training and post-training, and optimized training and inference infrastructure.

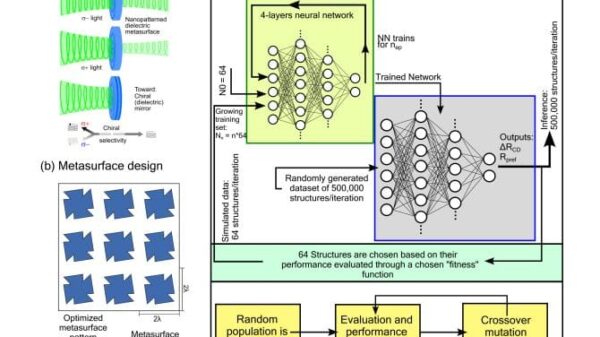

Through Continual Pre-training (CPT), an AR base model was converted into a Masked Diffusion Language Model (MDLM) capable of bidirectional denoising. This let the model preserve the geometric structure of the original AR representations while learning to model larger spans of text. Block Diffusion Pre-training was then introduced to improve long-range generation consistency and computational efficiency. Training concluded with a post-training phase of Supervised Fine-Tuning (SFT), Confidence-Aware Parallel Training (CAP), and Direct Preference Optimization (DPO), which together align the model more closely with user instructions and improve inference efficiency.
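Converting an AR model into an MDLM means training it on a masked-denoising objective instead of next-token prediction. The sketch below shows the standard masked-diffusion loss used by LLaDA-style models: tokens are masked independently with probability `t`, the model predicts the original tokens at the masked positions, and the loss is reweighted by `1/t`. It is an illustration under stated assumptions, not LLaDA2.0's training code; the source does not give the exact loss, and `MASK`, `forward_mask`, `masked_diffusion_loss`, and the `log_prob_fn` callback are hypothetical names standing in for a real tokenizer and network.

```python
import math
import random

MASK = -1  # hypothetical id for the [MASK] token; real tokenizers reserve their own id


def forward_mask(tokens, t, rng):
    """Forward (noising) process: replace each token with MASK independently with probability t."""
    return [MASK if rng.random() < t else tok for tok in tokens]


def masked_diffusion_loss(tokens, noisy, log_prob_fn, t):
    """Single-sample estimate of the masked-diffusion loss at noise level t.

    log_prob_fn(noisy, i, token) stands in for the model: it returns
    log p(token at position i | noisy sequence). Only masked positions
    contribute; the sum is reweighted by 1 / t and normalized by length.
    """
    if not 0.0 < t <= 1.0:
        raise ValueError("t must lie in (0, 1]")
    nll = 0.0
    for i, (clean, seen) in enumerate(zip(tokens, noisy)):
        if seen == MASK:
            nll -= log_prob_fn(noisy, i, clean)
    return nll / (t * len(tokens))


# Usage with a toy "model" that is uniform over a 10-token vocabulary.
rng = random.Random(0)
seq = [5, 6, 7, 8]
t = rng.uniform(0.05, 1.0)  # sample a noise level for this training step
noisy = forward_mask(seq, t, rng)
uniform = lambda noisy, i, tok: -math.log(10)
print(noisy, masked_diffusion_loss(seq, noisy, uniform, t))
```

In expectation a fraction `t` of the tokens is masked, so the `1/t` weight keeps the loss on the scale of an ordinary per-token cross-entropy; with the uniform toy model it averages out to `log 10`.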

Engineering optimizations across LLaDA2.0’s pre-training, post-training, and inference stages further addressed training stability and scalability. Using Megatron-LM as the training backend, the team combined multiple parallelism strategies to sustain high throughput for models at the 100-billion-parameter scale. Improvements to the attention implementation accelerated training end to end and reduced memory consumption.
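Attention is a natural target for optimization in a block-diffusion model because its visibility pattern is neither purely causal nor fully bidirectional. The following is a minimal sketch of the simplified block-causal mask commonly described for block diffusion; it is an illustrative assumption rather than LLaDA2.0's actual kernel, and `block_causal_mask` is a hypothetical helper. Training implementations of block diffusion often use a more elaborate mask (for example, one covering both noisy and clean copies of the sequence).

```python
def block_causal_mask(seq_len, block_size):
    """Boolean attention mask: mask[q][k] is True when query q may attend to key k.

    Positions are grouped into consecutive blocks of `block_size` tokens. A query
    attends bidirectionally within its own block and to every earlier block, but
    never to later blocks.
    """
    if block_size < 1:
        raise ValueError("block_size must be at least 1")
    return [[k // block_size <= q // block_size for k in range(seq_len)]
            for q in range(seq_len)]


# block_size=1 reduces to an ordinary causal (AR) mask;
# block_size >= seq_len gives the fully bidirectional mask of a plain MDLM.
for row in block_causal_mask(6, 2):
    print("".join("x" if allowed else "." for allowed in row))
# xx....
# xx....
# xxxx..
# xxxx..
# xxxxxx
# xxxxxx
```

Choosing a block size between these two extremes keeps AR-style ordering across blocks while allowing tokens inside each block to be denoised in parallel.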

As the dLLM field continues to evolve, LLaDA2.0 shows that diffusion language models can be trained stably and scalably at large parameter counts. Beyond strengthening language models themselves, this positions diffusion-based models to take on complex tasks across many domains and opens new directions for AI research and applications.
