In an increasingly competitive landscape for AI video generation, the Kling 2.6 API offers a practical solution for developers seeking to create short video clips with synchronized audio. Positioned as a serviceable option rather than a groundbreaking model, Kling 2.6 allows users to turn text prompts or images into usable draft footage efficiently. This approach caters to teams that prioritize ease of use and straightforward output over ultimate quality, making it an attractive choice for development projects where rapid iteration is essential.

Accessible through platforms like Kie.ai, the Kling Video 2.6 API lets creators prototype video ideas or add simple generative features to their applications. Because the model is hosted, teams do not have to run or maintain it themselves, which keeps costs and integration effort down for developers who value convenience.

Technical Details

One standout feature of the Kling Video 2.6 API is its support for “native audio,” allowing the generation of visuals and audio tracks in a single request. This integration simplifies the workflow, as developers receive a finished clip complete with voices, ambient sounds, and basic effects, eliminating the need for post-processing audio. Such functionality is particularly beneficial for lightweight content tools where synchronized audio is a necessity.

The API goes beyond typical text-to-video systems by giving developers control over who speaks, what they say, and the emotional tone of the delivery. It can also produce ambient sound and sound effects, offering enough flexibility to adjust pacing and mood without a separate sound design pipeline. The Kling 2.6 API currently supports English and Chinese speech; input in other languages is automatically translated into English for the audio output.

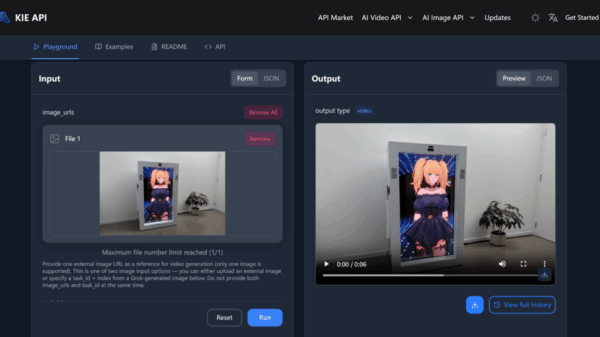

Both the Kling Text to Video and Image to Video APIs are designed for a seamless workflow, allowing developers to submit prompts or image references. The system autonomously handles scene construction, motion, voice generation, and audio mixing. This emphasis on simplicity positions Kling 2.6 as an ideal tool for rapid prototyping, content automation, and applications where quick generation is prioritized over detailed frame control.

Enhanced audio quality is another hallmark of the Kling 2.6 API. It produces vocal tracks, ambient textures, and sound effects as distinct layers, leading to clearer audio and structured mixes compared to earlier iterations. While it does not aim to replace professional production tools, the output is sufficiently detailed for early drafts and consumer applications, reinforcing its practicality for everyday use.

The model also benefits from improved semantic understanding, allowing it to interpret prompts and narrative structures with greater consistency. This enhancement results in outputs that more accurately mirror the creator’s intent, particularly in scenes where dialogue, actions, and environmental cues must align seamlessly.

Integration with the Kling 2.6 API begins with obtaining an API key and selecting the appropriate model endpoint. Developers then send a JSON request to create a video, containing their prompt and optional image URLs. Clip duration is fixed at either 5 or 10 seconds, so output behavior is predictable and client-side handling stays simple. For applications that need asynchronous updates, the request can specify a callback URL that is notified when the model finishes processing, which suits automated pipelines.
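The request described above can be sketched in Python as follows. This is a minimal illustration, not the documented Kie.ai schema: the field names (`prompt`, `duration`, `image_urls`, `callback_url`) and the bearer-token header are assumptions chosen for readability, and the sketch deliberately stops short of sending anything over the network. Only the two allowed durations and the optional image URLs and callback URL come from the description above.

```python
import json

# The two fixed clip lengths mentioned in the article.
ALLOWED_DURATIONS = (5, 10)


def build_video_request(prompt, duration=5, image_urls=None, callback_url=None):
    """Assemble a JSON-serializable request body.

    All field names here are hypothetical placeholders, not the real schema.
    """
    if not prompt or not prompt.strip():
        raise ValueError("prompt must be a non-empty string")
    if duration not in ALLOWED_DURATIONS:
        raise ValueError(
            f"duration must be one of {ALLOWED_DURATIONS}, got {duration}"
        )

    body = {"prompt": prompt.strip(), "duration": duration}
    if image_urls:
        # Optional reference images for image-to-video generation.
        body["image_urls"] = list(image_urls)
    if callback_url:
        # Optional URL to be notified when processing completes.
        body["callback_url"] = callback_url
    return body


def build_headers(api_key):
    """Build request headers; bearer-token auth is an assumption."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


if __name__ == "__main__":
    body = build_video_request(
        "A fox runs through fresh snow while a narrator describes the scene",
        duration=10,
        callback_url="https://example.com/kling-callback",  # placeholder URL
    )
    print(json.dumps(body, indent=2))
```

Validating the duration on the client side means a malformed request is rejected before it is sent, rather than after a round trip to the service.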

Pricing is another appealing aspect of the Kling 2.6 API, which operates on a credit-based, pay-as-you-go model through Kie.ai. A 5-second video without audio costs US $0.28, while a 10-second clip without audio is priced at US $0.55. When audio is included, costs rise to US $0.55 for a 5-second clip and approximately US $1.10 for a 10-second video. These rates are roughly 20% lower than standard pricing, positioning Kling 2.6 as a cost-effective option for experimentation.

The credit system allows teams to start with as little as US $5, with further discounts available for larger purchases. This pricing structure makes the Kling 2.6 API an appealing choice for small-scale video generation or gradual integration into existing products, all without the commitment of a monthly subscription.
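To make the pricing concrete, the short sketch below estimates costs from the per-clip prices quoted above. It treats the "approximately US $1.10" figure as exact and ignores bulk discounts and any credit-to-dollar conversion, so the results are rough planning numbers rather than a billing calculation. The function names are illustrative and not part of any API.

```python
from decimal import Decimal

# Per-clip prices in US dollars as quoted in the article,
# keyed by (duration in seconds, audio included).
PRICES_USD = {
    (5, False): Decimal("0.28"),
    (10, False): Decimal("0.55"),
    (5, True): Decimal("0.55"),
    (10, True): Decimal("1.10"),  # quoted as approximate
}


def estimate_cost(clips):
    """Total cost for an iterable of (duration, with_audio) pairs."""
    return sum((PRICES_USD[(d, a)] for d, a in clips), Decimal("0"))


def clips_within_budget(budget_usd, duration, with_audio):
    """Number of whole clips of one kind that fit in a budget."""
    price = PRICES_USD[(duration, with_audio)]
    return int(Decimal(str(budget_usd)) // price)


if __name__ == "__main__":
    # One silent 5-second clip plus one 10-second clip with audio.
    print(estimate_cost([(5, False), (10, True)]))  # 1.38
    # How far the US $5 starting amount goes.
    print(clips_within_budget(5, 5, False))   # 17
    print(clips_within_budget(5, 10, True))   # 4
```

Under these assumptions, the US $5 entry point covers 17 silent 5-second clips or 4 ten-second clips with audio. `Decimal` is used instead of floats so that the cent values add up exactly.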

The Kling Video 2.6 API finds numerous applications across various sectors. It serves as a rapid prototyping tool for creative teams, enabling the quick generation of short scenes using only text. The integrated audio capability simplifies the production of clips complete with voices and sound effects, facilitating the testing of narrative concepts within consumer applications.

Design and content applications can also use the Kling Image to Video API to animate static visuals, offering “instant motion drafts” for moodboards or templates. This spares teams from building and maintaining a custom animation pipeline.

In marketing and social applications, the Kling Text to Video API aids in creating quick promotional snippets or onboarding visuals. The combination of text prompts with automatic audio narration allows for the swift generation of coherent clips, addressing the speed and efficiency requirements typical of marketing workflows.

As demand for user-friendly AI video features grows, the Kling 2.6 API demonstrates how streamlined outputs and integrated audio generation can mitigate challenges associated with video production. By focusing on delivering reliable results for developers, Kling 2.6 contributes to an evolving landscape of practical video generation tools that prioritize functionality over spectacle.
