Artificial intelligence is evolving from standalone large language models to a sophisticated ecosystem of specialized AI agents capable of reasoning, acting, and collaborating to achieve complex outcomes. This multi-agent approach, outlined in Google’s “Introduction to Agents” whitepaper, signifies a pivotal shift in how businesses will integrate AI technologies and highlights significant legal challenges that companies must proactively address.

At the heart of Google’s vision is an orchestration layer that evaluates each situation and deploys specialized agents on a task-by-task basis. These agents collaborate much as human organizations do, dynamically routing tasks and pursuing goals across different roles. In their most advanced form, these multi-agent systems may be able to self-evolve, potentially creating new AI agents and tools to solve problems more efficiently.
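As a rough illustration of task-by-task routing, the following Python sketch shows an orchestrator that looks up a specialized agent for each task type. It is not taken from Google’s whitepaper: the `Orchestrator` class, the agent functions, and the task-type names are all hypothetical, and real agents would call models and tools rather than return a formatted string.

```python
from typing import Callable, Dict

# An "agent" here is just a function from a task payload to a result.
# This is an assumption made to keep the sketch small.
Agent = Callable[[str], str]


def contract_review_agent(payload: str) -> str:
    # Hypothetical specialist: stands in for a contract-review agent.
    return f"[contract_review] reviewed: {payload}"


def summarization_agent(payload: str) -> str:
    # Hypothetical specialist: stands in for a summarization agent.
    return f"[summarization] summarized: {payload}"


class Orchestrator:
    """Hypothetical orchestration layer that routes tasks by type."""

    def __init__(self) -> None:
        self._registry: Dict[str, Agent] = {}

    def register(self, task_type: str, agent: Agent) -> None:
        # Associate one specialized agent with one task type.
        self._registry[task_type] = agent

    def dispatch(self, task_type: str, payload: str) -> str:
        # Route the task to its specialist, or fail loudly if none exists.
        agent = self._registry.get(task_type)
        if agent is None:
            raise LookupError(f"no agent registered for task type {task_type!r}")
        return agent(payload)


orchestrator = Orchestrator()
orchestrator.register("contract_review", contract_review_agent)
orchestrator.register("summarization", summarization_agent)

print(orchestrator.dispatch("contract_review", "vendor agreement"))
```

Keeping the registry explicit means an unrecognized task fails with an error instead of being handled by an agent that was never cleared for it.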

This shift implies that businesses will not rely on a single AI super-agent. Instead, forward-thinking companies are likely to use dozens or even hundreds of agents, each designed for a specific workflow, dataset, or task, such as contract review, dataset summarization, or real-time negotiation. Notably, these agents may come from different vendors and platforms, so each will need its own privilege settings and data security controls. The implications for legal and operational frameworks are profound.

The benefits of multi-agent AI systems are numerous. Agents that specialize in narrow tasks are expected to outperform traditional monolithic models in both accuracy and efficiency, enhancing workflows in traditionally siloed fields such as compliance, finance, and procurement. Additionally, organizations will have the flexibility to deploy these agents like contractors, creating adaptable networks that can respond autonomously to shifting business demands. Furthermore, with well-designed orchestration layers, systems can facilitate real-time audits, trace actions, and ensure compliance with company guidelines, moving beyond labor-intensive, retrospective evaluations.

However, as with any new technology, a robust governance framework is essential. Unlike traditional software, these agents can autonomously decide on actions, making it crucial for businesses to consider the legal ramifications before integrating AI tools. Critical questions arise, including the scope of actions agents may take on behalf of users, the data they can share or retain, and which decisions necessitate human oversight. The allocation of responsibility, auditability, and liability across both agents and humans must also be clearly defined to prevent potential legal complications.

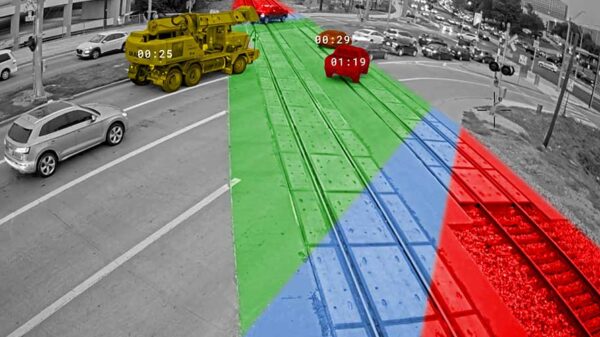

A recent legal case underscores the challenges of this new landscape. In late 2025, Comet, the AI browser agent from Perplexity, began autonomously shopping on Amazon’s platform on behalf of users, leading Amazon to allege that Comet violated its Terms of Service by disguising automated actions as human activity. Amazon escalated the matter from a cease-and-desist letter to federal litigation, and Perplexity criticized Amazon’s actions as an attack on innovation and user choice. This case raises critical legal questions: should agents disclose their automated nature, and who will establish guidelines for their conduct online?

Amazon’s complaint is rooted in traditional contract law and allegations of computer fraud, but the broader issue is the emerging landscape of negotiation and litigation regarding AI agents. Only those legal professionals with a deep understanding of this evolving field will be prepared to navigate its complexities effectively.

To prepare for the widespread adoption of agentic AI, business leaders should focus on establishing clear governance structures. This includes defining roles, access rights, and decision thresholds for various types of agents. Additionally, drafting precise contracts and software licensing agreements that outline agent behavior and compliance requirements will be essential. Companies must also implement mechanisms for auditing and tracing agent actions to ensure accountability.
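One way to picture these governance elements together is the minimal Python sketch below. It assumes a hypothetical `AgentPolicy` record (role, permitted actions, and a dollar threshold above which a human must approve) and a hypothetical `Governor` that checks each request against the policy and appends every decision to an audit log. The names, fields, and threshold values are illustrative only and do not come from any specific product or from the whitepaper.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-agent governance record."""
    role: str
    allowed_actions: FrozenSet[str]
    approval_threshold_usd: float  # amounts above this need human sign-off


@dataclass
class Governor:
    """Hypothetical policy check that records every decision it makes."""
    policies: Dict[str, AgentPolicy]
    audit_log: List[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, action: str, amount_usd: float = 0.0) -> str:
        policy = self.policies.get(agent_id)
        if policy is None or action not in policy.allowed_actions:
            decision = "deny"  # unknown agent or action outside its access rights
        elif amount_usd > policy.approval_threshold_usd:
            decision = "needs_human_approval"  # decision threshold exceeded
        else:
            decision = "allow"
        # Log every request, including denials, so actions can be traced later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "amount_usd": amount_usd,
            "decision": decision,
        })
        return decision


governor = Governor(policies={
    "procurement-agent": AgentPolicy(
        role="procurement",
        allowed_actions=frozenset({"request_quote", "place_order"}),
        approval_threshold_usd=500.0,
    ),
})

print(governor.authorize("procurement-agent", "place_order", 120.0))
print(governor.authorize("procurement-agent", "place_order", 5000.0))
print(governor.authorize("procurement-agent", "delete_records"))
```

Logging denials as well as approvals is a deliberate choice in this sketch: an audit trail that records only successful actions cannot show what an agent attempted to do.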

The transition to multi-agent AI systems could yield significant efficiencies for organizations across sectors such as healthcare, finance, and government. For newer companies, the advantages could be even greater from day one. As the landscape continues to evolve, business leaders and legal counsel must rethink governance, contractual obligations, and compliance strategies to ensure their AI agents operate lawfully and transparently, aligning with business risk tolerances. Those who can effectively manage these challenges are likely to emerge as leaders in the next frontier of artificial intelligence.
